J.E. Gordon, a leading engineer at the Royal Aircraft Establishment at Farnborough and holder of the British Silver Medal of the Royal Aeronautical Society, wrote two brilliant books on engineering: “The New Science of Strong Materials” and “Structures – Or Why Things Don’t Fall Down”. Elon Musk has recommended the latter of the two books, and I can only encourage you to read both. In my eyes, the role of a good non-fiction writer is to explain the intricacies of a non-trivial topic that we can see all around us but nevertheless rarely fully appreciate. Something interesting hidden in plain sight, if you will.

With this in mind, let’s discuss an underappreciated topic from the world of materials science.

First of all, what do we mean by a material’s stiffness and strength?

To be able to compare the load and deformation acting on components of different sizes, engineers prefer to use the quantities of stress and strain over load and deformation. Imagine a solid rod of a certain diameter and length which is being pulled apart in tension. Naturally, two rods of the same material but of different diameters and lengths will deform by different amounts. However, if both rods are stressed by the same amount, then they will experience the same amount of strain. In our simple one-dimensional rod example, the stress is given by

$$\sigma = \frac{F}{A}$$

where $F$ is the tensile force and $A = \pi d^2 / 4$ is the cross-sectional area for a diameter $d$, i.e. force normalised by cross-sectional area.

The engineering strain is given by

$$\varepsilon = \frac{\Delta L}{L}$$

where $\Delta L$ is the change in length (deformation) of the rod and $L$ is its original length, i.e. the deformation normalised by original length.

For an elastic material deforming linearly (i.e. no plastic deformation), the ratio of stress to strain is constant, and for our simple one-dimensional example the constant of proportionality is equal to the stiffness of the material,

$$E = \frac{\sigma}{\varepsilon}$$

(Hooke’s Law), with $\sigma$ the stress and $\varepsilon$ the strain.

This stiffness is known as the Young’s modulus of the material.

These two definitions of stress and strain illustrate a simple point. By dividing force by cross-sectional area and change in length (deformation) by original length, the role of geometry is eliminated entirely. This means we can deal purely in terms of material properties, i.e. Young’s modulus (stiffness), stress to failure (strength), etc., and can therefore compare the degree of loading (stress) and deformation (strain) in components of different sizes, shapes, dimensions, etc.
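To make the point about geometry concrete, here is a minimal Python sketch. It assumes two hypothetical round rods of the same material, an illustrative Young's modulus of 200 GPa (roughly that of steel) and a target stress of 100 MPa; none of these numbers come from the text above. The helper name `rod_response` is also just an illustration.

```python
import math


def rod_response(force_n, diameter_m, length_m, youngs_modulus_pa):
    """Return (stress in Pa, strain, elongation in m) for a solid round rod in tension."""
    area = math.pi * diameter_m ** 2 / 4.0   # cross-sectional area, A = pi d^2 / 4
    stress = force_n / area                  # sigma = F / A
    strain = stress / youngs_modulus_pa      # Hooke's law, epsilon = sigma / E
    elongation = strain * length_m           # delta L = epsilon * L
    return stress, strain, elongation


E_ASSUMED = 200e9       # Pa, illustrative value (roughly that of steel)
TARGET_STRESS = 100e6   # Pa, the same stress applied to both rods

# Two hypothetical rods of the same material: (diameter in m, length in m)
rods = {"thin": (0.010, 1.0), "thick": (0.020, 2.0)}

results = {}
for name, (d, length) in rods.items():
    # Force needed to bring this rod to the target stress
    force = TARGET_STRESS * math.pi * d ** 2 / 4.0
    results[name] = (force, *rod_response(force, d, length, E_ASSUMED))
    force_n, stress, strain, elongation = results[name]
    print(f"{name}: F = {force_n:.0f} N, stress = {stress / 1e6:.1f} MPa, "
          f"strain = {strain:.2e}, elongation = {elongation * 1e3:.2f} mm")
```

The two rods need very different forces and stretch by different amounts, yet at the same stress they show the same strain, which is why stress and strain are the natural quantities for comparing materials.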

We can all appreciate that metals are incredibly strong and stiff. But why are some materials stronger and stiffer than others? Why don’t all materials have the same strength and stiffness? Aren’t all materials just an assemblage of molecules and atoms whose molecular bonds stretch and eventually separate upon fracture? If this is so, why don’t all materials break at the same value of stress and strain?

The stiffness and strength of a material do indeed depend on the relative stiffness and strength of the underlying chemical bonds, and these do vary from material to material. But this difference is not sufficient to explain the large variations in strength that we observe for materials such as steel and glass – that is, why does glass break so easily when steel does not?

In the 1920s, a British engineer called A.A. Griffith explained for the first time why different materials have such vastly different strengths. To calculate the theoretical maximum strength of a material, we need to use the concept of strain energy. When we stretch a rod by 1 mm with a force that builds up linearly from zero to 1,000 N, the 0.5 J of work we do (half of 0.001 m times 1,000 N) is stored within the material as strain energy because individual atomic bonds are essentially stretched like mechanical springs. Written in terms of stresses and strains, the strain energy $u$ stored within a unit volume of material is simply half the product of stress and strain:

$$u = \frac{1}{2}\sigma\varepsilon$$

Griffith’s brilliant insight was to equate the strain energy stored in the material just before fracture to the surface energy of the two new surfaces created upon fracture.

Surface energy??

It is probably not immediately obvious why a surface would possess energy. But from watching insects walk over water we can observe that liquids must possess some form of surface tension that stops the insect from breaking through the surface. When the surface of a liquid is extended, say by inflating a soap bubble, work is done against this surface tension and energy is stored within the surface. Similarly, when an insect is perched on the surface of a pond, its legs form small dimples on the surface of the water and this deformation causes an increase in the surface energy. In fact, we can calculate how far the insect sinks into the surface by equating the increase in surface energy to the decrease in gravitational potential energy as the insect sinks. Furthermore, liquids tend to minimise their surface energy under the geometrical and thermodynamic constraints placed upon them, and this is precisely why raindrops are spherical and not cubic.

When a liquid freezes into a solid, the underlying molecular structure changes, but the overall surface energy remains largely the same. Because the molecular bonds in solids are so much stronger than those in liquids, we can’t actually see the effect of surface tension in solids (an insect landing on a block of ice will not visibly dimple the external surface). Nevertheless, the physical concept of surface energy is still valid for solids.

So, back to our fracture problem. What we want to calculate is the stress which will separate two adjacent rows of molecules within a material. If the rows of molecules are initially $a$ metres apart then a stress $\sigma$ causing a strain $\varepsilon$ will lead to the following strain energy per square metre

$$U = \frac{1}{2}\sigma\varepsilon a$$

From Hooke’s law we know that

$$\varepsilon = \frac{\sigma}{E}$$

where $E$ is the Young’s modulus, and therefore replacing in the first equation we have

$$U = \frac{\sigma^2 a}{2E}$$

Now, if the surface energy per square metre of the solid is equal to $G$, then the separation of the two rows of molecules will lead to an increase in surface energy of $2G$ (two new surfaces are created). By assuming that all of the strain energy is converted to surface energy:

$$\frac{\sigma^2 a}{2E} = 2G \quad \Rightarrow \quad \sigma = 2\sqrt{\frac{GE}{a}}$$

There is typically a considerable amount of plastic deformation in the material before the atomic bonds rupture. This means that the Young’s modulus decreases once the plastic regime is reached and the strain energy is roughly half of the ideal elastic case. Hence, we can simply drop the 2 in front of the square root above to get a simple, yet approximate, expression for the strength of a material

$$\sigma \approx \sqrt{\frac{GE}{a}}$$

As the values of the surface energy $G$ and the Young’s modulus $E$ vary from material to material, the theoretical strengths will be different as well. The surface tension of a material is roughly proportional to the Young’s modulus because the same chemical bonds give rise to both these properties. In fact, the relationship between surface energy and Young’s modulus can be approximated as

$$G \approx \frac{Ea}{25}$$

where $a$ is the molecular spacing, such that the strength of a material is approximately proportional to the Young’s modulus by the following relation

$$\sigma \approx \sqrt{\frac{GE}{a}} \approx \frac{E}{5}$$

Given the relationship between stress and strain, we can conclude that the theoretical failure strain of most materials ought to be, approximately,

$$\varepsilon = \frac{\sigma}{E} \approx \frac{1}{5}$$

or 20% for basically all materials.
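The estimate above can be checked numerically. The sketch below writes $E$ for the Young's modulus, $G$ for the surface energy per square metre and $a$ for the molecular spacing, and evaluates the strength as $\sqrt{GE/a}$. The values of 70 GPa for the Young's modulus of glass and 3 Angstroms for the spacing are assumptions chosen for illustration, and the surface energy is taken from the rule of thumb $G \approx Ea/25$ that corresponds to a 20% failure strain.

```python
import math


def theoretical_strength(youngs_modulus_pa, surface_energy_j_m2, spacing_m):
    """Approximate theoretical strength in Pa: sigma = sqrt(G * E / a)."""
    return math.sqrt(surface_energy_j_m2 * youngs_modulus_pa / spacing_m)


E_GLASS = 70e9      # Pa, assumed Young's modulus of glass (illustrative)
SPACING = 3e-10     # m, assumed molecular spacing (illustrative)

# Rule-of-thumb surface energy, G ~ E * a / 25 (an approximation, not a measured value)
G_GLASS = E_GLASS * SPACING / 25.0

sigma_max = theoretical_strength(E_GLASS, G_GLASS, SPACING)
failure_strain = sigma_max / E_GLASS   # Hooke's law, epsilon = sigma / E

print(f"surface energy   : {G_GLASS:.2f} J/m^2")
print(f"theoretical limit: {sigma_max / 1e6:,.0f} MPa")
print(f"failure strain   : {failure_strain:.0%}")
```

Because $G$ is taken proportional to $Ea$, the spacing cancels out and the result depends only on the Young's modulus. With the assumed modulus, this gives a theoretical strength of about 14,000 MPa.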

In everyday practice, most materials have failure strengths far beneath the theoretical maximum and also vary widely in their failure strains. To explain why, Griffith conducted some simple experiments on glass. After calculating the Young’s modulus from a simple tensile test and assuming a molecular spacing of a few Angstroms, Griffith arrived at a theoretical strength for glass of 14,000 MPa. Griffith then tested a number of 1 mm diameter glass rods in tension and found the strength to be on average around 170 MPa, i.e. roughly $1/80$th of the theoretical value.

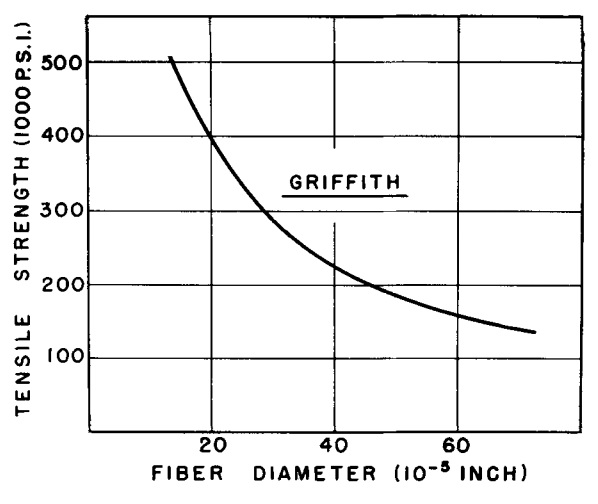

The pultrusion process used to create the glass rods allowed Griffith to pull thinner and thinner rods, and as the diameter decreased, the failure stress of the rods started to increase – slowly at first, but then very rapidly. Glass fibres of 2.5 µm in diameter showed strengths of 6,000 MPa when newly drawn, but dropped to about half that after a few hours. Griffith was not able to manufacture smaller rods so he fitted a curve to his experimental data and extrapolated to much smaller diameters. And lo and behold, the exponential curve converged to a failure strength of 11,000 MPa – much closer to the 14,000 MPa predicted by his theory.

Variation of tensile strength with fibre diameter. From W.H. Otto (1955). Relationship of Tensile Strength of Glass Fibers to Diameter. Journal of the American Ceramic Society 38(3): 122-124.

Griffith’s next goal was to explain why the strength of thicker glass rods fell so far below the theoretical value. Griffith surmised that as the volume of a specimen increases, some form of weakening mechanisms must be active because the underlying chemical structure of the material remains the same. This weakening mechanism must somehow lead to an increase in the actual stress around a future failure site and act as a stress concentration. Luckily, the idea of stress concentrations had previously been introduced in the naval industry, where the weakening effects of hatchways and other openings in the hull had to be accounted for. Griffith decided that he would apply the same concept at a much smaller scale and consider the effects of molecular “openings” in a series of chemical bonds.

The idea of a stress concentration is quite simple. Any hole or sharp notch in a material causes an increase in the local stress around the feature. Rather counter-intuitively, the increase in local stress is solely a function of the shape of the notch and not of its size. A tiny hole will weaken the material just as much as a large one of the same shape will. This means a shallow cut in a branch will lower the load-carrying capacity just as much as a deep one – it is the sharpness of the cut that increases the stress.

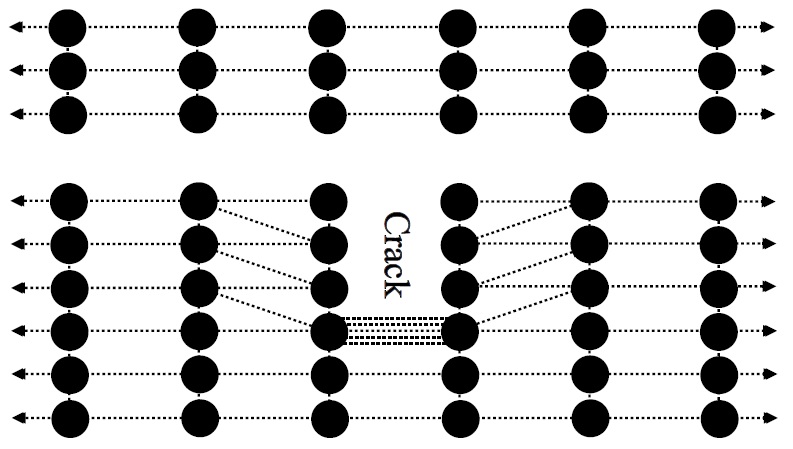

We can visualise quite easily what must happen at a molecular scale when we introduce a notch in a series of molecules. A single strand of molecules must reach the maximum theoretical strength. Similarly, placing a number of such strands side by side should not affect the strength. However, if we cut a number of adjacent strands at a specific location perpendicular to the loading direction, then the flow of stress from molecule to molecule has been interrupted and the load in the material has to be redistributed to somewhere else. Naturally, the extra load simply goes around the notch and will therefore have to pass through the first intact bond. As a result, this bond will fail much earlier than any of the other bonds as the stress is concentrated in this single bond. As this overloaded bond breaks, the situation becomes slightly worse because the next bond down the line has to carry the extra load of all the broken bonds.

The stress concentration factor of a notch of half-length $c$ and radius of curvature $\rho$ at the crack tip is given by

$$K_t = 1 + 2\sqrt{\frac{c}{\rho}}$$

If we now consider a crack about 2 µm long (a half-length of 1 µm) with a tip radius of 1 Angstrom, this produces a stress concentration factor of

$$K_t = 1 + 2\sqrt{\frac{10^{-6}}{10^{-10}}} = 201$$

and therefore this would lower the theoretical strength of glass from 14,000 MPa to around 70 MPa, which is very close to the average strength of typical domestic glass.
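The arithmetic of this estimate can be sketched in a few lines of Python. The function below assumes the standard form of the stress concentration factor for a notch, one plus twice the square root of half-length over tip radius, and uses the crack dimensions quoted in the text (2 µm long, 1 Angstrom tip radius). The function name is only illustrative.

```python
import math


def stress_concentration_factor(half_length_m, tip_radius_m):
    """Stress concentration factor of a notch: K_t = 1 + 2 * sqrt(c / rho)."""
    return 1.0 + 2.0 * math.sqrt(half_length_m / tip_radius_m)


THEORETICAL_STRENGTH_MPA = 14_000.0   # theoretical strength of glass quoted in the text
CRACK_LENGTH_M = 2e-6                 # 2 micrometre long crack
TIP_RADIUS_M = 1e-10                  # 1 Angstrom tip radius

k_t = stress_concentration_factor(CRACK_LENGTH_M / 2.0, TIP_RADIUS_M)
practical_strength_mpa = THEORETICAL_STRENGTH_MPA / k_t

print(f"stress concentration factor: {k_t:.0f}")
print(f"practical strength         : {practical_strength_mpa:.0f} MPa")
```

Only the ratio of crack half-length to tip radius enters the formula, which is the numerical counterpart of the statement that sharpness, not absolute size, governs the weakening.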

As a result, Griffith made the conjecture that glass and all other materials are full of tiny little cracks that are too small to be seen but nevertheless significantly reduce the theoretical maximum strength. Griffith did not give an explanation for why these cracks appeared in the first place or why they were rarer for thinner glass rods. As it turns out, Griffith was correct about the mechanism of stress concentrations, but wrong about the origin of the cracks.

It took quite some time until a more satisfactory explanation was provided, dispelling the notion that the reduction in strength could be attributed to inherent defects within the material. After WWII, experiments showed that even thick glass rods could approach the theoretical upper limit of strength when carefully manufactured. It was also noticed that stronger fibres would weaken over time, probably as a result of handling, and that weakened fibres could consequently be strengthened again by chemically removing the top surface. By depositing sodium vapour on the external surface of glass, the density of cracks could be visualised and was found to be inversely proportional to the strength of the glass – the more cracks, the lower the strength, and vice versa.

These cracks are a simple result of scratching when the exterior surface comes in contact with other objects. Larger pieces of glass are more likely to develop surface cracks due to the larger surface area. Furthermore, thin glass fibres are much more likely to bend when in contact with other objects, and are therefore less likely to scratch. This means that there is nothing special about thin fibres of glass – if the surface of a thick fibre can be kept just as smooth as that of a thin fibre then it will be just as strong.

This means that an airplane cast from one piece of 100% pristine glass could theoretically sustain all flight loads. In reality such an idea is ludicrous, because the likelihood of inducing surface cracks during service is basically 100%.

At this point you might be asking, what is different about metals – why are they used on aircraft instead?

The difference boils down to the atomic structure of glasses and metals. When liquids freeze they typically crystallise into a densely packed array and form a solid that is denser than the liquid. Glasses on the other hand do not arrange themselves into a nicely packed crystalline structure but rather cool into a solidified liquid. Glasses can crystallise under some circumstances through a process known as devitrification, but the glass is often weakened as a result. When a solid crystallises, it can deform via a new process in which it starts to flow in shear just like Plasticine or moulding clay does when it is formed.

There is no clear demarcation line between a brittle (think glass) and ductile (think metal) material. The general rule of thumb is that a brittle material does not visibly deform before failure and failure is caused by a single crack that runs smoothly through the entire material. This is why it’s often possible to glue a broken vase back together.

In ductile materials, there is permanent plastic deformation before ultimate failure and so these materials behave more like moulding clay. Before a ductile material, like mild steel, finally snaps in two, there is considerable plastic deformation which can be imagined along the lines of flowing honey or treacle. This plastic flowing is caused by individual layers of atoms sliding over each other, rather than coming apart directly. As this shearing of atomic bonds takes place, the material is not significantly weakened because the atomic bonds have the ability to re-order, and the material may even be strengthened by a process known as cold working (atomic bonds align with the direction of the applied load). The amount of shearing before final failure depends largely on the type of metal alloy and always increases as a metal is heated; hence a blacksmith heats metal before shaping it.

Generally, these two fracture mechanisms, brittle cracking and plastic flowing, are always competing in a solid. The material will fail by whichever mechanism is weakest: it will yield before cracking if it is ductile, or crack directly if it is brittle.

From Wright Flyer to Space Shuttle

On December 17 1903, the bicycle mechanic Orville Wright completed the first successful flight in a heavier-than-air machine, a flight that lasted a mere 12 seconds, reached an altitude of 10 feet and ended 120 feet from the starting point. The Wright Flyer was made of wood and canvas, powered by a 12 horsepower internal combustion engine and endowed with the first, yet basic, mechanisms for controlling pitch, yaw and roll. Only 66 years later, Neil Armstrong walked on the moon, and another 12 years later the first partially re-usable space transportation system, the Space Shuttle, made its way into orbit.

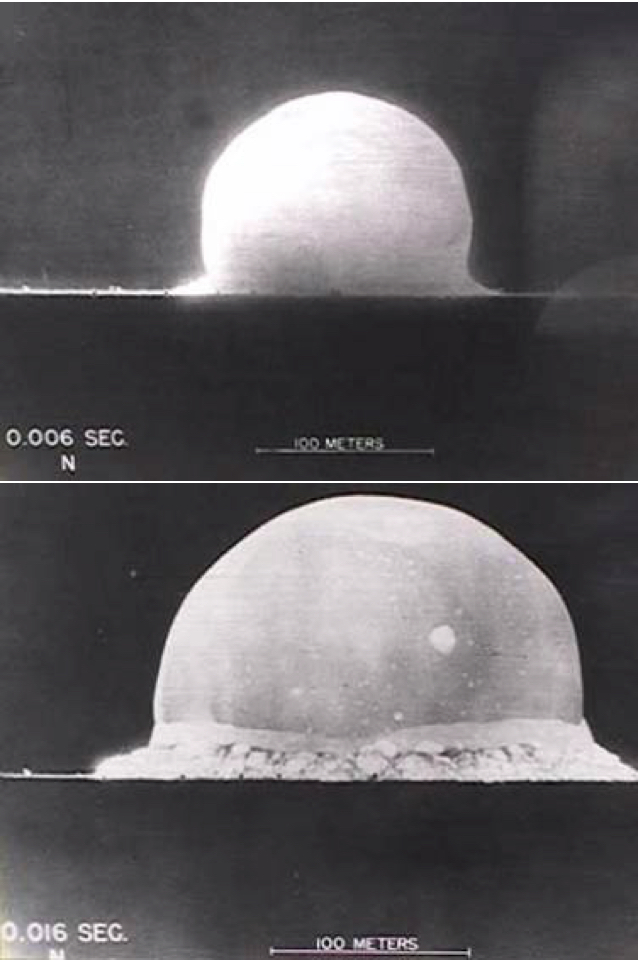

Even though the means of providing lift and attitude control in the Wright Flyer and the Space Shuttle were nearly identical, the operational conditions could not be more different. The Space Shuttle re-entered the atmosphere at an orbital velocity of 8 km/s (28x the speed of sound), which meant that the Shuttle literally collided with the atmosphere, creating a hypersonic shock wave with gas temperatures close to 12,000°C, hotter than the surface of the sun. How was such unprecedented progress – from Wright Flyer to Space Shuttle – possible in a mere 78 years? This blog post chronicles this technological evolution by telling the story of five iconic aircraft.

The Wright brothers were the first to successfully fly what we now consider a modern airplane, but as the brothers would adamantly confirm, they did not invent the airplane. Rather, the brothers stood on the shoulders of a century-old keen interest in aeronautical research. The story of the modern airplane goes back to about 100 years before the Wright brothers, to a relatively unknown British scientist, philosopher, engineer and member of parliament, Sir George Cayley. Although Leonardo da Vinci had thought up flying machines 300 years prior to this, his inventions have relatively little in common with modern designs. In 1799 Cayley proposed the first three-part concept that, to this day, represents the fundamental operating principles of flying:

- A fixed wing for creating lift.

- A separate mechanism using paddles to provide propulsion.

- And a cruciform tail for horizontal and vertical stability.

Many of the flying enthusiasts of the 18th century based their designs on the biomimicry of birds, combining lift, propulsive and control functions in a single oversized wing contraption that was insufficient at providing lift or forward propulsion, let alone a means of control. During a decade of intensive study of the aerodynamics of birds and fish from 1799-1810, Cayley constructed a series of rotating airfoil apparatuses that tested the lift and drag of different airfoil shapes. In 1852, Cayley published his most famous work “Sir George Cayley’s Governable Parachutes”, which detailed the blueprint of a large glider with almost all of the features we take for granted on a modern aircraft. A prototype of this glider was built in 1853 and flown by Cayley’s coachman, accelerating the prototype off the rooftop of Cayley’s house in Yorkshire.

The distinctive characteristic of the Wright brothers was their incessant persistence and never-ending scepticism of the research conducted by scientists of authority. By single-handedly revising the historic textbook data on airfoils and building all of their inventions themselves, they developed into the most experienced aeronautical engineers of their day. Engineering often requires a certain intuitive knowledge of what works and what doesn’t, typically acquired through first-hand experience, and the Wright brothers had developed this knack in abundance. In this sense, they were best-equipped to refine the concepts of their peers and develop them into something that superseded everything that came before.

One of the most potent signals of British defiance in WWII is the Supermarine Spitfire. In the summer of 1940, during the Battle of Britain, the Spitfire presented the last bulwark between tyranny and democracy. Between July and October 1940, 747 Spitfires were built of which 361 were destroyed and 352 were damaged. Just 34 Spitfires that were built during the summer of 1940 made it through the war unscathed. Unsurprisingly, the Spitfire is one of the most famous airplanes of all time and its aerodynamic beauty of elliptical wings and narrow body make it one of the most iconic aircraft ever built.

The Spitfire was designed by the chief engineer of Supermarine, RJ Mitchell. Before WWII Mitchell led the construction of a series of seaplanes that won the Schneider Trophy three times in a row in 1927, 1929 and 1931. The Schneider Trophy was the most important aviation competition between WWI and WWII – initially intended to promote technical advances in civil aviation, it quickly morphed into a pure speed contest over a triangular course of around 300 km. As competitions so often do, the Schneider Trophy became an impetus for advancing aeroplane technology, particularly in aerodynamics and engine design. In this regard the Schneider Trophy had a direct impact on many of the best fighters of WWII. The low drag profile and liquid-cooled engine which were pioneered during the Schneider Trophy were both features of the Supermarine Spitfire and the P-51 Mustang.

The winning airplane in 1931 was the Supermarine S.6B, setting a new airspeed record of 655.8 km/h (407.4 mph). The S.6B was powered by the supercharged Rolls-Royce R engine with 1900 bhp, which presented such severe cooling problems that surface radiators had to be attached to the buoyancy floats used to land on water. In March 1936, Mitchell evolved the S.6B into the Spitfire with a new Rolls-Royce Merlin engine.

The Spitfire also featured a radical elliptical wing design which promised to minimise lift-induced drag. Theoretically, an infinitely long wing of constant chord and airfoil section produces no induced drag. A rectangular wing of finite length, however, produces very strong wingtip vortices, and as a result almost all modern wings are tapered towards the tips or fitted with wing tip devices. The advantage of an elliptical planform (tapered but with curved leading and trailing edges) over a tapered trapezoidal planform is that the effective angle of attack of the wing can be kept constant along the entire wingspan.

Elliptical wings are probably a remnant of the past as they are much more difficult to manufacture and the benefit over a trapezoidal wing is negligible for the long wing spans of commercial jumbo jets. However, the design will forever live on in one of the most iconic fighters of all time, the Supermarine Spitfire.
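The induced-drag argument can be illustrated with the classical lifting-line estimate $C_{D,i} = C_L^2 / (\pi e AR)$, where $AR$ is the aspect ratio and $e$ is the span efficiency factor ($e = 1$ for an ideal elliptical lift distribution). This formula is not stated in the text above; it is brought in here as a standard textbook result. The lift coefficient, aspect ratios and the efficiency of 0.9 for a non-elliptical wing are illustrative assumptions, not data for any particular aircraft.

```python
import math


def induced_drag_coefficient(lift_coefficient, aspect_ratio, span_efficiency=1.0):
    """Lifting-line estimate of induced drag: C_Di = C_L^2 / (pi * e * AR)."""
    if not 0.0 < span_efficiency <= 1.0:
        raise ValueError("span efficiency must lie in (0, 1]")
    return lift_coefficient ** 2 / (math.pi * span_efficiency * aspect_ratio)


C_L = 0.5   # assumed lift coefficient (illustrative)

# Elliptical lift distribution (e = 1) versus an assumed less efficient planform (e = 0.9)
cdi_elliptical = induced_drag_coefficient(C_L, aspect_ratio=6.0, span_efficiency=1.0)
cdi_other = induced_drag_coefficient(C_L, aspect_ratio=6.0, span_efficiency=0.9)

# A much longer wing at the same lift coefficient: induced drag shrinks with aspect ratio
cdi_long_wing = induced_drag_coefficient(C_L, aspect_ratio=60.0)

print(f"elliptical, AR = 6 : {cdi_elliptical:.5f}")
print(f"e = 0.9,    AR = 6 : {cdi_other:.5f}")
print(f"elliptical, AR = 60: {cdi_long_wing:.5f}")
```

The induced drag falls towards zero as the aspect ratio grows, which matches the statement about infinitely long wings, and for a given span the elliptical distribution gives the lowest value.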

Captain Chuck Yeager, an American WWII fighter ace, became the first supersonic pilot in 1947 when the chief test pilot for the Bell Corporation refused to fly the rocket-powered Bell X-1 experimental aircraft without any additional danger pay. The X-1 closely resembled a large bullet with short stubby wings for higher structural efficiency and less drag at higher speeds. The X-1 was strapped to the belly of a B-29 bomber and then dropped at 20,000 feet, at which point Yeager fired his rocket motors propelling the aircraft to Mach 0.85 as it climbed to 40,000 feet. Here Yeager fully opened the throttle, pushing the aircraft into a flow regime for which there was no available wind tunnel data, ultimately reaching a new airspeed record of Mach 1.06. Yeager had just achieved something that had eluded Europe’s aircraft engineers through all of WWII.

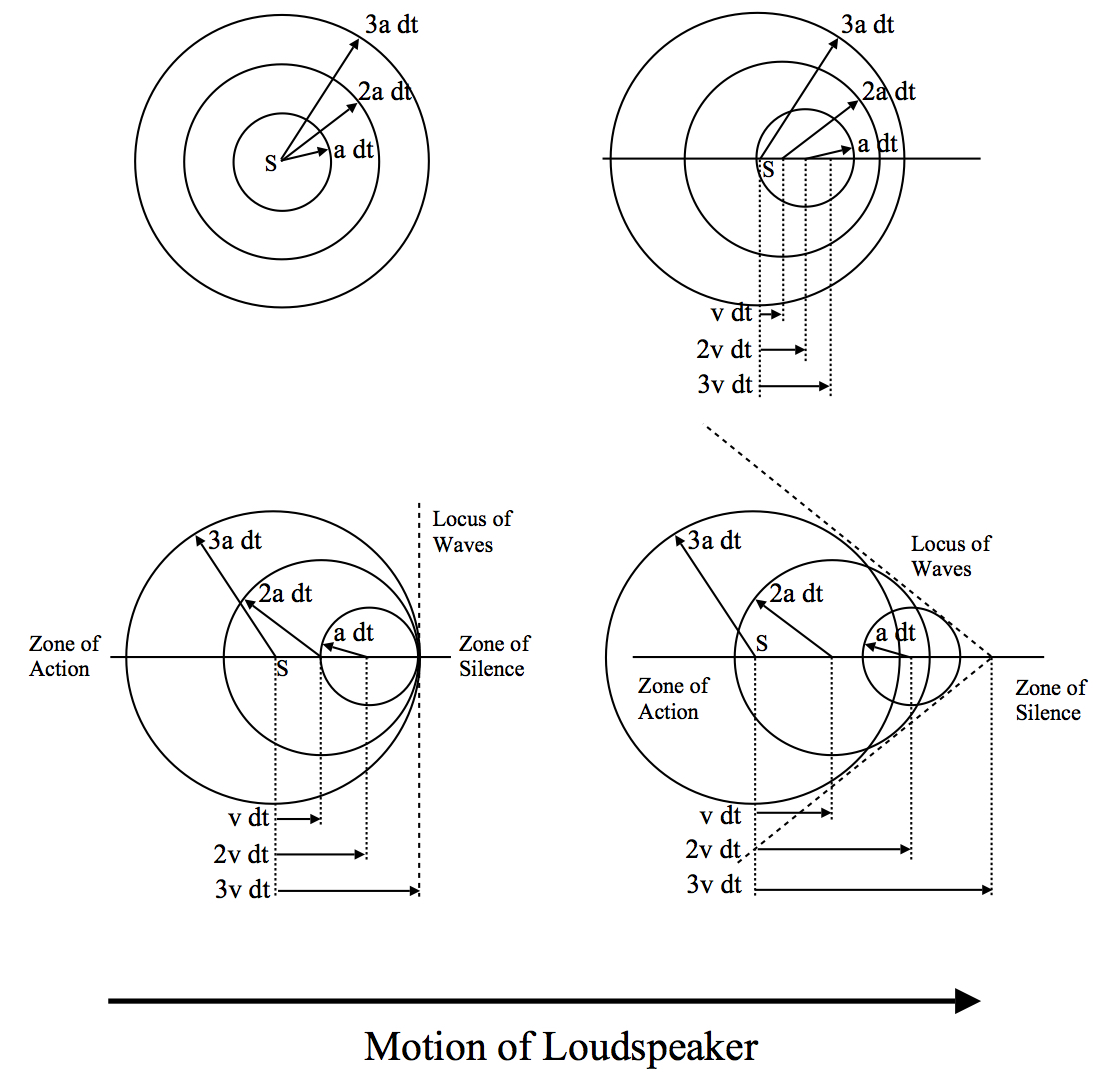

The limit that European aircraft designers ran into during the air speed competitions prior to WWII was the sound barrier. The problem of flying faster, or in fact approaching the speed of sound, is that shock waves start to form at certain locations over the aircraft fuselage. A shock wave is a thin front (about 10 micrometers thick) in which molecules are squashed together to such a degree that it is energetically favourable to induce a sudden increase in the fluid’s density, temperature and pressure. As an aircraft approaches the speed of sound, small pockets of sonic or supersonic flow develop on the top surface of the wing due to airflow acceleration over the curved upper skin. These supersonic pockets terminate in a shock wave, drastically slowing the airflow and increasing the fluid pressure. Even in the absence of shock waves the airflow runs into an adverse pressure gradient towards the trailing edge of the wing, slowing the airflow and threatening to separate the boundary layer from the wing. This condition drastically increases drag and reduces lift, which in the worst case can lead to aerodynamic stall. In the presence of a shock wave this scenario is exacerbated by the sudden increase in pressure and drop in airflow velocity across the shock wave. For this precise reason, commercial aircraft are limited to speeds of around Mach 0.87-0.88 as any further increase in speed would induce shock waves over the wings, increasing drag and requiring a disproportionate amount of additional engine power.

It was precisely this problem that aircraft designers ran into in the 1930s and 1940s. To make their airplanes approach the speed of sound they needed incredible amounts of extra power, which the internal combustion engines of the time could not provide. Quite fittingly this seemingly insurmountable speed limit was dubbed the sound barrier. It was not until the advent of refined jet engines after WWII that the sound barrier was broken. However, exceeding the sound barrier does not mean things get any easier. The ratios of upstream to downstream airflow speed and pressure across a shock wave are simple functions of the upstream Mach number (airspeed / local speed of sound). Unfortunately for aircraft designers, these ratios change with the square of the upstream Mach number, which means that the shock-induced drag becomes worse and worse the further the speed of sound is exceeded. This is why the Concorde needed such powerful engines and why its fuel costs were so exorbitant.
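The dependence on the square of the Mach number can be sketched with the textbook relations for a normal shock in a perfect gas. These relations are not given in the text; the sketch assumes a shock standing perpendicular to the flow, a constant ratio of specific heats of 1.4 for air, and illustrative function names.

```python
GAMMA_AIR = 1.4   # assumed ratio of specific heats for air (perfect gas)


def pressure_ratio(mach_upstream, gamma=GAMMA_AIR):
    """Static pressure ratio p2/p1 across a normal shock."""
    if mach_upstream < 1.0:
        raise ValueError("a normal shock requires supersonic upstream flow")
    return 1.0 + 2.0 * gamma / (gamma + 1.0) * (mach_upstream ** 2 - 1.0)


def velocity_ratio(mach_upstream, gamma=GAMMA_AIR):
    """Velocity ratio u1/u2 (upstream over downstream) across a normal shock."""
    if mach_upstream < 1.0:
        raise ValueError("a normal shock requires supersonic upstream flow")
    m_sq = mach_upstream ** 2
    return (gamma + 1.0) * m_sq / ((gamma - 1.0) * m_sq + 2.0)


for mach in (1.0, 1.5, 2.0, 3.0):
    print(f"M1 = {mach:.1f}: p2/p1 = {pressure_ratio(mach):.2f}, "
          f"u1/u2 = {velocity_ratio(mach):.2f}")
```

At Mach 1 both ratios are exactly one (no shock), and the pressure jump then grows with the square of the upstream Mach number, reaching 4.5 at Mach 2 under these assumptions.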

The North American X-15 rocket plane was one of NASA’s most daring experimental aircraft intended to test flight conditions at hypersonic speeds (Mach 5+) at the edge of space. Three X-15s made 199 flights from 1960-1968 and the data collected and knowledge gained directly impacted the design of the Space Shuttle. Initially designed for speeds up to Mach 6 and altitudes up to 250,000 feet, the X-15 ultimately reached a top speed of Mach 6.72 (more than one mile a second) and a maximum altitude of 354,200 feet (beyond the official demarcation line of space). As of this writing, the X-15 still holds the world record for the highest speed recorded by a manned aircraft. Given the awesome power required to overcome the induced drag of flying at these velocities, it is no surprise that the X-15 was not powered by a traditional turbojet engine but rather a full-fledged liquid-propellant rocket engine, gulping down 2,000 pounds of ethyl alcohol and liquid oxygen every 10 seconds.

The X-15 was dropped from a converted B-52 bomber and then made its way on one of two different experimental flight profiles. High-speed flights were conducted at an altitude of a typical commercial jetliner (below 100,000 feet) using conventional aerodynamic control surfaces. For high-altitude flights the X-15 initiated a steep climb at full throttle, followed by engine shut-down once the aircraft left Earth’s atmosphere. What followed was a ballistic coast, carrying the aircraft up to the peak of an arc and then plummeting back to Earth. Beyond Earth’s atmosphere the aerodynamic control surfaces of the X-15 were obviously useless, and so the X-15 relied on small rocket thrusters for control.

The hypersonic speeds beyond the conventional sound barrier discussed previously created a new problem for the X-15. In any medium, sound is transmitted by vibrations of the medium’s molecules. As an aircraft slices through the air, it disturbs the molecules around it, which results in a pressure wave as molecules bump into adjacent molecules, sequentially passing on the disturbance. Flying faster than the speed of sound means that the aircraft is moving faster than this pressure wave. Put another way, the air molecules are transmitting the information of the disturbance created by the aircraft via a pressure wave that travels at the speed of sound. While the aircraft is creating new disturbances further upstream, Nature can’t keep up with the aircraft. At hypersonic speeds the aircraft is literally smashing into the surrounding stationary air molecules, and the ensuing compression of the air around the aircraft skin leads to fluid temperatures that are above the melting point of steel. Hence, one of the major challenges of the X-15 was guaranteeing structural integrity at these incredibly high temperatures. As a result, the X-15 was constructed from Inconel X, a high-temperature nickel alloy, which is also used in the very hot turbine stages of a jet engine.
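A rough feel for these temperatures comes from the stagnation temperature of a perfect gas, $T_0 = T\,(1 + \tfrac{\gamma - 1}{2} M^2)$, which is the temperature the air reaches when brought to rest against the aircraft. This relation is a standard result rather than something stated in the text. The sketch assumes an ambient temperature of 220 K at altitude, a constant ratio of specific heats of 1.4 (which overestimates the temperature at hypersonic speeds, where real air no longer behaves as a perfect gas) and an approximate melting point for steel of 1,700 K.

```python
GAMMA_AIR = 1.4              # assumed constant ratio of specific heats for air
AMBIENT_TEMPERATURE_K = 220  # assumed static air temperature at altitude
STEEL_MELTING_POINT_K = 1700 # approximate figure, assumed for comparison only


def stagnation_temperature(static_temperature_k, mach, gamma=GAMMA_AIR):
    """Perfect-gas stagnation temperature: T0 = T * (1 + (gamma - 1) / 2 * M^2)."""
    return static_temperature_k * (1.0 + 0.5 * (gamma - 1.0) * mach ** 2)


# Mach 6.72 is the top speed of the X-15 quoted in the text
for mach in (1.0, 3.0, 6.72):
    t0 = stagnation_temperature(AMBIENT_TEMPERATURE_K, mach)
    verdict = "above" if t0 > STEEL_MELTING_POINT_K else "below"
    print(f"Mach {mach:.2f}: T0 = {t0:.0f} K ({verdict} the assumed melting point of steel)")
```

Under these simplifying assumptions the stagnation temperature at the X-15's top speed comes out at roughly 2,200 K, which is consistent with the need for a high-temperature nickel alloy.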

The wedge tail visible at the back of the aircraft was also specifically required to guarantee attitude stability of the aircraft at hypersonic speeds. At lower speeds this thick wedge created considerable amounts of drag, in fact as much as some individual fighter aircraft alone. The area of the tail wedge was around 60% of the entire wing area and additional side panels could be extended out to further increase the overall surface area.

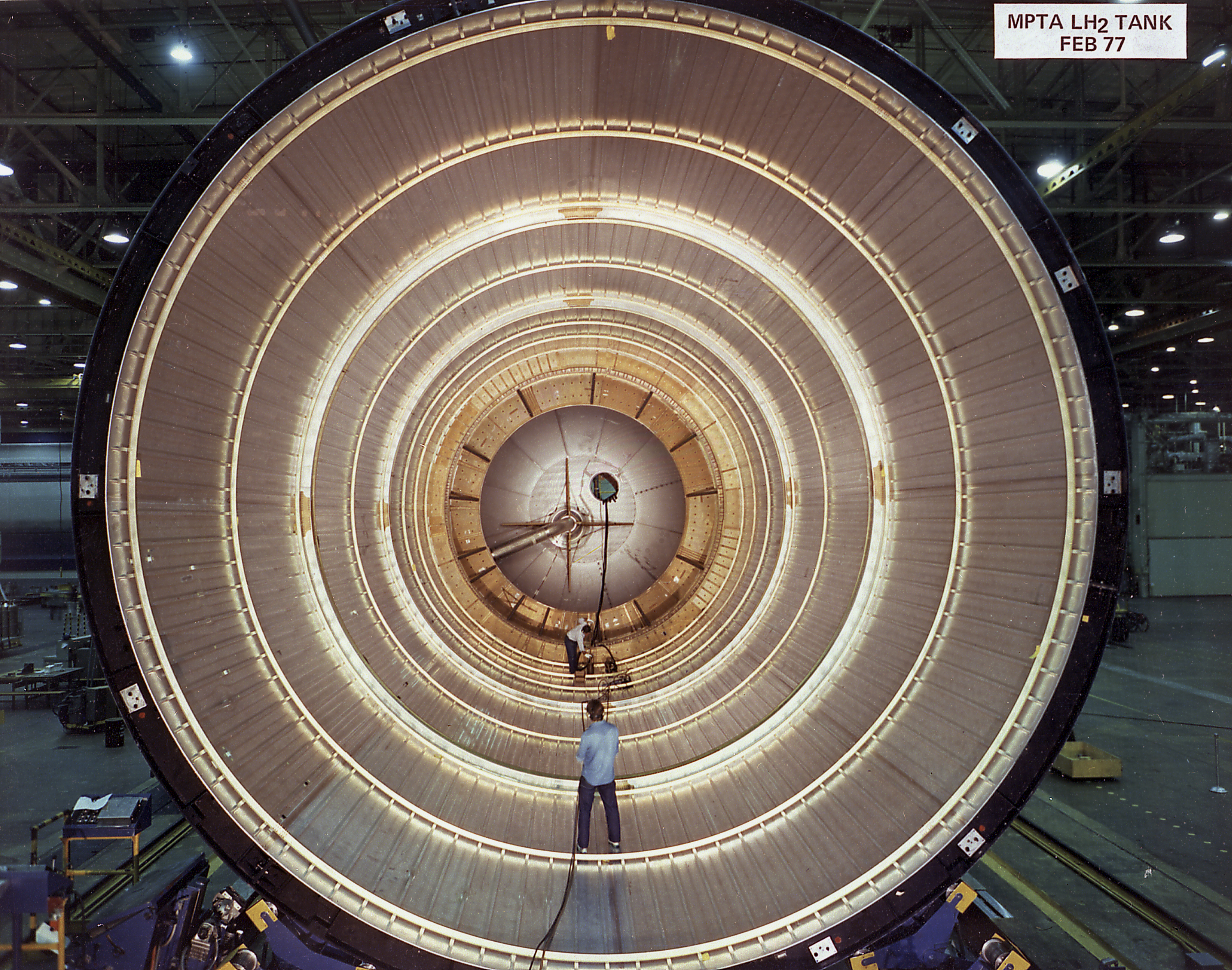

12 April 1981 marked a new era in manned spaceflight: Space Shuttle Columbia lifted off for the first time from Cape Canaveral. The Shuttle capped an incredibly fruitful period in aerospace engineering development. The ground work laid by the original Wright Flyer, the Spitfire, the X-1 and the X-15 is all part of the technological arc that led to the Shuttle. The fundamentals didn’t change but their orders of magnitude did.

“Like bolting a butterfly onto a bullet” — Story Musgrave, Columbia astronaut, 1996

Story Musgrave’s description of the Space Shuttle is not far off the mark. On the launch pad the Shuttle sat on two solid-rocket boosters producing 37 million horsepower, accelerating the Shuttle beyond the speed of sound in about 30 seconds. Eight minutes and 500,000 gallons of fuel later the Shuttle was travelling at 17,500 mph at the edge of space. The Space Shuttle was not only powerful but possessed a grace that the Wright brothers would have appreciated. After smashing through the atmosphere upon reentry at Mach 28 (8 km/s) the piloting astronaut had to slow the Shuttle down to 200 mph via a series of gliding twists and turns, using the surrounding air as an aerodynamic brake.

The ultimate mission of the Shuttle was to serve as a cost-effective means of travelling to space for professional astronauts and civilians. That vision never came to fruition, partly due to the high maintenance costs between flights and partly due to the Challenger and Columbia disasters, which shattered all hopes that space travel would become routine.

Perhaps the Space Shuttle is one of humanity’s greatest inventions because it reminds us that for all its power, grace and genius it is still the brainchild of fallible men.

Edits:

A previous version of this article incorrectly stated that the Space Shuttle featured three solid rocket boosters (SRBs). Of course, the Space Shuttle only featured two.

After Germany and its allies lost WWI, motorised flying became strictly prohibited under the Treaty of Versailles. Creativity often springs from constraints, and so, paradoxically, the ban imposed by the Allies encouraged precisely what they had actually wanted to thwart: the growth of the German aviation industry. As all military flying was prohibited under the Treaty, the innovation in German aviation throughout the 1920s took an unlikely path via unmotorised gliders built by student associations at universities.

Before and during WWI, Germany had been one of the leading countries in terms of the theoretical development of aviation and the actual construction of novel aircraft. The famous aerodynamicist Ludwig Prandtl and his colleagues developed the theory of the boundary layer which later led to wing theory. The close relationship of research laboratories and industrial magnates, like Fokker and Junkers, meant that many of the novel ideas of the day were tested on actual aircraft during WWI. Part of the reason why Baron von Richthofen, the Red Baron, became the most decorated fighter pilot of his day, was because his equipment was more technologically advanced than that of his opponents; a direct result of a thicker cambered wing that Prandtl had tested in his wind tunnels.

Given this heritage, it comes as no surprise that German students and professors soon found a way around the ban imposed at the Treaty of Versailles. For example, a number of enthusiastic students from the University of Aachen formed the Flugwissenschaftliche Vereinigung Aachen (FVA, Aachen Association for Aeronautical Sciences). These students loved the art and science of flying and intended to continue their passion despite the ban. Theodore von Kármán, of vortex street and Tacoma Narrows bridge fame, was a professor at the Technical University of Aachen at the time and remembers the episode as follows:

One day an FVA member approached me with a bright idea.

“Herr Professor,” he said. “We would like your help. We wish to build a glider.”

“A glider? Why do you wish to build a glider?”

“For sport,” the student said.

I thought it over. Constructing a glider would be more than sport. It would be an interesting and useful aerodynamic project, quite in keeping with German traditions, but in view of postwar turmoil it could be politically quite risky … On the other hand, motorised flight was specifically outlawed in the Treaty of Versailles, and sport flying was not military flying. So rationalizing in this way, I told the boys to go ahead.

What von Kármán was not aware of at the time was that he was helping to lay the foundation for an important part of the German air force during WWII. The lessons learned in improving glider design would be directly applicable to military aeronautics later on.

Glider development in itself is a topic worth studying. The French sailor Le Bris constructed a functional glider in 1870, but the most famous gliders of the 19th century were all built by Otto Lilienthal. Lilienthal became the first aviator to realise the superiority of curved wings over flat surfaces for providing lift. Lilienthal conducted some rudimentary wing testing to tabulate the air pressure and lift for different wing sections; data which inspired, but was then superseded by the Wright brothers’ experiments using their own wind tunnel. In the USA, Octave Chanute is famous for his work on gliders and for many years he served as a direct mentor to the Wright brothers, who themselves built a number of successful gliders to optimise wing shapes and control mechanisms.

After the first successful motor-powered flight in 1903, interest in gliders largely subsided, but was then revived by collegiate sporting competitions organised by German universities. Oskar Ursinus, the editor of the aeronautics journal Flugsport (Sport Flying), organised an intercollegiate gliding competition in the Rhön mountains, a spot renowned for its strong upwinds. So work began behind closed doors in many university labs and sheds. Von Kármán’s school, the University of Aachen, built a 6 m (20 foot) wing-span glider called the Black Devil, which was the first cantilever monoplane glider to be built at the time. As a result of the cantilever wing construction, the design abandoned any form of wire bracing to stabilise the wing and relied purely on internal wing bracing, as had been pioneered by Junkers in 1915. In this regard, the glider was already more advanced than most of the fighters in WWI that were based on the classical biplane or even triplane design held together by wires and struts.

By early 1920 the Black Devil was ready to compete. At this point the students faced a new logistical challenge: how were they going to transport the glider 150 miles south through three military zones (British, French and American), when shipping aircraft components was strictly forbidden?

As reckless students they of course operated in secret. The Black Devil was dismantled into its components, packed into a tarpaulin freight car and then driven through the night. Of this episode von Kármán recounts that,

On one occasion during the journey we almost lost the Black Devil to a contingent of Allied troops. Fortunately the engineer and student guard received advance notice of the movement, disengaged the car holding the glider, and silently transferred it to a dark siding until the troops rode past.

Overall, the trip took six hours and the teams from Stuttgart, Göttingen and Berlin were already in attendance.

Lacking any motorised aircraft to launch the gliders, two rubber ropes were attached to the nose of the glider and then used as a catapult to launch the glider off the edge of a hill. Once in the air, it was the role of the pilot to manoeuvre the plane purely by shifting his/her body weight to balance the glider in the wind. In 1920, Aachen managed to win the competition with a flight time of 2 minutes and 20 seconds. Not a new revolution in glider design, but proving the aerodynamics of their concept plane nevertheless. A year later, an improved version of the Black Devil, the Blue Mouse, flew for 13 minutes, breaking the long-held record by Orville Wright of 9 minutes and 45 seconds. Some great videos of the early flights at the Wasserkuppe in the Rhön mountains exist to this day.

In the following years, von Kármán and his scientific mentor and aerodynamics pioneer Ludwig Prandtl gave a series of seminars on the aerodynamics of gliding, which were attended by students and flying enthusiasts from all over the country. Among the attendees was Willy Messerschmitt, an engineering student at the time, whose fighters and bombers later formed the core of the Nazi air force during WWII. Even established industrialists, German royalty and other university professors attended the talks. As a result of this broad and democratic dissemination of knowledge and the collaborative spirit at the time, innovations began to sprout quickly. One of the main innovations was efficiently using thermal updrafts in combination with topographical updrafts to extend the flying time. In 1922, a collaborative design team from the University of Hannover built the Hannover H 1 Vampyr glider, which first extended the gliding record to 3 hours and then to 6 hours in 1923. The Vampyr was one of the first heavier-than-air aircraft to use the stressed-skin “monocoque” design philosophy and is the forerunner of all modern gliders.

The collegiate sporting competitions continued until the early 1930’s. The Nazis soon realised that the technical knowledge gained in these sporting competitions could be used in rebuilding the German air force. Due to the lack of a power unit and limited control surfaces, the student engineers and industrialists had been forced to design efficient lightweight structures and wings that provided the best compromise in terms of lift, drag and attitude control. Most importantly, the gliders proved the superiority of single long cantilevered wings over the limited double- and triple-wing configuration used during WWI. The internal structure of the wing allowed for much lighter construction as the size of the aircraft grew, the parasitic source of drag induced by the wires and struts was eliminated, and the lift-to-drag ratio was dramatically improved by the long glider wings. Tragically, some pioneers took these concepts too far and lost their lives as a result. While the lift efficiency of a wing is increased as the aspect ratio (span-to-chord ratio) increases, so do the bending stresses at the root of the wing due to lift. As a result, there were a number of incidents where insufficiently stiffened wings literally twisted off the fuselage.

On the importance of glider developments von Kármán reflects that,

I have always thought that the Allies were shortsighted when they banned motor flying in Germany … Experiments with gliders in sport sharpened German thinking in aerodynamics, structural design, and meteorology … In structural design gliders showed how best to distribute weight in a light structure and revealed new facts about vibration. In meteorology we learned from gliders how planes could use the jet stream to increase speed; we uncovered the dangers of hidden turbulence in the air, and in general opened up the study of meteorological influences on aviation. It is interesting to note that glider flying did more to advance the science of aviation than most of the motorised flying in World War I.

We can only speculate how von Kármán must have felt after leaving Germany in the 1930’s, partly due to his Jewish heritage, and witnessing from afar how the machines he had helped to develop wreaked havoc in Europe during WWII.

References

The quotes in this post are taken from von Kármán’s excellent autobiography The Wind and Beyond: Theodore von Karman, Pioneer in Aviation and Pathfinder in Space by Theodore von Kármán and Lee Edson.

On November 8, 1940 newspapers across America opened with the headline “TACOMA NARROWS BRIDGE COLLAPSES”. The headline caught the eye of a prominent engineering professor who, from reading the news story, intuitively realised that a specific aerodynamical phenomenon must have led to the collapse. He was correct, and became publicly famous for what is now known as the von Kármán vortex street.

Theodore von Kármán was one of the most pre-eminent aeronautical engineers of the 20th century. Born and raised in Budapest, Hungary he was a member of a club of 20th century Hungarian scientists, including mathematician and computer scientist John von Neumann and nuclear physicist Edward Teller, who made groundbreaking strides in their respective fields. Von Kármán was a PhD student of Ludwig Prandtl at the University of Göttingen, the leading aerodynamics institute in the world at the time and home to many great German scientists and mathematicians.

Although brilliant at mathematics from an early age, von Kármán preferred to boil complex equations down to their essentials, attempting to find simple solutions that would provide the most intuitive physical insight. At the same time, he was a big proponent of using practical experiments to tease out novel phenomena that could then be explained using straightforward mathematics. During WWI he took a leave of absence from his role as professor of aeronautics at the University of Aachen to fulfil his military duties, overseeing the operations of a military research facility in Austria. In this role he developed a helicopter that was to replace hot-air balloons for surveillance of battlefields. Due to his combined expertise in aerodynamics and structural design he became a consultant to the Junkers aircraft and Zeppelin airship companies, helping to design the first all-metal cantilevered wing aircraft, the Junkers J 1, and the Zeppelin Los Angeles.

Furthermore, von Kármán developed an unusual expertise in building wind tunnels; a suitable one did not exist when he first started his professorship in Aachen and was desperately needed for his research. As a result, he became a sought-after expert in designing and overseeing the construction of wind tunnels in the USA and Japan. Von Kármán’s broad skill set and unique combination of theoretical and experimental expertise soon placed him on the radar of physicist Robert Millikan who was setting up a new technical university in Pasadena, California, the California Institute of Technology. Millikan believed that the year-round temperate climate would attract all of the major aircraft companies of the burgeoning aerospace industry to Southern California, and he hired von Kármán to head CalTech’s aerospace institute. Millikan’s wager paid off when companies such as Northrop, Lockheed, Douglas and Consolidated Aircraft (later Convair) all settled in the greater Los Angeles area. Von Kármán thus became a consultant on iconic aircraft such as the Douglas DC-3, the Northrop Flying Wing, and later the rockets developed by NACA (now NASA).

Von Kármán is renowned for many concepts in structural mechanics and aerodynamics, e.g. the non-linear behaviour of cylinder buckling and a mathematical theory describing turbulent boundary layers. His most well-known piece of work, the von Kármán vortex street, tragically, reached public notoriety after it explained the collapse of a suspension bridge over the Puget Sound in 1940.

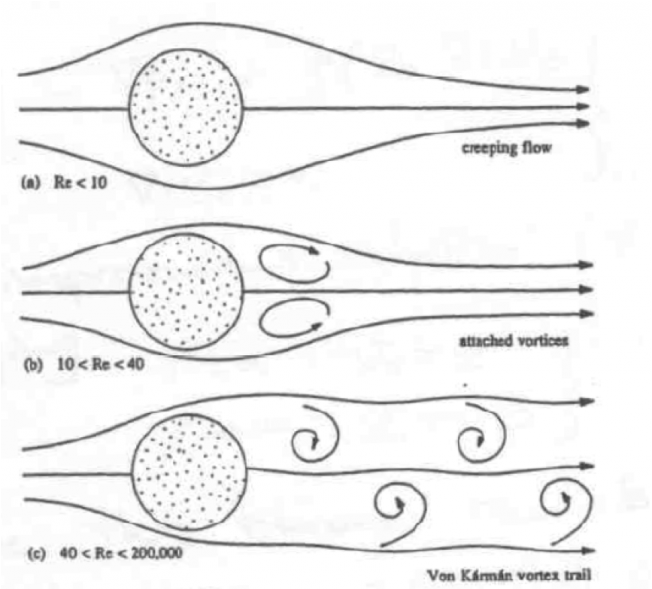

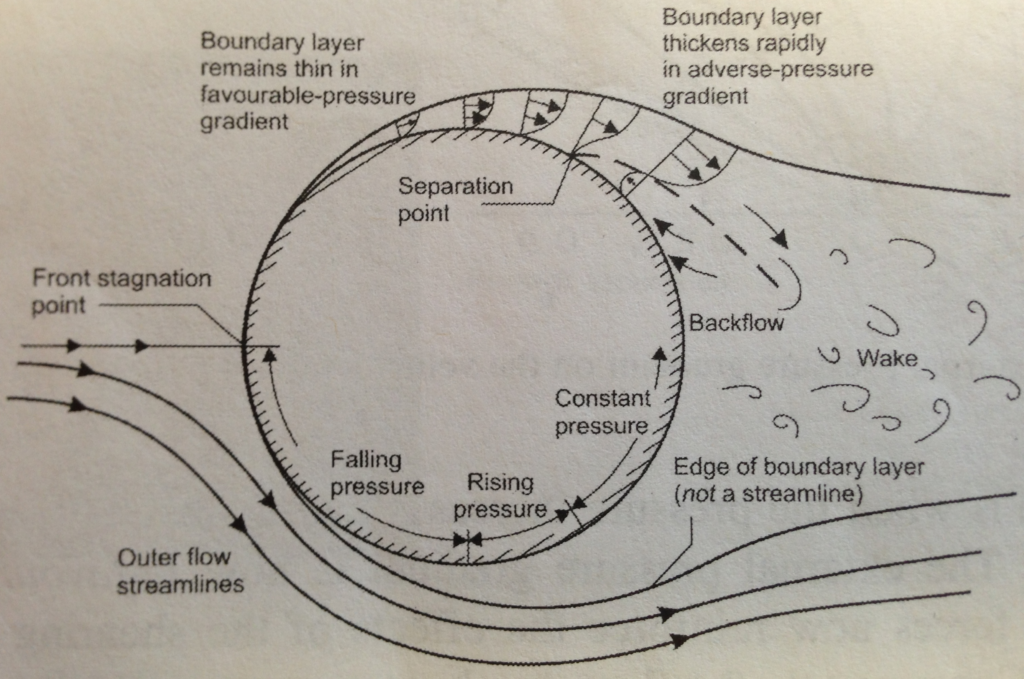

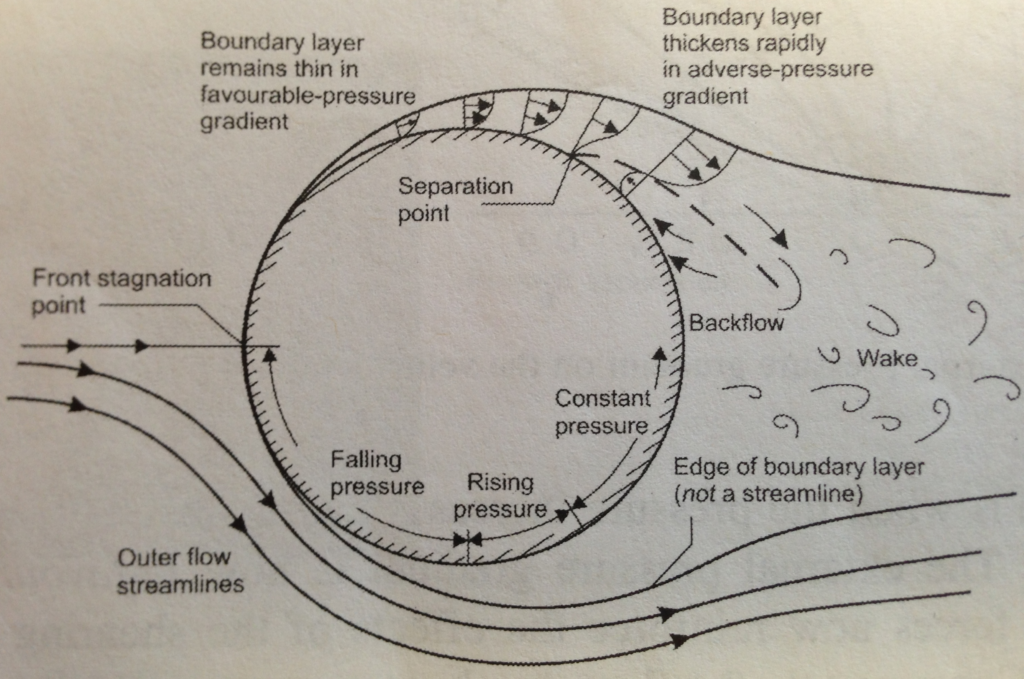

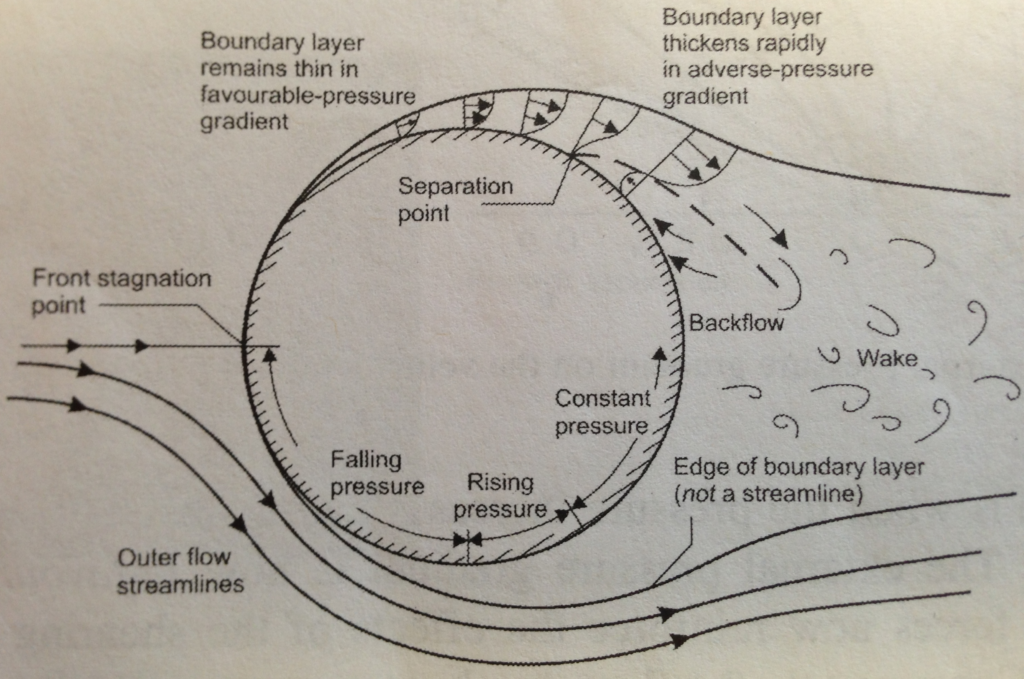

The von Kármán vortex street is a direct result of boundary layer separation over bluff bodies. Immersed in fluid flow, any body of finite thickness will force the surrounding fluid to flow in curved streamlines around it. Towards the leading edge this causes the flow to speed up in order to balance the centripetal forces created by the curved streamlines. This creates a region of falling fluid pressure, also called a favourable pressure gradient. Further along the body, where the streamlines straighten out, the opposite occurs and the fluid flows into a region of rising pressure, an adverse pressure gradient. The increasing pressure gradient pushes against the flow and causes the slowest parts of the flow, those immediately adjacent to the surface, to reverse direction. At this point the boundary layer has separated from the body and the combination of flow in two directions induces a wake of turbulent vortices (see diagram below).

Boundary layer separation over cylinder

The type of flow in the wake depends on the Reynolds number of the flow impinging on the body,

where is the density of the fluid,

is the impinging free stream flow velocity,

is a characteristic length of the body, e.g. the diameter for a sphere or cylinder, and

is the viscosity or inherent stickiness of the fluid. The Reynolds number essentially takes the ratio of inertial forces

to viscous forces

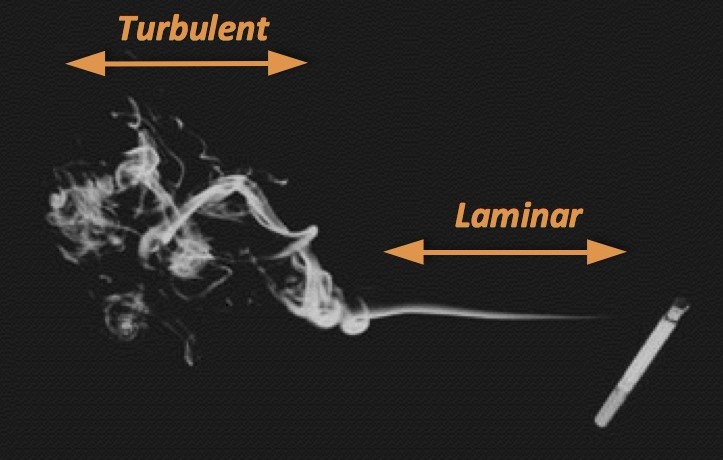

, and captures the extent of laminar flow (layered flow with little mixing) and turbulent flow (flow with strong mixing via vortices).

For example, consider the flow past an infinitely long cylinder protruding out of your screen (as shown in the figure above). For very low Reynolds number flow (Re < 10) the inertial forces are negligible and the streamlines connect smoothly behind the cylinder. As the Reynolds number is increased into the range of Re = 10-40 (by, for example, increasing the free stream velocity $U$), the boundary layer separates symmetrically from either side of the cylinder, and two eddies form that rotate in opposite directions. These eddies remain fixed and do not “peel away” from the cylinder. Behind the vortices the flow from either side rejoins and the size of the wake is limited to a small region behind the cylinder. As the Reynolds number is further increased into the region Re > 40, the symmetric eddy formation is broken and two asymmetric vortices form. Such an instability is known as a symmetry-breaking bifurcation in stability theory and the ensuing asymmetric vortices undergo periodic oscillations by constantly interchanging their position with respect to the cylinder. At a specific critical value of Reynolds number (Re ~ 100) the eddies start to peel away, alternately from either side of the cylinder, and are then washed downstream. As visualised below, this can produce a rather pretty effect…
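The regimes described above can be turned into a small lookup. The following is only a sketch: the thresholds (10, 40 and roughly 100) are the approximate values quoted in the text, the function names are made up for illustration, and the air properties and the 1 cm cylinder diameter are assumed values.

```python
def reynolds_number(density, velocity, length, viscosity):
    """Re = rho * U * d / mu, with all quantities in SI units."""
    return density * velocity * length / viscosity


def cylinder_wake_regime(re):
    """Classify the wake behind a cylinder using the approximate
    thresholds quoted in the text (not sharp physical limits)."""
    if re < 10:
        return "streamlines close smoothly behind the cylinder"
    if re <= 40:
        return "two fixed, counter-rotating eddies"
    if re < 100:
        return "asymmetric, oscillating vortices"
    return "alternate vortex shedding (von Karman vortex street)"


# Assumed illustrative values: air near room temperature, 1 cm cylinder.
RHO_AIR = 1.2      # kg/m^3 (assumption)
MU_AIR = 1.8e-5    # Pa s (assumption)
DIAMETER = 0.01    # m (assumption)

for speed in (0.005, 0.03, 0.1, 1.0):
    re = reynolds_number(RHO_AIR, speed, DIAMETER, MU_AIR)
    print(f"U = {speed} m/s -> Re = {re:.0f}: {cylinder_wake_regime(re)}")
```

Because only the combination $\rho U d / \mu$ matters, the same regime can be reached by changing the speed, the cylinder diameter or the fluid.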

This condition of alternately shedding vortices from the sides of the cylinder is known as the von Kármán vortex street. At a certain distance from the cylinder the behaviour obviously dissipates, but close to the cylinder the oscillatory shedding can have profound aeroelastic effects on the structure. Aeroelasticity is the study of how fluid flow and structures interact dynamically. For example, there are two very important locations on an aircraft wing:

– the centre of pressure, i.e. an idealised point of the wing where the lift can be assumed to act as a point load

– the shear centre, i.e. the point of any structural cross-section through which a point load must act to cause pure bending and no twisting

The problem is that the centre of pressure and shear centre are very rarely coincident, and so the aerodynamic lift forces will typically not only bend a wing but also cause it to twist. Twisting in a manner that forces the leading edge upwards increases the angle of attack and thereby increases the lift force. This increased lift force produces more twisting, which produces more lift, and so on. This phenomenon is known as divergence and can cause a wing to twist off the fuselage.

A different, yet equally pernicious, aeroelastic instability can occur as a result of the von Kármán vortex street. Each time an eddy is shed from the cylinder, the symmetry of the flow pattern is broken and a difference in pressure is induced between the two sides of the cylinder. The vortex shedding therefore produces alternating sideways forces that can cause sideways oscillations. If the frequency of these oscillations is the same as the natural frequency of the cylinder, then the cylinder will undergo resonant behaviour and start vibrating uncontrollably.
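To put rough numbers on this resonance condition, the sketch below estimates the shedding frequency and compares it with a natural frequency. It relies on something the text does not state: the empirical Strouhal relation $f = St \cdot U / d$ with $St \approx 0.2$, a commonly quoted value for circular cylinders. The cylinder diameter, natural frequency and tolerance are hypothetical.

```python
def shedding_frequency(velocity, diameter, strouhal=0.2):
    """Vortex-shedding frequency in Hz from f = St * U / d.

    St = 0.2 is an assumed, commonly quoted value for circular cylinders.
    """
    return strouhal * velocity / diameter


def is_near_resonance(f_shed, f_natural, tolerance=0.1):
    """True if the shedding frequency lies within a fractional band
    (an arbitrary 10 % by default) of the natural frequency."""
    return abs(f_shed - f_natural) <= tolerance * f_natural


# Hypothetical structure: 0.1 m diameter cylinder, 10 Hz natural frequency.
DIAMETER = 0.1      # m (assumption)
F_NATURAL = 10.0    # Hz (assumption)

for wind_speed in (1.0, 3.0, 5.0, 7.0):
    f = shedding_frequency(wind_speed, DIAMETER)
    flag = "RESONANCE RISK" if is_near_resonance(f, F_NATURAL) else "ok"
    print(f"U = {wind_speed} m/s -> f_shed = {f:.1f} Hz ({flag})")
```

The point of the sketch is that the danger is tied to a particular band of wind speeds rather than to the strongest wind.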

So, how does this relate to the fated Tacoma Narrows bridge?

Upon completion, the first Tacoma Narrows suspension bridge, costing $6.4 million and the third longest bridge of its kind, was described as the fanciest single span bridge in the world. With its narrow towers and thin stiffening trusses the bridge was valued for its grace and slenderness. On the morning of November 7, 1940, only four months into its service, the bridge broke apart in a light gale and crashed into the Puget Sound 190 feet below. From its inaugural day on July 1, 1940 something seemed not quite right. The span of the bridge would start to undulate up and down in light breezes, securing the bridge the nickname “Galloping Gertie”. Engineers tried to stabilise the bridge using heavy steel cables fixed to steel blocks on either side of the span. But to no avail, the galloping continued.

On the morning of the collapse, Gertie was bouncing around in its usual manner. As the winds started to intensify to 60 km/h (40 mph) the rhythmic up and down motion of the bridge suddenly morphed into a violent twisting motion spiralling along the deck. At this point the authorities closed the bridge to any further traffic but the bridge continued to writhe like a corkscrew. The twisting became so violent that the sides of the bridge deck separated by 9 m (28 feet) with the bridge deck oriented at 45° to the horizontal. For half an hour the bridge resisted these oscillatory stresses until at one point the deck of the bridge buckled, girders and steel cables broke loose and the bridge collapsed into the Puget Sound.

After the collapse, the Governor of Washington, Clarence Martin, announced that the bridge had been built correctly and that another one would be built using the same basic design. At this point von Kármán started to feel uneasy and he asked technicians at CalTech to build a small rubber replica of the bridge for him. Von Kármán then tested the bridge at home using a small electric fan. As he varied the speed of the fan, the model started to oscillate, and these oscillations grew greater as the rhythm of the air movement induced by the fan was synchronised with the oscillations.

Indeed, Galloping Gertie had been constructed using cylindrical cable stays and these shed vortices in a periodic manner when a cross-wind reached a specific intensity. Because the bridge was also built using a solid sidewall, the vortices impinged immediately onto a solid section of the bridge, inducing resonant vibrations in the bridge structure.

Von Kármán then contacted the governor and wrote a short piece for the Engineering News Record describing his findings. Later, von Kármán served on the committee that investigated the cause of the collapse and to his surprise the civil engineers were not at all enamoured with his explanation. In all of the engineers’ training and previous engineering experience, the design of bridges had been governed by “static forces” of gravity and constant maximum wind load. The effects of “dynamic loads”, which caused bridges to swing from side to side, had been observed but considered to be negligible. Such design flaws, stemming from ignorance rather than the improper application of design principles, are the most harrowing as the mode of failure is entirely unaccounted for. Fortunately, the meetings adjourned with agreements in place to test the new Tacoma Narrows bridge in a wind tunnel at CalTech, a first at the time. As a result of this work, the solid sidewall of the bridge deck was perforated with holes to prevent vortex shedding, and a number of slots were inserted into the bridge deck to prevent differences in pressure between the top and bottom surfaces of the deck.

The one person who did suffer irreparable damage to his reputation was the insurance agent that initially underwrote the $6 million insurance policy for the state of Washington. Figuring that something as big as the Tacoma Narrows bridge would never collapse, he pocketed the insurance premium himself without actually setting up a policy, and ended up in jail…

If you would like to learn more about Theodore von Kármán’s life, I highly recommend his autobiography, which I have reviewed here.

The material we covered in the last two posts (skin friction and pressure drag) allows us to consider a fun little problem:

How quickly do the small bubbles of gas rise in a pint of beer?

To answer this question we will use the concept of aerodynamic drag introduced in the last two posts – namely,

- skin friction drag – frictional forces acting tangential to the flow that arise because of the inherent stickiness (viscosity) of the fluid.

- pressure drag – the difference between the fluid pressure upstream and downstream of the body, which typically occurs because of boundary layer separation and the induced turbulent wake behind the body.

The most important thing to remember is that both skin friction drag and pressure drag are influenced by the shape of the boundary layer.

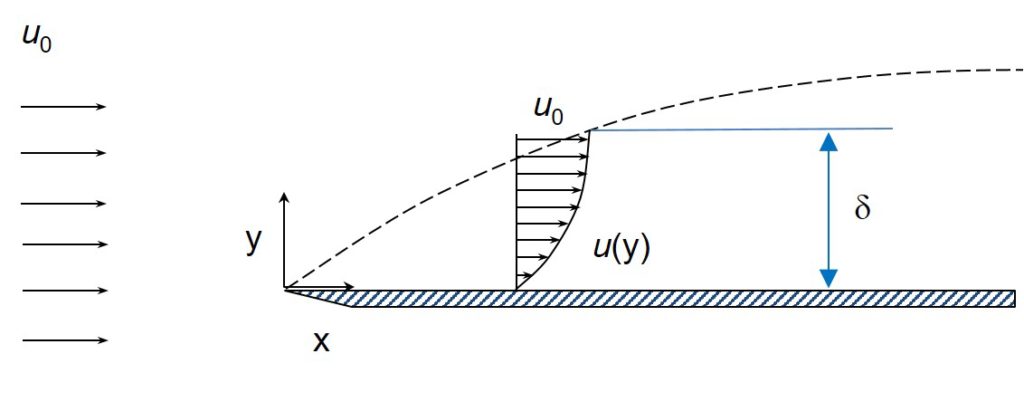

What is this boundary layer?

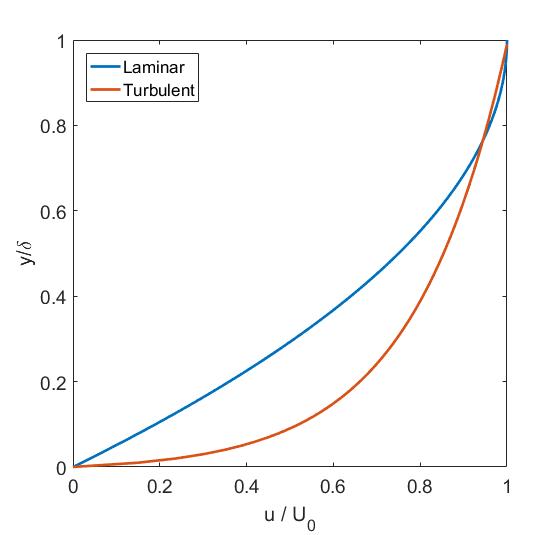

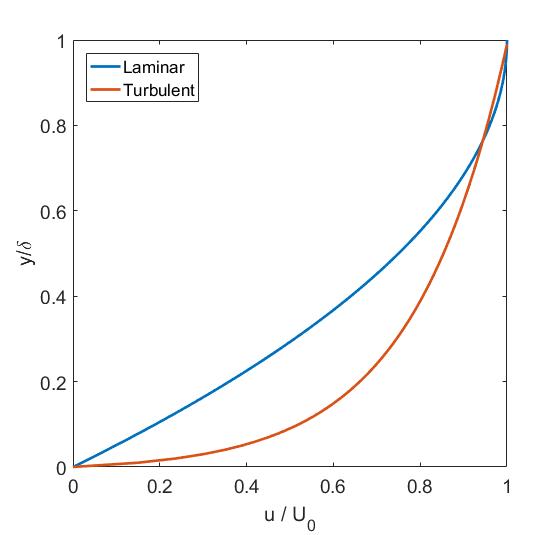

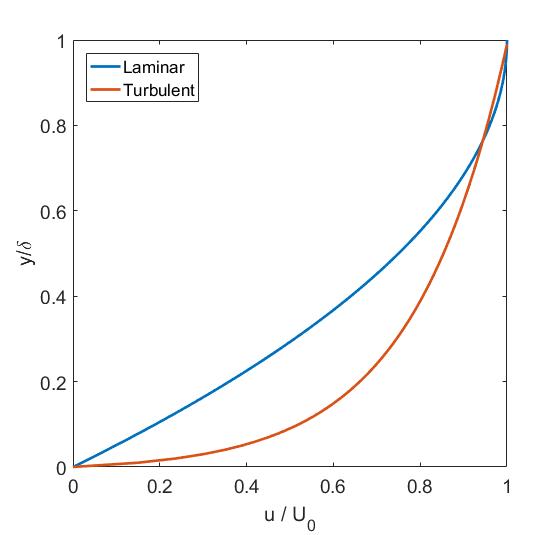

As a fluid flows over a body it sticks to the body’s external surface due to the inherent viscosity of the fluid, and therefore a thin region exists close to the surface where the velocity of the fluid increases from zero to the mainstream velocity. This thin region of the flow is known as the boundary layer and the velocity profile in this region is U-shaped as shown in the figure below.

Velocity profile of laminar versus turbulent boundary layer

As shown in the figure above, the flow in the boundary layer can either be laminar, meaning it flows in stratified layers with no to very little mixing between the layers, or turbulent, meaning there is significant mixing of the flow perpendicular to the surface. Due to the higher degree of momentum transfer between fluid layers in a turbulent boundary layer, the velocity of the flow increases more quickly away from the surface than in a laminar boundary layer. The magnitude of skin friction drag at the surface of the body (y = 0 in the figure above) is given by

$$\tau_w = \mu \left. \frac{\mathrm{d}u}{\mathrm{d}y} \right|_{y=0}$$

where $\mu$ is the viscosity of the fluid and $\mathrm{d}u/\mathrm{d}y$ is the so-called velocity gradient, or how quickly the fluid increases its velocity as we move away from the surface. As this velocity gradient at the surface (y = 0 in the figure above) is much steeper for turbulent flow, this type of flow leads to more skin friction drag than laminar flow does.

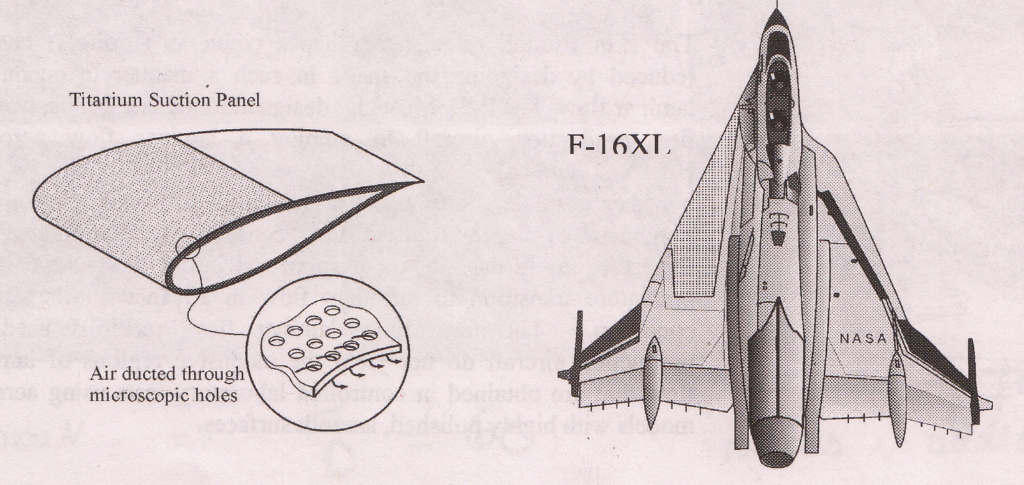

Skin friction drag is the dominant form of drag for objects whose surface area is aligned with the flow direction. Such shapes are called streamlined and include aircraft wings at cruise, fish and low-drag sports cars. For these streamlined bodies it is beneficial to maintain laminar flow over as much of the body as possible in order to minimise aerodynamic drag.

Conversely, pressure drag is the difference between the fluid pressure in front of (upstream) and behind (downstream) the moving body. Right at the tip of any moving body, the fluid comes to a standstill relative to the body (i.e. it sticks to the leading point) and as a result obtains its stagnation pressure.

The stagnation pressure is the pressure of a fluid at rest and, for thermodynamic reasons, this is the highest possible pressure the fluid can obtain under a set of pre-defined conditions. This is why from Bernoulli’s law we know that fluid pressure decreases/increases as the fluid accelerates/decelerates, respectively.

At the trailing edge of the body (i.e. immediately behind it) the pressure of the fluid is naturally lower than this stagnation pressure because the fluid is either flowing smoothly at some finite velocity, hence lower pressure, or is greatly disturbed by large-scale eddies. These large-scale eddies occur due to a phenomenon called boundary layer separation.

Boundary layer separation over a cylinder

Why does the boundary layer separate?

Any body of finite thickness will force the fluid to flow in curved streamlines around it. Towards the leading edge this causes the flow to speed up in order to balance the centripetal forces created by the curved streamlines. This creates a region of falling fluid pressure, also called a favourable pressure gradient. Further along the body, the streamlines straighten out and the opposite phenomenon occurs – the fluid flows into a region of rising pressure, also known as an adverse pressure gradient. This adverse pressure gradient decelerates the flow and causes the slowest parts of the boundary layer, i.e. those parts closest to the surface, to reverse direction. At this point, the boundary layer “separates” from the body and the combination of flow in two directions induces a wake of turbulent vortices; in essence a region of low-pressure fluid.

The reason why this is detrimental for drag is because we now have a lower pressure region behind the body than in front of it, and this pressure difference results in a force that pushes against the direction of travel. The magnitude of this drag force greatly depends on the location of the boundary layer separation point. The further upstream this point, the higher the pressure drag.

To minimise pressure drag it is beneficial to have a turbulent boundary layer. This is because the higher velocity gradient at the external surface of the body in a turbulent boundary layer means that the fluid has more momentum to “fight” the adverse pressure gradient. This extra momentum pushes the point of separation further downstream. Pressure drag is typically the dominant type of drag for bluff bodies, such as golf balls, whose surface area is predominantly perpendicular to the flow direction.

So to summarise: laminar flow minimises skin-friction drag, but turbulent flow minimises pressure drag.

Given this trade-off between skin friction drag and pressure drag, we are of course interested in the total amount of drag, known as the profile drag. The propensity of a specific shape to induce profile drag is captured in the dimensionless drag coefficient

$$C_D = \frac{D}{\frac{1}{2} \rho U^2 A}$$

where $D$ is the total drag force acting on the body, $\rho$ is the density of the fluid, $U$ is the undisturbed mainstream velocity of the flow, and $A$ represents a characteristic area of the body. For bluff bodies $A$ is typically the frontal area of the body, whereas for aerofoils and hydrofoils $A$ is the product of wing span and mean chord. For a flat plate aligned with the flow direction, $A$ is the total surface area of both sides of the plate.

The denominator of the drag coefficient represents the dynamic pressure of the fluid ($\frac{1}{2} \rho U^2$) multiplied by the specific area ($A$) and is therefore equal to a force. As a result, the drag coefficient is the ratio of two forces, and because the units of the denominator and numerator cancel, we call this a dimensionless number that remains constant for two dynamically similar flows. This means $C_D$ is independent of body size, and depends only on its shape. As discussed in the wind tunnel post, this mathematical property is why we can create smaller scaled versions of real aircraft and test them in a wind tunnel.
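As a rough illustration of how the drag coefficient is used in practice, the sketch below extracts $C_D$ from a made-up wind tunnel measurement and reuses it for a larger body of the same shape. All numbers are hypothetical, and the scaling step only holds if the two flows are dynamically similar, which is simply assumed here.

```python
def drag_coefficient(drag_force, density, velocity, area):
    """C_D = D / (0.5 * rho * U^2 * A)."""
    return drag_force / (0.5 * density * velocity**2 * area)


def drag_force(cd, density, velocity, area):
    """Invert the definition: D = C_D * 0.5 * rho * U^2 * A."""
    return cd * 0.5 * density * velocity**2 * area


# Hypothetical wind tunnel measurement on a scale model (assumed values).
RHO_AIR = 1.2          # kg/m^3
TUNNEL_SPEED = 20.0    # m/s
MODEL_AREA = 0.01      # m^2 reference area of the model
MEASURED_DRAG = 2.4    # N

cd = drag_coefficient(MEASURED_DRAG, RHO_AIR, TUNNEL_SPEED, MODEL_AREA)
print(f"C_D of the model: {cd:.2f}")

# Same shape with a reference area 100 times larger, assuming the flow
# is dynamically similar so that C_D carries over unchanged.
full_scale_drag = drag_force(cd, RHO_AIR, TUNNEL_SPEED, 100 * MODEL_AREA)
print(f"Estimated full-scale drag: {full_scale_drag:.0f} N")
```

The shape enters only through $C_D$, while the size and flow conditions enter through the dynamic pressure and the reference area.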

Looking at the diagram above we can start to develop an appreciation for the relative magnitude of pressure drag and skin friction drag for different bodies. The “worst” shape for boundary layer separation is a plate perpendicular to the flow as shown in the first diagram. In this case, drag is clearly dominated by pressure drag with negligible skin friction drag. The situation is similar for the cylinder shown in the second diagram, but in this case the overall profile drag is smaller due to the greater degree of streamlining.

The degree of boundary layer separation, and therefore the wake of eddies behind the cylinder, depends to a large extent on the surface roughness of the body and the Reynolds number of the flow. The Reynolds number is given by

$$Re = \frac{\rho U d}{\mu}$$

where $\rho$ and $\mu$ are the density and viscosity of the fluid, $U$ is the free-stream velocity and $d$ is the characteristic dimension of the body. The reason why the Reynolds number influences boundary layer separation is that it is the dominant factor in determining the nature, laminar or turbulent, of the boundary layer. The Reynolds number at which the boundary layer transitions from laminar to turbulent is different for different problems, so any single threshold value can only serve as a general rule of thumb.

This influence of Reynolds number can be observed by comparing the second diagram to the bottom diagram. The Reynolds number of the flow over the cylinder in the bottom diagram has increased by a factor of 100, thereby increasing the extent of turbulent flow and delaying the onset of boundary layer separation (smaller wake). Hence, the drag coefficient of the bottom cylinder is half the drag coefficient of the cylinder in the second diagram even though the diameter has remained unchanged. Remember though that only the drag coefficient has been halved, whereas the overall drag force will naturally be higher at the higher Reynolds number because the drag force is a function of $U^2$ and the velocity $U$ has increased by a factor of 100.
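A quick back-of-the-envelope check of this point, assuming the same fluid and the same cylinder in both cases so that only $C_D$ and the velocity change (the function name is made up for illustration):

```python
def drag_ratio(cd_ratio, velocity_ratio):
    """Ratio of drag forces D2 / D1 for the same fluid density and the
    same reference area, from D = C_D * 0.5 * rho * U^2 * A."""
    return cd_ratio * velocity_ratio**2


# Drag coefficient halved, velocity increased by a factor of 100.
print(drag_ratio(0.5, 100))
```

Halving the drag coefficient is therefore swamped by the factor of 10,000 from the squared velocity, and the drag force ends up 5,000 times larger.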

Notice also that the streamlined aircraft wing shown in the third diagram has a much lower drag coefficient. This is because the aircraft wing is essentially a “drawn-out” cylinder of the same “thickness” as the cylinder in the second diagram, but by streamlining (drawing out) its shape, boundary layer separation occurs much further downstream and the size of the wake is much reduced.

Terminal velocity of rising beer bubbles

The terminal velocity is the speed at which the forces accelerating a body equal those decelerating it. For example, the aerodynamic drag acting on a sky diver is proportional to the square of his/her falling velocity. This means that at some point the sky diver reaches a velocity at which the drag force equals the force of gravity, and the sky diver cannot accelerate any further.

Turbulent wake behind a moving sphere. We will model the gas bubbles rising to the top of beer as a sphere moving through a liquid

The net accelerating force on a bubble of air/gas in a liquid is the buoyancy force, which arises from the difference in density between the liquid and the gas. This buoyancy force is given by

$$F_B = \frac{\pi d^3}{6} \left( \rho_l - \rho_g \right) g$$

where $d$ is the diameter of the spherical gas bubble, $\rho_g$ is the density of the gas, $\rho_l$ is the density of the liquid and $g$ is the gravitational acceleration ($9.81\ \mathrm{m/s^2}$). The buoyancy force essentially expresses the force required to displace a sphere of volume $\pi d^3 / 6$ given a certain difference in density between the gas and liquid.

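At terminal velocity the buoyancy force is balanced by the total drag acting on the gas bubble. Using the equation for the drag coefficient above we know that the total drag is

$$D = \frac{1}{2} \rho_l U_T^2 C_D \frac{\pi d^2}{4}$$

where $U_T$ is the terminal velocity and we have replaced the characteristic area $A$ with the frontal area of the gas bubble $\pi d^2 / 4$, i.e. the area of a circle. Thus, equating the buoyancy force $F_B$ and the drag $D$ and re-arranging for terminal velocity gives us

$$U_T^2 = \frac{4}{3} \frac{g d}{C_D} \frac{\rho_l - \rho_g}{\rho_l}$$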

At this point we can calculate the terminal velocity of a spherical gas bubble driven by buoyancy forces for a certain drag coefficient. The problem now is that the drag coefficient of a sphere is not constant; it changes with the flow velocity. Fortunately, the drag coefficient of a sphere plateaus at around 0.5 over a wide range of Reynolds numbers (see diagram below) and it is reasonable to assume that the flow considered here falls within this range. Some good old engineering judgement at work!

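Therefore, setting $C_D \approx 0.5$, the terminal velocity of gas bubbles rising in a beer follows from

$$V_T^2 = \frac{4}{3}\,\frac{\rho_l - \rho_g}{\rho_l}\,\frac{g d}{C_D} \approx \frac{8}{3}\,\frac{\rho_l - \rho_g}{\rho_l}\, g d$$

and taking the square root

$$V_T \approx \sqrt{\frac{8}{3}\,\frac{\rho_l - \rho_g}{\rho_l}\, g d}$$

Given the dynamic viscosity $\mu$ of the fluid, we can now check that we are in the right Reynolds number range:

$$Re = \frac{\rho_l V_T d}{\mu}$$

which comes out right at the bottom of the range over which the drag coefficient plateaus!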

So there you have it: Beer bubbles rise at around a foot per second.
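The estimate can be checked with a few lines of Python. This is only a sketch: the bubble diameter of 3 mm, the two densities and the water-like viscosity are illustrative assumptions rather than values taken from the derivation above, and the function names are made up for this example.

```python
import math


def terminal_velocity(d, rho_l, rho_g, c_d=0.5, g=9.81):
    """Terminal rise velocity (m/s) from the balance of buoyancy and drag."""
    return math.sqrt(4.0 / 3.0 * (rho_l - rho_g) / rho_l * g * d / c_d)


def reynolds_number(rho_l, v, d, mu):
    """Reynolds number based on the bubble diameter."""
    return rho_l * v * d / mu


# Assumed, illustrative values (not from the original derivation)
d = 3e-3        # bubble diameter in m
rho_l = 1000.0  # liquid density in kg/m^3 (water-like)
rho_g = 2.0     # gas density in kg/m^3 (roughly carbon dioxide)
mu = 1e-3       # dynamic viscosity in Pa s (water-like)

v_t = terminal_velocity(d, rho_l, rho_g)
re = reynolds_number(rho_l, v_t, d, mu)

print(f"V_T = {v_t:.2f} m/s ({v_t / 0.3048:.2f} ft/s), Re = {re:.0f}")
```

With these assumed inputs the bubble rises at a little under a foot per second and the Reynolds number is of the order of $10^3$. Because $V_T$ scales with $\sqrt{d}$, a bubble four times the diameter would rise only twice as fast.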

Perhaps the next time you gaze pensively into a glass of beer after a hard day’s work, this little fun-fact will give you something else to think (or smile) about.

Acknowledgements

This post is based on a fun little problem that Prof. Gary Lock set his undergraduate students at the University of Bath. Prof. Lock was probably the most entertaining and effective lecturer I had during my undergraduate studies and has influenced my own lecturing style. If I can only pass on a fraction of the passion for engineering and teaching that Prof. Lock instilled in me, I consider my job well done.

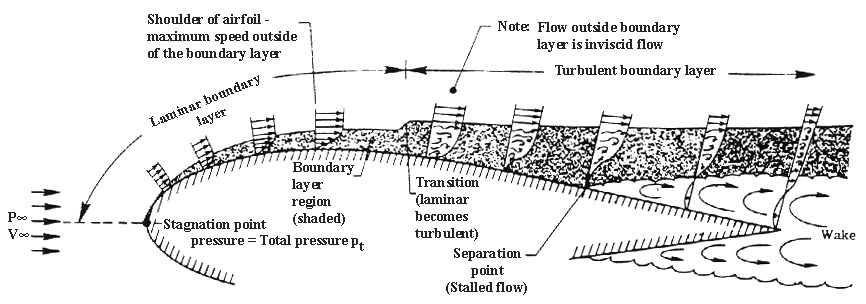

At the start of the 19th century, after studying the highly cambered thin wings of many different birds, Sir George Cayley designed and built the first modern aerofoil, later used on a hand-launched glider. This biomimetic, highly cambered and thin-walled design remained the predominant aerofoil shape for almost 100 years, mainly due to the fact that the actual mechanisms of lift and drag were not understood scientifically but were explored in an empirical fashion. One of the major problems with these early aerofoil designs was that they experienced a phenomenon now known as boundary layer separation at very low angles of attack. This significantly limited the amount of lift that could be created by the wings and meant that bigger and bigger wings were needed to allow for any progress in terms of aircraft size. Lacking the analytical tools to study this problem, aerodynamicists continued to advocate thin aerofoil sections, as there was plenty of evidence in nature to suggest their efficacy. The problem was considered to be more one of degree, i.e. incrementally iterating the aerofoil shapes found in nature, rather than of type, that is designing an entirely new shape of aerofoil in accord with fundamental physics.

During the pre-WWI era, the misguided notions of designers were compounded by the ever-increasing use of wind-tunnel tests. The wind tunnels used at the time were relatively small and ran at very low flow speeds. This meant that the performance of the aerofoils was being tested under the conditions of laminar flow (smooth flow in layers, no mixing perpendicular to flow direction) rather than the turbulent flow (mixing of flow via small vortices) present over the wing surfaces. Under laminar flow conditions, increasing the thickness of an aerofoil increases the amount of skin-friction drag (as shown in last month’s post), and hence thinner aerofoils were considered to be superior.

The modern plane – born in 1915

The situation in Germany changed dramatically during WWI. In 1915 Hugo Junkers pioneered the first practical all-metal aircraft with a cantilevered wing, essentially the same semi-monocoque wing box design used today. The most popular design up to then was the biplane configuration held together by wires and struts, which introduced considerable amounts of parasitic drag and thereby limited the maximum speed of aircraft. Eliminating these supporting struts and wires meant that the flight loads needed to be carried by other means. Junkers cantilevered a beam from either side of the fuselage, the main spar, at about 25% of the chord of the wing to resist the up and down bending loads produced by lift. Then he fitted a smaller second spar, known as the trailing edge spar, at 75% of the chord to assist the main spar in resisting fore and aft bending induced by the drag on the wing. The two spars were connected by the external wing skin to produce a closed box-section known as the wing box. Finally, a curved piece of metal was fitted to the front of the wing to form the “D”-shaped leading edge, and two pieces of metal were run out to form the trailing edge. This series of three closed sections provided the wing with sufficient torsional rigidity to sustain the twisting loads that arise because the centre of pressure (the point where the lift force can be considered to act) is offset from the shear centre (the point where a vertical load will only cause bending and no twisting). Junkers’ ideas were all combined in the world’s first practical all-metal aircraft, the Junkers J 1, which was much heavier than other aircraft at the time but established what became the predominant form of construction for the larger and faster aircraft of the coming generation.

Structures + Aerodynamics = Superior Aircraft

Junkers’ construction naturally resulted in a much thicker wing due to the room required for internal bracing, and this design provided the impetus for novel aerodynamics research. Junkers’ ideas were supported by Ludwig Prandtl, who carried out his famous aerodynamics work at the University of Göttingen. As discussed in last month’s post, Prandtl had previously introduced the notion of the boundary layer, namely the existence of a U-shaped velocity profile with a no-slip (zero velocity) condition at the surface and an increasing velocity field towards the main stream some distance away from the surface. Prandtl argued that the presence of a boundary layer supported the simplifying assumption that fluid flow can be split into two non-interacting portions: a thin layer close to the surface governed by viscosity (the stickiness of the fluid) and an inviscid mainstream. This allowed Prandtl and his colleagues to make much more accurate predictions of the lift and drag performance of specific wing shapes and greatly helped in the design of German WWI aircraft. In 1917 Prandtl showed that Junkers’ thick and less-cambered aerofoil section had two advantages. First, it produced much more favourable lift characteristics than the classic thinner sections used by Germany’s enemies. Second, the thick aerofoil could be flown at a much higher angle of attack without stalling and hence improved the manoeuvrability of a plane during dogfighting.

Skin Friction versus Pressure Drag

The flow in a boundary layer can be either laminar or turbulent. Laminar flow is orderly and stratified without interchange of fluid particles between individual layers, whereas in turbulent flow there is significant exchange of fluid perpendicular to the flow direction. The type of flow greatly influences the physics of the boundary layer. For example, due to the greater extent of mass interchange, a turbulent boundary layer is thicker than a laminar one and also features a steeper velocity gradient close to the surface, i.e. the flow speed increases more quickly as we move away from the wall.

Velocity profile of laminar versus turbulent boundary layer. Note how the turbulent flow increases velocity more rapidly away from the wall.

Just like your hand experiences friction when sliding over a surface, so do layers of fluid in the boundary layer, i.e. the slower regions of the flow are holding back the faster regions. This means that the velocity gradient throughout the boundary layer gives rise to internal shear stresses that are akin to friction acting on a surface. This type of friction is aptly called skin-friction drag and is predominant in streamlined flows where the majority of the body’s surface is aligned with the flow. As the velocity gradient at the surface is greater for turbulent than laminar flow, a streamlined body experiences more drag when the boundary layer flow over its surfaces is turbulent. A typical example of a streamlined body is an aircraft wing at cruise, and hence it is no surprise that maintaining laminar flow over aircraft wings is an ongoing research topic.
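To put rough numbers on this, the sketch below compares the average skin-friction coefficient of a smooth flat plate for a fully laminar and a fully turbulent boundary layer. The two correlations used, the Blasius result $C_f = 1.328/\sqrt{Re_L}$ and the one-seventh power law estimate $C_f \approx 0.074/Re_L^{1/5}$, are standard textbook approximations brought in purely for illustration; they do not come from the discussion above, and the plate Reynolds number of $10^6$ is an arbitrary choice.

```python
import math


def cf_laminar(re_l):
    """Average flat-plate skin-friction coefficient, laminar (Blasius)."""
    return 1.328 / math.sqrt(re_l)


def cf_turbulent(re_l):
    """Average flat-plate skin-friction coefficient, turbulent (1/7 power law)."""
    return 0.074 / re_l ** 0.2


re_l = 1e6  # assumed Reynolds number based on plate length
ratio = cf_turbulent(re_l) / cf_laminar(re_l)

print(f"laminar   C_f = {cf_laminar(re_l):.5f}")
print(f"turbulent C_f = {cf_turbulent(re_l):.5f}")
print(f"turbulent / laminar = {ratio:.1f}")
```

At this Reynolds number the turbulent estimate is roughly three and a half times the laminar one, which is why keeping the flow laminar is so attractive for streamlined bodies.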

Over flat surfaces we can reasonably ignore any changes in pressure in the flow direction. Under these conditions, the boundary layer remains stable but grows in thickness in the flow direction. This is, of course, an idealised scenario and in real-world applications, such as curved wings, the flow most likely experiences an adverse pressure gradient, i.e. the pressure increases in the flow direction. Under these conditions the boundary layer can become unstable and separate from the surface. The boundary layer separation induces a second type of drag, known as pressure drag. This type of drag is predominant for non-streamlined bodies, e.g. a golf ball flying through the air or an aircraft wing at a high angle of attack.

So why does the flow separate in the first place?

To answer this question consider fluid flow over a cylinder. Right at the front of the cylinder fluid particles must come to rest. This point is aptly called the stagnation point and is the point of maximum pressure (to conserve energy the pressure needs to fall as fluid velocity increases, and vice versa). Further downstream, the curvature of the cylinder causes the flow lines to curve, and in order to equilibrate the centripetal forces, the flow accelerates and the fluid pressure drops. Hence, an area of accelerating flow and falling pressure occurs between the stagnation point and the poles of the cylinder. Once the flow passes the poles, the curvature of the cylinder is less effective at directing the flow in curved streamlines due to all the open space downstream of the cylinder. Hence, the curvature in the flow reduces and the flow slows down, turning the previously favourable pressure gradient into an adverse pressure gradient of rising pressure.

Boundary layer separation over a cylinder (axis out of the page).
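The regions of falling and rising pressure around the cylinder can be illustrated with the classical inviscid (potential flow) solution, for which the surface pressure coefficient is $C_p = 1 - 4\sin^2\theta$, with $\theta$ measured from the front stagnation point. This idealisation is an assumption introduced here for illustration only: it ignores viscosity altogether, so it cannot predict separation, and the full pressure recovery it shows on the rear of the cylinder is not achieved in a real flow.

```python
import math


def pressure_coefficient(theta_deg):
    """Inviscid surface pressure coefficient on a cylinder.

    theta_deg is the angle from the front stagnation point in degrees.
    """
    return 1.0 - 4.0 * math.sin(math.radians(theta_deg)) ** 2


for theta in range(0, 181, 30):
    cp = pressure_coefficient(theta)
    if theta == 0:
        region = "front stagnation point (maximum pressure)"
    elif theta < 90:
        region = "favourable gradient (pressure falling downstream)"
    elif theta == 90:
        region = "pole (minimum pressure)"
    else:
        region = "adverse gradient (pressure rising downstream)"
    print(f"theta = {theta:3d} deg  Cp = {cp:+.2f}  {region}")
```

The pressure coefficient falls from $+1$ at the stagnation point to $-3$ at the poles and then rises again, which is the adverse pressure gradient that the boundary layer on the rear half of the cylinder has to fight against.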

To understand boundary layer separation we need to understand how these favourable and adverse pressure gradients influence the shape of the boundary layer. From our discussion on boundary layers, we know that the fluid travels slower the closer we are to the surface due to the retarding action of the no-slip condition at the wall. In a favourable pressure gradient, the falling pressure along the streamlines helps to urge the fluid along, thereby overcoming some of the decelerating effects of the fluid’s viscosity. As a result, the fluid is not decelerated as much close to the wall leading to a fuller U-shaped velocity profile, and the boundary layer grows more slowly.

By analogy, the opposite occurs for an adverse pressure gradient, i.e. the mainstream pressure increases in the flow direction retarding the flow in the boundary layer. So in the case of an adverse pressure gradient the pressure forces reinforce the retarding viscous friction forces close to the surface. As a result, the difference between the flow velocity close to the wall and the mainstream is more pronounced and the boundary layer grows more quickly. If the adverse pressure gradient acts over a sufficiently extended distance, the deceleration in the flow will be sufficient to reverse the direction of flow in the boundary layer. Hence the boundary layer develops a point of inflection, known as the point of boundary layer separation, beyond which a circular flow pattern is established.

For aircraft wings, boundary layer separation can lead to very significant consequences ranging from an increase in pressure drag to a dramatic loss of lift, known as aerodynamic stall. The shape of an aircraft wing is essentially an elongated and perhaps asymmetric version of the cylinder shown above. Hence the airflow over the top convex surface of a wing follows the same basic principles outlined above:

- There is a point of stagnation at the leading edge.

- A region of accelerating mainstream flow (favourable pressure gradient) up to the point of maximum thickness.

- A region of decelerating mainstream flow (adverse pressure gradient) beyond the point of maximum thickness.

These three points are summarised in the schematic diagram below.

Boundary layer separation over the top surface of a wing.

Boundary layer separation is an important issue for aircraft wings as it induces a large wake that completely changes the flow downstream of the point of separation. Skin-friction drag arises due to the inherent viscosity of the fluid, i.e. the fluid sticks to the surface of the wing and the associated frictional shear stress exerts a drag force. When a boundary layer separates, an additional drag force, the pressure drag, is induced as a result of differences in pressure upstream and downstream of the wing. The overall dimensions of the wake, and therefore the magnitude of the pressure drag, depend on the point of separation along the wing. The velocity profiles of turbulent and laminar boundary layers (see image above) show that the velocity of the fluid increases much more slowly away from the wall for a laminar boundary layer. As a result, the flow in a laminar boundary layer will reverse direction much earlier in the presence of an adverse pressure gradient than the flow in a turbulent boundary layer.

To summarise, we now know that the inherent viscosity of a fluid leads to the presence of a boundary layer that has two possible sources of drag: skin-friction drag due to the frictional shear stress between the fluid and the surface, and pressure drag due to flow separation and the existence of a downstream wake. As the total drag is the sum of these two effects, the aerodynamicist is faced with a non-trivial compromise:

- skin-friction drag is reduced by laminar flow due to a lower shear stress at the wall, but this increases pressure drag when boundary layer separation occurs.

- pressure drag is reduced by turbulent flow by delaying boundary layer separation, but this increases the skin-friction drag due to higher shear stresses at the wall.

As a result, neither laminar nor turbulent flow can be said to be preferable in general, and a judgement has to be made regarding the specific application. For a blunt body, such as a cylinder, pressure drag dominates and therefore a turbulent boundary layer is preferable. For more streamlined bodies, such as an aircraft wing at cruise, the overall drag is dominated by skin-friction drag and hence a laminar boundary layer is preferable. Dolphins, for example, have very streamlined bodies to maintain laminar flow. Early golfers, on the other hand, realised that worn rubber golf balls flew further than pristine ones, and this led to the innovation of dimples on golf balls. Fluid flow over golf balls is predominantly laminar due to the relatively low flight speeds. Dimples are therefore nothing more than small imperfections that transform the predominantly laminar flow into a turbulent one, which delays the onset of boundary layer separation and therefore reduces pressure drag.

Aerodynamic Stall