This is the third in a series of posts on rocket science. Part I covered the history of rocketry and Part II dealt with the operating principles of rockets. If you have not checked out the latter post, I highly recommend you read it first before diving into what is to follow.

We have established that designing a powerful rocket means suspending a bunch of highly reactive chemicals above an ultralight means of combustion. In terms of metrics this means that a rocket scientist is looking to

- Maximise the mass ratio to achieve the highest amounts of delta-v. This translates to carrying the maximum amount of fuel with minimum supporting structure to maximise the achievable change in velocity of the rocket.

- Maximise the specific impulse of the propellant. The higher the specific impulse of the fuel the greater the exhaust velocity of the hot gases and consequently the greater the momentum thrust of the engine.

- Optimise the shape of the exhaust nozzle to produce the highest amounts of pressure thrust.

- Optimise the staging strategy to reach a compromise between the upside of staging in terms of shedding useless mass and the downside of extra technical complexity involved in joining multiple rocket engines (such complexity typically adds mass).

- Minimise the dry mass costs of the rocket either by manufacturing simple expendable rockets at scale or by building reusable rockets.

These operational principles set the landscape of what type of rocket we want to design. In designing chemical rockets some of the pertinent questions we need to answer are

- What propellants to use for the most potent reaction?

- How to expel and direct the exhaust gases most efficiently?

- How to minimise the mass of the structure?

Here, we will turn to the propulsive side of things and answer the first two of these questions.

Propellant

In a chemical rocket an exothermic reaction of typically two different chemicals is used to create high-pressure gases which are then directed through a nozzle and converted into a high-velocity directed jet.

From the Tsiolkovsky rocket equation we know that the momentum thrust depends on the mass flow rate of the propellants $\dot{m}$ and the exhaust velocity $v_e$:

$$F = \dot{m} v_e$$

The most common types of propellant are:

- Monopropellant: a single pressurised gas or liquid fuel that disassociates when a catalyst is introduced. Examples include hydrazine, nitrous oxide and hydrogen peroxide.

- Hypergolic propellant: two liquids that spontaneously react when combined and release energy without requiring external ignition to start the reaction.

- Fuel and oxidiser propellant: a combination of two liquids or two solids, a fuel and an oxidiser, that react when ignited. Combinations of solid fuel and liquid oxidiser are also possible as a hybrid propellant system. Typical fuels include liquid hydrogen and kerosene, while liquid oxygen and nitric acid are often used as oxidisers. In liquid propellant rockets the oxidiser and fuel are typically stored separately and mixed upon ignition in the combustion chamber, whereas solid propellant rockets are designed premixed.

Rockets can of course be powered by sources other than chemical reactions. Examples of this are smaller, low performance rockets such as attitude control thrusters, which use escaping pressurised fluids to provide thrust. Similarly, a rocket may be powered by heating steam that then escapes through a propelling nozzle. However, the focus here is purely on chemical rockets.

Solid propellants

Solid propellants are made of a mixture of different chemicals that are blended into a liquid, poured into a cast and then cured into a solid. At their simplest, these chemical blends or “composites” are comprised of four different functional ingredients:

- Solid oxidiser granules.

- Flakes or powders of exothermic compounds.

- Polymer binding agent.

- Additives to stabilise or modify the burn rate.

Gunpowder is an example of a solid propellant that does not use a polymer binding agent to hold the propellant together. Rather the charcoal fuel and potassium nitrate oxidiser are compressed to hold their shape. A popular solid rocket fuel is ammonium perchlorate composite propellant (APCP) which uses a mixture of 70% granular ammonium perchlorate as an oxidiser, with 20% aluminium powder as a fuel, bound together using 10% polybutadiene acrylonitrile (PBAN).

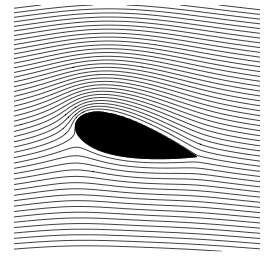

Solid propellant rocket components (via Wikimedia Commons)

Solid propellant rockets have been used much less frequently than liquid fuel rockets. However, there are some advantages, which can make solid propellants favourable to liquid propellants in some military applications (e.g. intercontinental ballistic missiles, ICBMs). Some of the advantages of solid propellants are that:

- They are easier to store and handle.

- They are simpler to operate with.

- They have fewer components. There is no need for a separate combustion chamber and turbo pumps to pump the propellants into the combustion chamber. The solid propellant (also called “grain”) is ignited directly in the propellant storage casing.

- They are much denser than liquid propellants and therefore reduce the fuel tank size (lower mass). Furthermore, solid propellants can be used as a load-bearing component, which further reduces the structural weight of the rocket. The cured solid propellant can readily be encased in a filament-wound composite rocket shell, which has more favourable strength-to-weight properties than the metallic rocket shells typically used for liquid rockets.

Apart from their use as ICBMs, solid rockets are known for their role as boosters. The simplicity and relatively low cost compared with liquid-fuel rockets mean that solid rockets are a better choice when large amounts of cheap additional thrust are required. For example, the Space Shuttle used two solid rocket boosters to complement the onboard liquid propellant engines.

The disadvantage of solid propellants is that their specific impulse, and hence the amount of thrust produced per unit mass of fuel, is lower than for liquid propellants. The mass ratio of solid rockets can actually be greater than that of liquid rockets as a result of the more compact design and lower structural mass, but the exhaust velocities are much lower. The combustion process in solid rockets depends on the surface area of the fuel, and as such any air bubbles, cracks or voids in the solid propellant cast need to be prevented. Therefore, quite expensive quality assurance measures such as ultrasonic inspection or x-rays are required to assure the quality of the cast. The second problem with air bubbles in the cast is that the amount of oxidiser is increased (via the oxygen in the air), which results in local temperature hot spots and increased burn rate. Such local imbalances can spiral out of control to produce excessive temperatures and pressures, and ultimately lead to catastrophic failure.

Another disadvantage of solid propellants is their binary operation mode. Once the chemical reaction has started and the engines have been ignited, it is very hard to throttle back or control the reaction. The propellant can be arranged in a manner to provide a predetermined thrust profile, but once the burn has started it is much harder to make adjustments on the fly. Liquid propellant rockets on the other hand use turbo pumps to throttle the propellant flow.

Liquid propellants

Liquid propellants have more favourable specific impulse measures than solid rockets. As such they are more efficient at propelling the rocket per unit mass of reactant. This performance advantage is due to the superior oxidising capabilities of liquid oxidisers. For example, traditional liquid oxidisers such as liquid oxygen or hydrogen peroxide result in higher specific impulse measures than the ammonium perchlorate in solid rockets. Furthermore, as the liquid fuel and oxidiser are pumped into the combustion chamber, a liquid-fuelled rocket can be throttled, stopped and restarted much like a car or a jet engine. In liquid-fuelled rockets the combustion process is restricted to the combustion chamber, such that only this part of the rocket is exposed to the high pressure and temperature loads, whereas in solid-fuelled rockets the propellant tanks themselves are subjected to high pressures.

Liquid propellants are also cheaper than solid propellants as liquid oxygen, for instance, can be sourced from the atmosphere, and they require relatively little refinement compared to the composite manufacturing process of solid propellants. However, the cost of the propellant only accounts for around 10% of the total cost of the rocket and therefore these savings are typically negligible. Incidentally, the high proportion of costs associated with the structural mass of the rocket is why re-usability of rocket stages is such an important factor in reducing the cost of spaceflight.

The main drawback of liquid propellants is the difficulty of storage. Traditional liquid oxidisers are highly reactive and very toxic such that they need to be handled with care and properly insulated from other reactive materials. Second, the most common oxidiser, liquid oxygen, needs to be stored at very low cryogenic temperatures and this increases the complexity of the rocket design. What is more, additional components such as turbopumps and the associated valves and seals are needed that are entirely absent from solid-fuelled rockets.

Modern spaceflight is dominated by two liquid propellant mixtures:

- Liquid oxygen (LOX) and kerosene (RP-1): As discussed in the previous post this mix of oxidiser and fuel is predominantly used for lower stages (i.e. to get off the launch pad), due to the higher density of kerosene compared to liquid hydrogen. Kerosene, as a higher density fuel, allows for better ratios of propellant to tankage mass which is favourable for the mass ratio. Second, high density fuels work better in an atmospheric pressure environment. Historically, the Atlas V, Saturn V and Soyuz rockets have used LOX and RP-1 for the first stages and so does the SpaceX Falcon rocket today.

- Liquid oxygen and liquid hydrogen: This combination is mostly used for the upper stages that propel a vehicle into orbit. The lower density of the liquid hydrogen requires higher expansion ratios (gas pressure – atmospheric pressure) and therefore works more efficiently at higher altitudes. The Atlas V, Saturn V and modern Delta family of rockets all used this propellant mix for the upper rocket stages.

The choice of propellant mixture for different stages requires certain tradeoffs. Liquid hydrogen provides higher specific impulse than kerosene, but its density is around 7 times lower and therefore liquid hydrogen occupies much more space for the same mass of fuel. As a result, the required volume and associated mass of tankage, fuel pumps and pipes is much greater. Both the specific impulse of the propellant and the tankage mass influence the potential delta-v of the rocket, and hence liquid hydrogen, chemically the more efficient fuel, is not necessarily the best option for all rockets.
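To get a feeling for this tradeoff, here is a minimal Python sketch that feeds two made-up stage designs through the Tsiolkovsky rocket equation. The exhaust velocities are the round figures quoted in this series (3500 m/s for kerosene, 4500 m/s for liquid hydrogen). The function name `ideal_delta_v` and the tankage fractions (tank mass per unit propellant mass) are purely hypothetical and only chosen to show the trend, not taken from any real rocket.

```python
import math


def ideal_delta_v(v_e, propellant_mass, tank_fraction, other_dry_mass):
    """Ideal delta-v (m/s) of a single stage from the Tsiolkovsky equation.

    tank_fraction is tank mass per unit propellant mass (hypothetical input).
    """
    tank_mass = tank_fraction * propellant_mass
    m_final = other_dry_mass + tank_mass
    m_initial = m_final + propellant_mass
    return v_e * math.log(m_initial / m_final)


# Same propellant mass and payload/engine mass, different fuel.
# Tank fractions are illustrative assumptions only.
kerosene = ideal_delta_v(3500.0, 100_000.0, tank_fraction=0.04, other_dry_mass=5_000.0)
hydrogen = ideal_delta_v(4500.0, 100_000.0, tank_fraction=0.12, other_dry_mass=5_000.0)

print(f"kerosene stage: {kerosene:.0f} m/s")
print(f"hydrogen stage: {hydrogen:.0f} m/s")
```

With these assumed numbers the heavier tankage of the hydrogen stage eats up its specific impulse advantage. Change the tank fractions and the ranking flips, which is exactly why the choice is not straightforward.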

Although the exact choice of fuel is not straightforward I will propose two general rules of thumb that explain why kerosene is used for the early stages and liquid hydrogen for the upper stages:

- In general, the denser the fuel the heavier the rocket on the launch pad. This means that the rocket needs to provide more thrust to get off the ground and it carries this greater amount of thrust throughout the entire duration of the burn. As fuel is being depleted, the greater thrust of denser fuel rockets means that the rocket reaches orbit earlier and as a result minimises drag losses in the atmosphere.

- Liquid hydrogen fuelled rockets generally produce the lightest design and are therefore used on those parts of the spacecraft that actually need to be propelled into orbit or escape Earth’s gravity to venture into deep space.

Engine and Nozzle

In combustive rockets, the chemical reaction between the fuel and oxidiser creates a high temperature, high pressure gas inside the combustion chamber. If the combustion chamber were closed and symmetric, the internal pressure acting on the chamber walls would cause equal force in all directions and the rocket would remain stationary. For anything interesting to happen we must therefore open one end of the combustion chamber to allow the hot gases to escape. As a result of the hot gases pressing against the wall opposite to the opening, a net force in the direction of the closed end is induced.

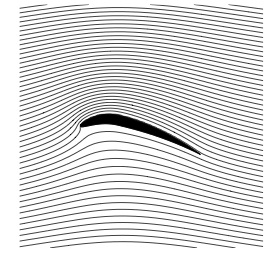

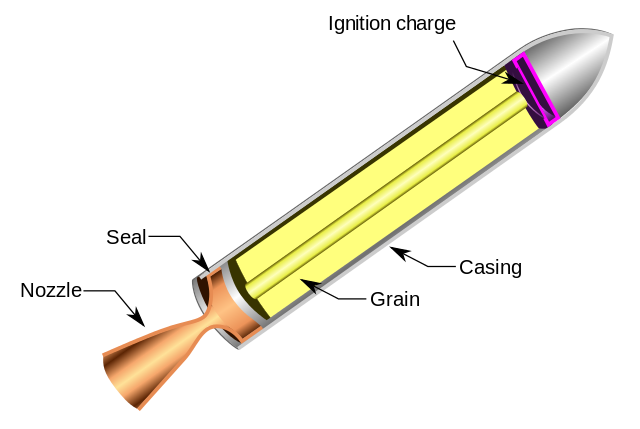

Rocket pioneers, such as Goddard, realised early on that the shape of the nozzle is of crucial importance in creating maximum thrust. A converging nozzle accelerates the escaping gases by means of the conservation of mass. However, converging nozzles are fundamentally limited to fluid flows of Mach 1, the speed of sound, and this is known as the choke condition. In this case, the nozzle provides relatively little thrust and the rocket is purely propelled by the net force acting on the closed combustion chamber wall.

To further accelerate the flow, a divergent nozzle is required at the choke point. A convergent-divergent nozzle can therefore be used to create faster fluid flows. Crucially, the Tsiolkovsky rocket equation (conservation of momentum) indicates that the thrust produced is directly proportional to the exit velocity of the hot gases. A second advantage is that the escaping gases also provide a force in the direction of flight by pushing on the divergent section of the nozzle.

Underexpanded, perfectly expanded, overexpanded and grossly overexpanded de Laval nozzles (via Wikimedia Commons).

In an ideal world a rocket would continuously operate at peak efficiency, the condition where the nozzle is perfectly expanded throughout the entire flight. This can intuitively be explained using the rocket thrust equation introduced in the previous post:

$$F = \dot{m} v_e + (p_e - p_a) A_e$$

Here $\dot{m} v_e$ is the momentum thrust and $(p_e - p_a) A_e$ is the pressure thrust, with $p_e$ the static pressure of the exhaust jet at the nozzle exit, $p_a$ the ambient pressure and $A_e$ the flow area at the nozzle exit.

Peak efficiency of the rocket engine occurs when $p_e = p_a$ such that the pressure thrust contribution is equal to zero. This is the condition of peak efficiency as the contribution of the momentum thrust is maximised while removing any penalties from over- or underexpanding the nozzle. An underexpanded nozzle means that $p_e > p_a$, and while this condition provides extra pressure thrust, $v_e$ is lower and some of the energy that has gone into combusting the gases has not been converted into kinetic energy. In an overexpanded nozzle the pressure differential is negative, $p_e < p_a$. In this case, $v_e$ is fully developed but the overexpansion induces a drag force on the rocket. If the nozzle is grossly overexpanded such that a shock wave occurs inside the nozzle, $p_e$ may still be greater than $p_a$ but the supersonic jet separates from the divergent nozzle prematurely (see the nozzle diagram) such that $A_e$ decreases. In outer space $p_a$ decreases and therefore the thrust created by the nozzle increases. However, $A_e$ is also decreasing as the flow separates earlier from the divergent nozzle. Thus, some of the increased efficiency of reduced ambient pressure is negated.
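The expansion regimes described above boil down to comparing the exit pressure of the jet with the ambient pressure. A minimal Python sketch of that comparison follows. The function name `expansion_regime`, the tolerance and the example exit pressure of 60 kPa are my own illustrative assumptions, and the sketch deliberately ignores flow separation inside the nozzle.

```python
def expansion_regime(p_exit, p_ambient, rel_tol=1e-3):
    """Classify a nozzle by comparing exit and ambient pressure (both in Pa)."""
    if abs(p_exit - p_ambient) <= rel_tol * max(p_exit, p_ambient):
        return "perfectly expanded"   # pressure thrust is (close to) zero
    if p_exit > p_ambient:
        return "underexpanded"        # positive pressure thrust, lower exit velocity
    return "overexpanded"             # negative pressure thrust


# Hypothetical fixed nozzle with a 60 kPa exit pressure at different ambient pressures.
for label, p_ambient in [("sea level", 101_325.0), ("design altitude", 60_000.0), ("vacuum", 0.0)]:
    print(f"{label}: {expansion_regime(60_000.0, p_ambient)}")
```

A fixed nozzle therefore passes through all three regimes on the way up, being perfectly expanded at only one altitude.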

Perfect expansion throughout the flight is only possible using a variable throat area or variable exit area nozzle to counteract the ambient pressure decrease with gaining altitude. As a result, fixed area nozzles become progressively underexpanded as the ambient pressure decreases during flight, and this means most nozzles are grossly overexpanded at takeoff. Various exotic nozzles such as plug nozzles, stepped nozzles and aerospikes have been proposed to adapt to changes in ambient pressure and increase thrust at higher altitudes. The extreme scenario obviously occurs once the rocket has left the Earth’s atmosphere. The nozzle is now so grossly underexpanded that the extra weight of a larger nozzle structure would outweigh any performance gained from a longer divergent section.

Thus we can see that just as in the case of the propellants the design of individual components is not a straightforward matter and requires detailed tradeoffs between different configurations. This is what makes rocket science such a difficult endeavour.

In a previous post we covered the history of rocketry over the last 2000 years. By means of the Tsiolkovsky rocket equation we also established that the thrust produced by a rocket is equal to the mass flow rate of the expelled gases multiplied by their exit velocity. In this way, chemically fuelled rockets are much like traditional jet engines: an oxidising agent and fuel are combusted at high pressure in a combustion chamber and then ejected at high velocity. So the means of producing thrust are similar, but the mechanism varies slightly:

- Jet engine: A multistage compressor increases the pressure of the air impinging on the engine nacelle. The compressed air is mixed with fuel and then combusted in the combustion chamber. The hot gases are expanded in a turbine and the energy extracted from the turbine is used to power the compressor. The mass flow rate and velocity of the gases leaving the jet engine determine the thrust.

- Chemical rocket engine: A rocket differs from the standard jet engine in that the oxidiser is also carried on board. This means that rockets work in the absence of atmospheric oxygen, i.e. in space. The rocket propellants can be in solid form ignited directly in the propellant storage tank, or in liquid form pumped into a combustion chamber at high pressure and then ignited. Compared to standard jet engines, rocket engines have much higher specific thrust (thrust per unit weight), but are less fuel efficient.

![A turbojet engine [1].](https://aerospaceengineeringblog.com/wp-content/uploads/2016/04/JetEngine.jpg)

A turbojet engine [1].

![A liquid propellant rocket engine [1].](https://aerospaceengineeringblog.com/wp-content/uploads/2016/04/RocketEngine.jpg)

A liquid propellant rocket engine [1].

In this post we will have a closer look at the operating principles and equations that govern rocket design. An introduction to rocket science if you will…

The fundamental operating principle of rockets can be summarised by Newton’s laws of motion. The three laws:

- Objects at rest remain at rest and objects in motion remain at constant velocity unless acted upon by an unbalanced force.

- Force equals mass times acceleration (or $F = ma$).

- For every action there is an equal and opposite reaction.

are known to every high school physics student. But how exactly do they relate to the motion of rockets?

Let us start with the two qualitative laws (the first and third), and then return to the more quantitative second law.

Well, the first law simply states that to change the velocity of the rocket, from rest or a finite non-zero velocity, we require the action of an unbalanced force. Hence, the thrust produced by the rocket engines must be greater than the forces slowing the rocket down (friction) or pulling it back to earth (gravity). Fundamentally, Newton’s first law applies to the expulsion of the propellants. The internal pressure of the combustion inside the rocket must be greater than the outside atmospheric pressure in order for the gases to escape through the rocket nozzle.

A more interesting implication of Newton’s first law is the concept of escape velocity. As the force of gravity reduces with the square of the distance from the centre of the earth ($F \propto 1/r^2$), and drag on a spacecraft is basically negligible once outside the Earth’s atmosphere, a rocket travelling at 40,270 km/hr (or 25,023 mph) will eventually escape the pull of Earth’s gravity, even when the rocket’s engines have been switched off. With the engines switched off, the gravitational pull of earth is slowing down the rocket. But as the rocket is flying away from Earth, the gravitational pull is simultaneously decreasing at a quadratic rate. When starting at the escape velocity, the initial inertia of the rocket is sufficient to guarantee that the gravitational pull decays to a negligible value before the rocket comes to a standstill. Currently, the spacecraft Voyager 1 and 2 are on separate journeys to outer space after having been accelerated beyond escape velocity.
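The escape velocity figure quoted above can be checked with a few lines of Python. The formula $v_{esc} = \sqrt{2GM/r}$ is not derived in this post; it is the standard textbook result from balancing kinetic energy against gravitational potential energy, and I am assuming it here together with the usual values for Earth's gravitational parameter and mean radius. Drag and the Earth's rotation are ignored.

```python
import math

GM_EARTH = 3.986004418e14   # Earth's gravitational parameter G*M in m^3/s^2
R_EARTH = 6.371e6           # mean radius of the Earth in m


def escape_velocity(r, gm=GM_EARTH):
    """Escape velocity in m/s at distance r (m) from the centre of the body."""
    return math.sqrt(2.0 * gm / r)


v_esc = escape_velocity(R_EARTH)
print(f"{v_esc / 1000:.1f} km/s = {v_esc * 3.6:.0f} km/h")
```

Starting from the Earth's surface this comes out at roughly 11.2 km/s. Evaluating the function at larger `r` shows how the required speed drops with distance from Earth.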

At face value, Newton’s third law, the principle of action and reaction, is seemingly intuitive in the case of rockets. The action is the force of the hot, highly directed exhaust gases in one direction, which, as a reaction, causes the rocket to accelerate in the opposite direction. When we walk, our feet push against the ground, and as a reaction the surface of the Earth acts against us to propel us forward.

So what does a rocket “push” against? The molecules in the surrounding air? But if that’s the case, then why do rockets work in space?

The thrust produced by a rocket is a reaction to mass being hurled in one direction (i.e. to conserve momentum, more on that later) and not a result of the exhaust gases interacting directly with the surrounding atmosphere. As the rocket’s exhaust is entirely comprised of propellant originally carried on board, a rocket essentially propels itself by expelling parts of its mass at high speed in the opposite direction of the intended motion. This “self-cannibalisation” is why rockets work in the vacuum of space, when there is nothing to push against. So the rocket doesn’t push against the air behind it at all, even when inside the Earth’s atmosphere.

Newton’s second law gives us a feeling for how much thrust is produced by the rocket. The thrust is equal to the mass of the burned propellants multiplied by their acceleration. The capability of rockets to take-off and land vertically is testament to their high thrust-to-weight ratios. Compare this to commercial jumbo or military fighter jets which use jet engines to produce high forward velocity, while the upwards lift is purely provided by the aerodynamic profile of the aircraft (fuselage and wings). Vertical take-off and landing (VTOL) aircraft such as the Harrier Jump jet are the rare exception.

At any time during the flight, the ratio of net thrust to mass is equal to the acceleration of the rocket. From Newton’s second law,

$$a = \frac{F}{m}$$

where $F$ is the net thrust of the rocket (engine thrust minus drag) and $m$ is the instantaneous mass of the rocket. As propellant is burned, the mass $m$ of the rocket decreases such that the highest accelerations of the rocket are achieved towards the end of a burn. On the flipside, the rocket is heaviest on the launch pad such that the engines have to produce maximum thrust to get the rocket away from the launch pad quickly (determined by the net acceleration $a - g$).
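As a quick illustration of why the acceleration peaks at the end of a burn, the sketch below samples Newton's second law at a few instants for constant net thrust and constant mass flow. All numbers (thrust, launch mass, flow rate, burn time) are made up for illustration, and `burn_profile` is a hypothetical helper, not a model of any real rocket.

```python
def acceleration(net_thrust, mass):
    """Newton's second law: instantaneous acceleration in m/s^2."""
    return net_thrust / mass


def burn_profile(net_thrust, m_initial, mass_flow, burn_time, steps=4):
    """Return (time, mass, acceleration) samples for constant thrust and mass flow."""
    samples = []
    for i in range(steps + 1):
        t = burn_time * i / steps
        mass = m_initial - mass_flow * t     # propellant burned so far is gone
        samples.append((t, mass, acceleration(net_thrust, mass)))
    return samples


# Illustrative values only: 1 MN net thrust, 50 t at ignition, 250 kg/s for 160 s.
profile = burn_profile(net_thrust=1.0e6, m_initial=50_000.0, mass_flow=250.0, burn_time=160.0)
for t, mass, a in profile:
    print(f"t = {t:5.0f} s   mass = {mass:7.0f} kg   a = {a:6.1f} m/s^2")
```

Each row applies $a = F/m$ at one instant only, which is precisely the limitation discussed next: the snapshots do not by themselves tell us the velocity gained over the burn.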

However, Newton’s second law only applies to each instantaneous moment in time. It does not allow us to make predictions of the rocket velocity as fuel is depleted. Mass is considered to be constant in Newton’s second law, and therefore it does not account for the fact that the rocket accelerates more as fuel inside the rocket is depleted.

The rocket equation

The Tsiolkovsky rocket equation, however, takes this into account. The motion of the rocket is governed by the conservation of momentum. When the rocket and internal gases are moving as one unit, the overall momentum, the product of mass and velocity, is equal to $p_1$. Thus, for a total mass of rocket and gas $M$ moving at velocity $v$

$$p_1 = M v$$

As the gases are expelled through the rear of the rocket, the overall momentum of the rocket and fuel has to remain constant as long as no external forces act on the system. Thus, if a very small amount of gas $\delta m$ is expelled at velocity $v_e$ relative to the rocket (either in the direction of $v$ or in the opposite direction), the overall momentum of the system (sum of rocket and expelled gas) is

$$p_2 = (M - \delta m)(v + \delta v) + \delta m (v + v_e)$$

As $p_2$ has to equal $p_1$ to conserve momentum

$$M v = (M - \delta m)(v + \delta v) + \delta m (v + v_e)$$

and by isolating the change in rocket velocity $\delta v$

$$\delta v = -\frac{\delta m}{M - \delta m} v_e$$

The negative sign in the equation above indicates that the rocket always changes velocity in the opposite direction of the expelled gas, as intuitively expected. So if the gas is expelled in the opposite direction of the rocket motion (so $v_e$ is negative), then the change in the rocket velocity will be positive and it will accelerate.

At any time the quantity $m = M - \delta m$ is equal to the residual mass of the rocket (dry mass + propellant) and $dm = -\delta m$ denotes its change. If we assume that the expelled velocity of the gas remains constant throughout, we can easily find the incremental change in velocity as the rocket changes from an initial mass $m_0$ to a final mass $m_f$. So,

$$\Delta v = \int_{m_0}^{m_f} v_e \frac{dm}{m} = -v_e \ln \frac{m_0}{m_f}$$

With the gas expelled rearwards $v_e$ is negative, so in terms of the magnitude of the exhaust velocity (written simply as $v_e$ from here on)

$$\Delta v = v_e \ln \frac{m_0}{m_f}$$

This equation is known as the Tsiolkovsky rocket equation and is applicable to any body that accelerates by expelling part of its mass at a specific velocity. Even though the expulsion velocity may not remain constant during a real rocket launch we can refer to an effective exhaust velocity that represents a mean value over the course of the flight.
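The logarithm in the rocket equation is nothing more than the sum of many small momentum exchanges. The following Python sketch, under the same assumptions as the derivation (constant exhaust speed, no gravity or drag), expels the propellant in small lumps and compares the accumulated velocity with the closed-form result. The function names and the example masses are my own choices for illustration.

```python
import math


def delta_v_closed_form(v_e, m0, mf):
    """Tsiolkovsky rocket equation with v_e as the exhaust speed (magnitude)."""
    return v_e * math.log(m0 / mf)


def delta_v_stepwise(v_e, m0, mf, steps=100_000):
    """Add up the velocity gained from many small expulsions of mass."""
    dm = (m0 - mf) / steps                 # mass expelled per step
    mass, velocity = m0, 0.0
    for _ in range(steps):
        velocity += v_e * dm / (mass - dm)  # momentum balance for one expulsion
        mass -= dm
    return velocity


closed = delta_v_closed_form(3500.0, 17.4, 1.0)
stepped = delta_v_stepwise(3500.0, 17.4, 1.0)
print(f"closed form: {closed:.1f} m/s, stepwise: {stepped:.1f} m/s")
```

The two values agree more closely as `steps` is increased, which is just the discrete sum converging to the integral.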

The Tsiolkovsky rocket equation shows that the change in velocity attainable is a function of the exhaust jet velocity and the ratio of original take-off mass (structural weight + fuel = $m_0$) to its final mass (structural mass + residual fuel = $m_f$). If all of the propellant is burned, the mass ratio expresses how much of the total mass is structural mass, and therefore provides some insight into the efficiency of the rocket.

In a nutshell, the greater the ratio of fuel to structural mass, the more propellant is available to accelerate the rocket and therefore the greater the maximum velocity of the rocket.

So in the ideal case we want a bunch of highly reactive chemicals magically suspended above an ultralight means of combusting said fuel.

In reality this means we are looking for a rocket propelled by a fuel with high efficiency of turning chemical energy into kinetic energy, contained within a lightweight tankage structure and combusted by a lightweight rocket engine. But more on that later!

Thrust

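Often, we are more interested in the thrust created by the rocket and its associated acceleration $a$. By dividing the incremental form of the rocket equation above by a small time increment $\delta t$ and again assuming $v_e$ to remain constant (with $v_e$ taken as the magnitude of the exhaust velocity, $\delta m$ the mass of gas expelled and $m$ the instantaneous mass of the rocket)

$$a = \frac{\delta v}{\delta t} = \frac{v_e}{m} \frac{\delta m}{\delta t}$$

and the associated thrust acting on the rocket is

$$F = m a = \dot{m} v_e$$

where $\dot{m} = \delta m / \delta t$ is the mass flow rate of gas exiting the rocket. If the differences in exit pressure of the combustion gases and surrounding ambient pressure are accounted for this becomes:

$$F = \dot{m} v_e + (p_e - p_a) A_e$$

where $v_e$ is the jet velocity at the nozzle exit plane, $A_e$ is the flow area at the nozzle exit plane, i.e. the cross-sectional area of the flow where it separates from the nozzle, $p_e$ is the static pressure of the exhaust jet at the nozzle exit plane and $p_a$ the pressure of the surrounding atmosphere.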

This equation provides some additional physical insight. The term $\dot{m} v_e$ is the momentum thrust which is constant for a given throttle setting. The difference in gas exit and ambient pressure multiplied by the nozzle area provides additional thrust known as pressure thrust. With increasing altitude the ambient pressure decreases, and as a result, the pressure thrust increases. So rockets actually perform better in space because the ambient pressure around the rocket is negligibly small. However, $A_e$ also decreases in space as the jet exhaust separates earlier from the nozzle due to overexpansion of the exhaust jet. For now it will suffice to say that pressure thrust typically increases by around 30% from launchpad to leaving the atmosphere, but we will return to the physics behind this in the next post.
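The split between momentum thrust and pressure thrust is easy to play with numerically. Below is a minimal Python sketch of the thrust equation evaluated at sea level and in vacuum. The engine figures (mass flow, exit velocity, exit pressure, exit area) are invented for illustration only, and the sketch keeps the exit flow area fixed, i.e. it ignores the flow separation effect mentioned above.

```python
def thrust(mass_flow, v_exit, p_exit, p_ambient, exit_area):
    """Total thrust in N: momentum thrust plus pressure thrust."""
    momentum_thrust = mass_flow * v_exit
    pressure_thrust = (p_exit - p_ambient) * exit_area
    return momentum_thrust + pressure_thrust


# Hypothetical engine: 300 kg/s at 3000 m/s, 70 kPa exit pressure, 1 m^2 exit area.
engine = dict(mass_flow=300.0, v_exit=3000.0, p_exit=70_000.0, exit_area=1.0)

sea_level = thrust(p_ambient=101_325.0, **engine)
vacuum = thrust(p_ambient=0.0, **engine)
print(f"sea level: {sea_level / 1000:.0f} kN, vacuum: {vacuum / 1000:.0f} kN")
```

For this made-up engine the pressure term is negative on the launch pad and positive in vacuum, while the momentum term stays the same throughout.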

Impulse and specific impulse

The overall amount of thrust is typically not used as an indicator for rocket performance. Better indicators of an engine’s performance are the total and specific impulse figures. Ignoring any external forces (gravity, drag, etc.) the impulse is equal to the change in momentum of the rocket (mass times velocity) and is therefore a better metric to gauge how much mass the rocket can propel and to what maximum velocity. For a change in momentum $\Delta p$ the impulse is

$$I = \Delta p = F \Delta t$$

So to maximise the impulse imparted on the rocket we want to maximise the amount of thrust acting over the burn interval $\Delta t$. If the burn period is broken into a number of finite increments, then the total impulse is given by

$$I = \sum_i F_i \Delta t_i$$

Therefore, impulse is additive and the total impulse of a multistage rocket is equal to the sum of the impulse imparted by each individual stage.
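In code, the additivity of impulse is just a sum over burn segments. Here is a small Python sketch with hypothetical thrust levels and durations for a two-stage rocket; the helper name `total_impulse` and all numbers are illustrative assumptions.

```python
def total_impulse(segments):
    """Sum thrust * duration over (thrust in N, duration in s) segments; result in N s."""
    return sum(force * duration for force, duration in segments)


# Made-up burn segments: the first stage changes thrust part-way through its burn.
stage_1 = [(7.0e6, 60.0), (7.5e6, 90.0)]
stage_2 = [(1.0e6, 300.0)]

impulse_1 = total_impulse(stage_1)
impulse_2 = total_impulse(stage_2)
impulse_total = total_impulse(stage_1 + stage_2)
print(f"stage 1: {impulse_1:.3e} N s, stage 2: {impulse_2:.3e} N s, total: {impulse_total:.3e} N s")
```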

By specific impulse we mean the net impulse imparted by a unit mass of propellant. It’s the efficiency with which combustion of the propellant can be converted into impulse. The specific impulse is therefore a metric related to a specific propellant system (fuel + oxidiser) and essentially normalises the exhaust velocity by the acceleration of gravity that it needs to overcome:

$$I_{sp} = \frac{v_e}{g}$$

where $v_e$ is the effective exhaust velocity and $g = 9.81$ m/s². Different fuel and oxidiser combinations have different values of $I_{sp}$ and therefore different exhaust velocities.

A typical liquid hydrogen/liquid oxygen rocket will achieve an $I_{sp}$ around 450 s with exhaust velocities approaching 4500 m/s, whereas kerosene and liquid oxygen combinations are slightly less efficient with $I_{sp}$ around 350 s and $v_e$ around 3500 m/s. Of course, a propellant with higher values of $I_{sp}$ is more efficient as more thrust is produced per unit of propellant.
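Converting between specific impulse and effective exhaust velocity is a one-liner in each direction. A minimal Python sketch, using the value of 9.81 m/s² for gravitational acceleration quoted above (the function names are my own):

```python
G0 = 9.81  # acceleration of gravity in m/s^2, as used in this post


def specific_impulse(v_e):
    """Specific impulse in seconds from effective exhaust velocity in m/s."""
    return v_e / G0


def exhaust_velocity(isp):
    """Effective exhaust velocity in m/s from specific impulse in seconds."""
    return isp * G0


for propellant, isp in [("hydrogen/oxygen", 450.0), ("kerosene/oxygen", 350.0)]:
    print(f"{propellant}: Isp = {isp:.0f} s -> v_e = {exhaust_velocity(isp):.0f} m/s")
```

The results sit slightly below the round 4500 m/s and 3500 m/s figures, which are just convenient approximations of the specific impulse multiplied by 10.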

Delta-v and mass ratios

The Tsiolkovsky rocket equation can be used to calculate the theoretical upper limit in total velocity change, called delta-v, for a certain amount of propellant mass burned at a constant exhaust velocity $v_e$. At an altitude of 200 km an object needs to travel at 7.8 km/s to inject into low earth orbit (LEO). If we start from rest, this means a delta-v equal to 7.8 km/s. Accounting for frictional losses and gravity, the actual requirement rocket scientists need to design for is just shy of delta-v=10 km/s. So assuming a lower bound effective exhaust velocity of 3500 m/s, we require a mass ratio of…

$$\frac{m_0}{m_f} = e^{\Delta v / v_e} = e^{10000/3500} \approx 17.4$$

to reach LEO. This means that the original rocket on the launch pad is 17.4 times heavier than when all the rocket fuel is depleted!

Just to put this into perspective, this means that the mass of fuel inside the rocket is SIXTEEN times greater than the dry structural mass of tanks, payload, engine, guidance systems etc. That’s a lot of fuel!

Delta-v figures required for rendezvous in the solar system. Note the delta-v to get to the Moon is approximately 10 + 4.1 + 0.7 + 1.6 = 16.4 km/s and thus requires a whopping mass ratio of 108.4 at an effective exhaust velocity of 3500 m/s.

The quantity $m_0/m_f$ is known as the mass ratio. In some cases, the reciprocal of the mass ratio is used to calculate the mass fraction:

$$\text{mass fraction} = 1 - \frac{m_f}{m_0}$$

The mass fraction is necessarily always smaller than 1, and in the above case is equal to $1 - 1/17.4 \approx 0.94$.

So 94% of this rocket’s mass is fuel!

Such figures are by no means out of the ordinary. In fact, the Space Shuttle had a mass ratio in this ballpark (15.4 = 93.5% fuel) and Europe’s Ariane V rocket has a mass ratio of 39.9 (97.5% fuel).
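The mass ratio and fuel percentage figures above are easy to reproduce. The Python sketch below inverts the rocket equation for the mass ratio and converts a mass ratio into the fraction of launch mass that is propellant, using one minus the reciprocal of the mass ratio (which is how 17.4 turns into 94%). The function names are my own, and 3500 m/s is the lower bound exhaust velocity assumed in this post.

```python
import math


def mass_ratio(delta_v, v_e):
    """Mass ratio m0/mf required for a given delta-v (both velocities in m/s)."""
    return math.exp(delta_v / v_e)


def propellant_fraction(ratio):
    """Fraction of the launch mass that is propellant for a given mass ratio."""
    return 1.0 - 1.0 / ratio


for label, delta_v in [("LEO", 10_000.0), ("Moon", 16_400.0)]:
    ratio = mass_ratio(delta_v, 3500.0)
    print(f"{label}: mass ratio {ratio:.1f}, propellant fraction {propellant_fraction(ratio):.1%}")
```

Calling `propellant_fraction(15.4)` and `propellant_fraction(39.9)` recovers the Space Shuttle and Ariane V percentages quoted above.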

If anything, flying a rocket means being perched precariously on top of a sea of highly explosive chemicals!

The reason for the incredibly high amount of fuel is the exponential term in the above equation: the required mass ratio grows exponentially with the desired delta-v. Read the other way round, delta-v only grows with the logarithm of the mass ratio, so each extra gram of fuel we pack into the rocket buys a sublinear (diminishing) increase in delta-v. On the downside, every piece of extra equipment, e.g. payload, we stick into the rocket adds to the final mass, lowers the mass ratio and therefore directly reduces delta-v.

In reality, the situation is obviously more complex. The point of a rocket is to carry a certain payload into space and the distance we want to travel is governed by a specific amount of delta-v (see figure to the right). For example, getting to the Moon requires a delta-v of approximately 16.4 km/s which implies a whopping mass ratio of 108.4. Therefore, if we wish to increase the payload mass, we need to simultaneously increase propellant mass to keep the mass ratio at 108.4. However, increasing the amount of fuel increases the loads acting on the rocket, and therefore more structural mass is required to safely get the rocket to the Moon. Of course, increasing structural mass similarly increases our fuel requirement, and off we go on a nice feedback loop…
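The feedback loop between payload, propellant and structure can be sketched as a simple fixed-point iteration. The model below is my own simplification and not a real sizing method: it assumes that structural mass is a fixed multiple of propellant mass (the hypothetical coefficient `structure_per_propellant`) and then repeats the loop "more structure needs more propellant needs more structure" until it settles or is shown to run away.

```python
def size_propellant(payload, mass_ratio, structure_per_propellant, tol=1e-9, max_iter=10_000):
    """Iterate the payload -> propellant -> structure loop to a fixed point.

    Returns the propellant mass in kg, or None if the loop cannot converge.
    structure_per_propellant is an assumed, hypothetical design coefficient.
    """
    growth = structure_per_propellant * (mass_ratio - 1.0)
    if growth >= 1.0:
        return None  # each kg of propellant would demand at least one more kg of propellant
    propellant = 0.0
    for _ in range(max_iter):
        structure = structure_per_propellant * propellant
        # Mass ratio definition: propellant = (mass_ratio - 1) * final mass
        new_propellant = (mass_ratio - 1.0) * (payload + structure)
        if abs(new_propellant - propellant) <= tol * max(new_propellant, 1.0):
            return new_propellant
        propellant = new_propellant
    return propellant


print(size_propellant(payload=1000.0, mass_ratio=17.4, structure_per_propellant=0.05))
print(size_propellant(payload=1000.0, mass_ratio=108.4, structure_per_propellant=0.05))
```

With the assumed coefficient of 0.05 the loop settles for the LEO mass ratio of 17.4 but never closes for the lunar mass ratio of 108.4, no matter how much propellant is added. That is the single-stage dead end that motivates staging.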

This simple example explains why the mass ratio is a key indicator of a rocket’s structural efficiency. The higher the mass ratio the greater the ratio of delta-v producing propellant to non-delta-v producing structural mass. All other factors being equal, this suggests that a high mass ratio rocket is more efficient because less structural mass is needed to carry a set amount of propellant.

The optimal rocket is therefore propelled by a high specific impulse fuel mixture (for high exhaust velocity), with minimal structural requirements to contain the propellant and resist flight loads, and minimal requirements for additional auxiliary components such as guidance systems, attitude control, etc.

For this reason, early rocket stages typically use high-density propellants. The higher density means the propellants take up less space per unit mass. As a result, the tank structure holding the propellant is more compact as well. For example, the Saturn V rocket used the slightly lower specific impulse combination of kerosene and liquid oxygen for the first stage, and the higher specific impulse propellants liquid hydrogen and liquid oxygen for later stages.

Closely related to this is the idea of staging. Once a certain amount of fuel within the tanks has been used up, it is beneficial to shed the unnecessary structural mass that was previously used to contain the fuel but is no longer contributing to delta-v. In fact, for high delta-v missions, such as getting into orbit, the total dry mass of the rockets we use today is too great to be able to accelerate to the desired delta-v. Hence the idea of multi-stage rockets. We connect multiple rockets in stages, incrementally discarding those parts of the structural mass that are no longer needed, thereby increasing the mass ratio and delta-v capacity of the residual pieces of the rocket.
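To see the benefit of staging in numbers, here is a rough Python comparison of a single-stage rocket with a two-stage rocket built from exactly the same propellant and structure. All masses and the 80/20 split are hypothetical values chosen purely for illustration, and the ideal rocket equation is applied to each burn separately.

```python
import math

def delta_v(exhaust_velocity, m_initial, m_final):
    """Ideal rocket equation for a single burn."""
    return exhaust_velocity * math.log(m_initial / m_final)

# Hypothetical vehicle, numbers chosen only for illustration
V_E = 3500.0           # effective exhaust velocity in m/s (same for all stages)
PAYLOAD = 1_000.0      # kg
PROPELLANT = 90_000.0  # kg in total
STRUCTURE = 9_000.0    # kg of tanks and engines in total
SPLIT = 0.8            # share of propellant and structure in the first stage

liftoff = PAYLOAD + PROPELLANT + STRUCTURE

# Single stage: all structure is carried until the last drop of fuel is burnt
single_stage = delta_v(V_E, liftoff, PAYLOAD + STRUCTURE)

# Two stages: burn stage 1, drop its empty structure, then burn stage 2
after_burn_1 = liftoff - SPLIT * PROPELLANT
dv_1 = delta_v(V_E, liftoff, after_burn_1)
start_2 = after_burn_1 - SPLIT * STRUCTURE
dv_2 = delta_v(V_E, start_2, start_2 - (1 - SPLIT) * PROPELLANT)
two_stage = dv_1 + dv_2

print(f"single stage: {single_stage:.0f} m/s")
print(f"two stages:   {two_stage:.0f} m/s")
```

With these made-up numbers the staged vehicle ends up with noticeably more delta-v, even though not a single kilogram of propellant was added. The sketch ignores the extra mass of interstage hardware, which in practice eats into the gain.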

Cost

The cost of getting a rocket on to the launch pad can roughly be split into three components:

- Propellant cost.

- Cost of dry mass, i.e. rocket casing, engines and auxiliary units.

- Operational and labour costs.

As we saw in the last section, more than 90% of a rocket’s take-off mass is propellant. However, the specific cost (cost per kg) of the propellants is multiple orders of magnitude smaller than the cost per unit mass of the rocket’s dry mass, i.e. the raw material costs and operational costs required to manufacture and test it. A typical propellant combination of kerosene and liquid oxygen costs around $2/kg, whereas the dry mass cost of an unmanned orbital vehicle is at least $10,000/kg. As a result, the propellant cost of flying into low-Earth orbit is basically negligible.
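A quick back-of-the-envelope calculation in Python makes the point. The per-kilogram costs are the ones quoted above; the 100-tonne lift-off mass and the 94% propellant fraction are assumptions picked only to have round numbers, and `cost_breakdown` is a helper invented for this sketch.

```python
PROPELLANT_COST_PER_KG = 2.0     # USD/kg, kerosene and liquid oxygen (from the text)
DRY_MASS_COST_PER_KG = 10_000.0  # USD/kg, lower bound quoted in the text

def cost_breakdown(liftoff_mass_kg, propellant_fraction):
    """Return (propellant cost, dry mass cost) in USD for one rocket."""
    propellant_mass = liftoff_mass_kg * propellant_fraction
    dry_mass = liftoff_mass_kg - propellant_mass
    return (propellant_mass * PROPELLANT_COST_PER_KG,
            dry_mass * DRY_MASS_COST_PER_KG)

# Hypothetical rocket: 100 tonnes at lift-off, 94% of which is propellant
propellant_cost, dry_cost = cost_breakdown(100_000.0, 0.94)
share = propellant_cost / (propellant_cost + dry_cost)

print(f"propellant: ${propellant_cost:,.0f}")
print(f"dry mass:   ${dry_cost:,.0f}")
print(f"propellant share of hardware-plus-fuel cost: {share:.2%}")
```

Even though propellant makes up almost all of the mass in this example, it accounts for well under 1% of the combined cost.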

The incredibly high dry mass costs do not necessarily arise because the raw materials, predominantly high-grade aerospace metals, are prohibitively expensive; rather, they cannot be bought at scale because of the limited number of rockets being manufactured. Second, the criticality of reducing structural mass for maximising delta-v means that very tight safety factors are employed. Operating a tight safety factor design philosophy while ensuring sufficient safety and reliability standards under the extreme load conditions exerted on the rocket means that manufacturing standards and quality control measures are by necessity state-of-the-art. Such procedures often rely on highly specialised technologies that significantly drive up costs.

To clear these economic hurdles, some have proposed to manufacture simple expendable rockets at scale, while others are focusing on reusable rockets. The former approach will likely only work for unmanned smaller rockets and is being pursued by companies such as Rocket Lab Ltd. The Space Shuttle was an attempt at the latter approach that did not live up to its potential. The servicing costs associated with the reusable heat shield were unexpectedly high and ultimately forced the retirement of the Shuttle. Most recently, Elon Musk and SpaceX have picked up the ball and have successfully designed a fully reusable first stage.

The principles outlined above set the landscape of what type of rocket we want to design. Ideally, we want high specific impulse chemicals suspended in a lightweight yet strong tankage structure above an efficient means of combustion.

Some of the more detailed questions rocket engineers are faced with are:

- What propellants to use to do the job most efficiently and at the lowest cost?

- How to expel and direct the exhaust gases most efficiently?

- How to control the reaction safely?

- How to minimise the mass of the structure?

- How to control the attitude and accuracy of the rocket?

We will address these questions in the next part of this series.

References

[1] Rolls-Royce plc (1996). The Jet Engine. Fifth Edition. Derby, England.

Rocket technology has evolved for more than 2000 years. Today’s rockets are a product of a long tradition of ingenuity and experimentation, and combine technical expertise from a wide array of engineering disciplines. Very few, if any, of humanity’s inventions are designed to withstand equally extreme conditions. Rockets are subjected to awesome g-forces at lift-off, and experience extreme hot spots in places where aerodynamic friction acts most strongly, and extreme cold due to liquid hydrogen/oxygen at cryogenic temperatures. Operating a rocket is a balancing act, and the line between a successful launch and catastrophic blow-out is often razor thin. No other engineering system rivals the complexity and hierarchy of technologies that need to interface seamlessly to guarantee sustained operation. It is no coincidence that “rocket science” is the quintessential cliché to describe the mind-blowingly complicated.

Fortunately for us, we live in a time where rocketry is undergoing another golden period. Commercial rocket companies like SpaceX and Blue Origin are breathing fresh air into an industry that has traditionally been dominated by government-funded space programs. But even the incumbent companies are not resting on their laurels, and are developing new powerful rockets for deep-space exploration and missions to Mars. Recent blockbuster movies such as Gravity, Interstellar and The Martian are an indication that space adventures are once again stirring the imagination of the public.

What better time than now to look back at the past 2000 years of rocketry, investigate where past innovation has taken us and look ahead to what is on the horizon? It’s certainly impossible to cover all of the 51 influential rockets in the chart below but I will try my best to provide a broad brush stroke of the early beginnings in China to the Space Race and beyond.

51 influential rockets ordered by height. Created by Tyler Skrabek

The history of rocketry can be loosely split into two eras. First, early pre-scientific tinkering and second, the post-Enlightenment scientific approach. The underlying principle of rocket propulsion has largely remained the same, whereas the detailed means of operation and our approach to developing rocketry have changed a great deal.

The fundamental principle of rocket propulsion, spewing hot gases through a nozzle to induce motion in the opposite direction, is nicely illustrated by two historic examples. The Roman writer Aulus Gellius tells a story of Archytas, who, sometime around 400 BC, built a flying pigeon out of wood. The pigeon was held aloft by a jet of steam or compressed air escaping through a nozzle. Three centuries later, Hero of Alexandria invented the aeolipile based on the same principle of using escaping steam as a propulsive fluid. In the aeolipile, a hollow sphere was connected to a water bath via tubing, which also served as a primitive type of bearing, suspending the sphere in mid-air. A fire beneath the water basin created steam which was subsequently forced to flow into the sphere via the connected tubing. The only way for the gas to escape was through two L-shaped outlets pointing in opposite directions. The escaping steam induced a moment about the hinged support effectively rotating the sphere about its axis.

In both these examples, the motion of the device is governed by the conservation of momentum. When the rocket and internal gases are moving as one unit, the overall momentum, the product of mass and velocity, is equal to $P_1$. Thus, for a total mass of rocket and gas, $M$, moving at velocity $v$,

$$P_1 = M v$$

As the gases are expelled through the rear of the rocket, the overall momentum of the rocket and fuel has to remain constant as long as no external forces are acting on the system. Thus, if a very small amount of gas $\delta M$ is expelled at velocity $v_e$ relative to the rocket (either in the direction of $v$ or in the opposite direction), the overall momentum of the system is

$$P_2 = (M - \delta M)(v + \delta v) + \delta M (v + v_e)$$

As $P_2$ has to equal $P_1$ to conserve momentum,

$$M v = (M - \delta M)(v + \delta v) + \delta M (v + v_e)$$

and by isolating the change in rocket velocity $\delta v$ (and neglecting the second-order product $\delta M \, \delta v$),

$$\delta v = -v_e \frac{\delta M}{M}$$

The negative sign in the equation above indicates that the rocket always changes velocity in the opposite direction of the expelled gas. Hence, if the gas is expelled in the opposite direction of the motion (i.e. $v_e$ is negative), then the change in the rocket velocity will be positive (i.e. it will accelerate).

At any time the quantity $M$ is equal to the residual mass of the rocket (dry mass + propellant) and $\delta M$ denotes the small amount by which it changes. If we assume that the expelled velocity of the gas remains constant throughout, we can easily integrate the above expression to find the incremental change in velocity as the total rocket mass (dry mass + propellant) changes from an initial mass $M_0$ to a final mass $M_f$. Hence,

$$\Delta v = -v_e \ln \frac{M_0}{M_f}$$

so that a rocket expelling gas rearwards at speed $|v_e|$ gains $\Delta v = |v_e| \ln (M_0/M_f)$. This equation is known as the Tsiolkovsky rocket equation (more on him later) and is applicable to any body that accelerates by expelling part of its mass at a specific velocity.

Often, we are more interested in the thrust created by the rocket and its associated acceleration $a$. Hence, by dividing the equation for $\delta v$ by a small time increment $\delta t$,

$$a = \frac{\delta v}{\delta t} = -\frac{v_e}{M} \frac{\delta M}{\delta t}$$

and the associated thrust acting on the rocket is

$$F = M a = -\dot{m} v_e$$

where $\dot{m} = \delta M / \delta t$ is the mass flow rate of gas exiting the rocket. This simple equation captures the fundamental physics of rocket propulsion. A rocket creates thrust either by expelling more of its mass at a higher rate ($\dot{m}$) or by increasing the velocity at which the mass is expelled. In the ideal case that’s it! (So by idealised we mean constant $v_e$ and no external forces, e.g. aerodynamic drag in the atmosphere or gravity. In actual calculations of the required propellant mass these forces and other efficiency reducing factors have to be included.)

A plot of the rocket equation highlights one of the most pernicious conundrums of rocketry: The amount of fuel required (i.e. the mass ratio $M_0/M_f$) to accelerate the rocket through a velocity change $\Delta v$ at a fixed effective exhaust velocity $v_e$ increases exponentially as we increase the demand for greater $\Delta v$. As the cost of a rocket is closely related to its mass, this explains why it is so expensive to propel anything of meaningful size into orbit ($\Delta v \approx$ 28,800 km/hr (18,000 mph) for low-earth orbit).

The exponential increase of fuel mass required to accelerate a rocket through a specific velocity change
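As a sanity check on the conservation of momentum argument, the short Python sketch below expels the propellant in many small parcels, adds up the velocity gained from each one, and compares the total with the closed-form rocket equation. The function names and all numbers are assumptions made for illustration, and the exhaust speed is treated as a positive magnitude with the gas leaving rearwards.

```python
import math

def simulate_burn(m_initial, m_final, exhaust_speed, steps=100_000):
    """Expel propellant in small equal parcels and sum the velocity gains."""
    parcel = (m_initial - m_final) / steps
    mass = m_initial
    velocity = 0.0
    for _ in range(steps):
        # Momentum balance for one parcel: (M - dM) * dv = exhaust_speed * dM
        velocity += exhaust_speed * parcel / (mass - parcel)
        mass -= parcel
    return velocity

def rocket_equation(m_initial, m_final, exhaust_speed):
    """Closed-form ideal delta-v for exhaust expelled rearwards."""
    return exhaust_speed * math.log(m_initial / m_final)

def thrust(mass_flow_rate, exhaust_speed):
    """Ideal momentum thrust in newtons (no pressure thrust, drag or gravity)."""
    return mass_flow_rate * exhaust_speed

# Hypothetical rocket: 100 t at lift-off, 10 t at burnout, 3500 m/s exhaust speed
numerical = simulate_burn(100_000.0, 10_000.0, 3500.0)
closed_form = rocket_equation(100_000.0, 10_000.0, 3500.0)
print(f"summed parcels:  {numerical:.1f} m/s")
print(f"rocket equation: {closed_form:.1f} m/s")
print(f"thrust at 100 kg/s: {thrust(100.0, 3500.0) / 1000:.0f} kN")
```

The two delta-v values agree more and more closely as the number of parcels grows, which is just the integration step of the derivation carried out numerically.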

The first documented use of such a “true” rocket was during the battle of Kai-Keng between the Chinese and Mongols in 1232. During this battle the Chinese managed to hold the Mongols at bay using a primitive form of a solid-fueled rocket. A hollow tube was capped at one end, filled with gunpowder and then attached to a long stick. The ignition of the gunpowder increased the pressure inside the hollow tube and forced some of the hot gas and smoke out through the open end. As governed by the law of conservation of momentum, this creates thrust to propel the rocket in the direction of the capped end of the tube, with the long stick acting as a primitive guidance system, very much reminiscent of the firework “rockets” we use today.

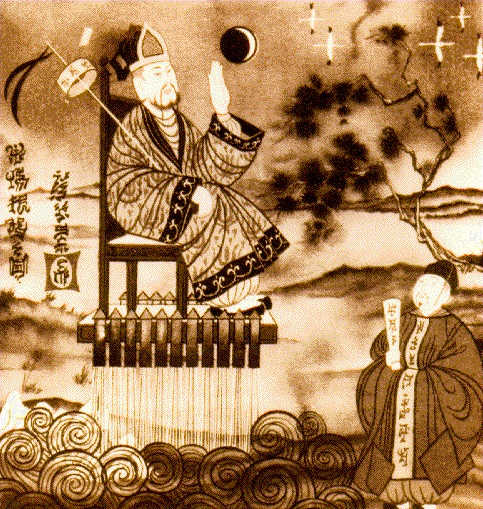

According to a Chinese legend, Wan Hu, a local official during the 16th century Ming dynasty, constructed a chair with 47 gunpowder bamboo rockets attached, and in some versions of the legend supposedly fitted kite wings as well. The rocket chair was launched by igniting all 47 bamboo rockets simultaneously, and apparently, after the commotion was over, Wan Hu was gone. Some say he made it into space, and is now the “Man in the Moon”. Most likely, Wan Hu suffered the first ever launch pad failure.

One theory is that rockets were brought to Europe via the 13th century Mongol conquests. In England, Roger Bacon developed a more powerful gunpowder (75% saltpetre, 15% carbon and 10% sulfur) that increased the range of rockets, while Jean Froissart added a launch pad by launching rockets through tubes to improve aiming accuracy. By the Renaissance, the use of rockets for weaponry fell out of fashion and experimentation with fireworks increased instead. In the late 16th century, a German tinkerer, Johann Schmidlap, experimented with staged rockets, an idea that is the basis for all modern rockets. Schmidlap fitted a smaller second-stage rocket on top of a larger first-stage rocket, and once the first stage burned out, the second stage continued to propel the rocket to higher altitudes. At about the same time, Kazimierz Siemienowicz, a Polish-Lithuanian commander in the Polish Army, published a manuscript that included a design for multi-stage rockets and delta-wing stabilisers that were intended to replace the long rods then acting as stabilisers.

The scientific method meets rocketry

The scientific groundwork of rocketry was laid during the Enlightenment by none other than Sir Isaac Newton. His three laws of motion,

1) In a particular reference frame, a body will stay in a state of constant velocity (moving or at rest) unless a net force is acting on the body

2) The net force acting on a body causes an acceleration that is proportional to the force and inversely proportional to the body’s inertia (mass), i.e. F=ma

3) A force exerted by one body on another induces an equal and opposite reaction force on the first body

are known to every student of basic physics. In fact, these three laws were probably intuitively understood by early rocket designers, but by formalising the principles, they were consciously being used as design guidelines. The first law explains why rockets move at all. Without creating propulsive thrust the rocket will remain stationary. The second quantifies the amount of thrust produced by a rocket at a specific instant in time, i.e. for a specific mass $M$. (Note, Newton’s second law is only valid for constant mass systems and is therefore not equivalent to the conservation of momentum approach described above. When mass varies, an equation that explicitly accounts for the changing mass has to be used.) The third law explains that due to the expulsion of mass, a thrusting force is produced on the rocket in reaction.

In the 1720s, at around the time of Newton’s death, researchers in the Netherlands, Germany and Russia started to use Newton’s laws as tools in the design of rockets. The Dutch professor Willem Gravesande built rocket-propelled cars by forcing steam through a nozzle. In Germany and Russia rocket designers started to experiment with larger rockets. These rockets were powerful enough that the hot exhaust flames burnt deep holes into the ground before launching. The British colonial wars of 1792 and 1799 saw the use of Indian rocket fire against the British army. Hyder Ali and his son Tipu Sultan, the rulers of the Kingdom of Mysore in India, developed the first iron-cased rockets in 1792 and then used them against the British in the Anglo-Mysore Wars.

Casing the propellant in iron, which extended range and thrust, was more advanced technology than anything the British had seen until then, and inspired by this technology, the British Colonel William Congreve began to design his own rocket for the British forces. Congreve developed a new propellant mixture and fitted an iron tube with a conical nose to improve aerodynamics. Congreve’s rockets had an operational range of up to 5 km and were successfully used by the British in the Napoleonic Wars and launched from ships to attack Fort McHenry in the War of 1812. Congreve created both carbine ball-filled rockets to be used against land targets, and incendiary rockets to be used against ships. However, even Congreve’s rockets could not significantly improve on the main shortcoming of rockets: accuracy.

At the time, the effectiveness of rockets as a weapon lay not in their accuracy or explosive power, but rather in the sheer number that could be fired simultaneously at the enemy. The Congreve rockets had managed some form of basic attitude control by attaching a long stick to the explosive, but the rockets had a tendency to veer sharply off course. In 1844, a British designer, William Hale, developed spin stabilisation, now commonly used in gun barrels, which removed the need for the rocket stick. William Hale forced the escaping exhaust gases at the rear of the rocket to impinge on small vanes, causing the rocket to spin and stabilise (the same reason that a gyroscope remains upright when spun on a table top). The use of rockets in war soon took a back seat once again when the Prussian army developed the breech-loading cannon with exploding warheads that proved far superior to the best rockets.

The era of modern rocketry

Soon, new applications for rockets were being imagined. Jules Verne, always the visionary, put the dream of space flight into words in his science-fiction novel “De la Terre à la Lune” (From the Earth to the Moon), in which a projectile, named Columbiad, carrying three passengers is shot at the moon using a giant cannon. The Russian schoolteacher Konstantin Tsiolkovsky (of rocket equation fame) proposed the idea of using rockets as a vehicle for space exploration but acknowledged that achieving such a feat would require significant developments in the range of rockets. Tsiolkovsky understood that the speed and range of rockets was limited by the exhaust velocity of the propellant gases. In a 1903 report, “Research into Interplanetary Space by Means of Rocket Power”, he suggested the use of liquid-propellants and formalised the rocket equation derived above, relating the rocket engine exhaust velocity to the change in velocity of the rocket itself (now known as the Tsiolkovsky rocket equation in his honour, although it had already been discovered previously).

Tsiolkovsky also advocated the development of orbital space stations, solar energy and the colonisation of the Solar System. One of his quotes is particularly prescient considering Elon Musk’s plans to colonise Mars:

“The Earth is the cradle of humanity, but one cannot live in the cradle forever” — In a letter written by Tsiolkovsky in 1911.

The American scientist Robert H. Goddard, now known as the father of modern rocketry, was equally interested in extending the range of rockets, especially reaching higher altitudes than the gas balloons used at the time. In 1919 he published a short manuscript entitled “A Method of Reaching Extreme Altitudes” that summarised his mathematical analysis and practical experiments in designing high altitude rockets. Goddard proposed three ways of improving current solid-fuel technology. First, combustion should be contained to a small chamber such that the fuel container would be subjected to much lower pressure. Second, Goddard advocated the use of multi-stage rockets to extend their range, and third, he suggested the use of a supersonic de Laval nozzle to improve the exhaust speed of the hot gases.

Goddard started to experiment with solid-fuel rockets, trying various different compounds and measuring the velocity of the exhaust gases. As a result of this work, Goddard was convinced of Tsiolkovsky’s early premonitions that a liquid-propellant would work better. The problem that Goddard faced was that liquid-propellant rockets were an entirely new field of research, no one had ever built one, and the system required was much more complex than for a solid-fuelled rocket. Such a rocket would need separate tanks and pumps for the fuel and oxidiser, a combustion chamber to combine and ignite the two, and a turbine to drive the pumps (much like the turbine in a jet engine drives the compressor at the front). Goddard also added a de Laval nozzle which cooled the hot exhaust gases into a hypersonic, highly directed jet, more than doubling the thrust and increasing engine efficiency from 2% to 64%! Despite these technical challenges, Goddard designed the first successful liquid-fuelled rocket, propelled by a combination of gasoline as fuel and liquid oxygen as oxidiser, and tested it on March 16, 1926. The rocket remained lit for 2.5 seconds and reached an altitude of 12.5 meters. Just like the first 40 yard flight of the Wright brothers in 1903, this feat seems unimpressive by today’s standards, but Goddard’s achievements put rocketry on an exponential growth curve that led to radical improvements over the next 40 years. Goddard himself continued to innovate; his rockets flew to higher and higher altitudes, he added a gyroscope system for flight control and introduced parachute recovery systems.

On the other side of the Atlantic, German scientists were beginning to play a major role in the development of rockets. Inspired by Hermann Oberth’s ideas on rocket travel, the mathematics of spaceflight and the practical design of rockets published in his book “Die Rakete zu den Planetenräumen” (The Rocket to Space), a number of rocket societies and research institutes were founded in Germany. The German bicycle and car manufacturer Opel (now part of GM) began developing rocket powered cars, and in 1928 Fritz von Opel drove the Opel-RAK.1 on a racetrack. In 1929 this design was extended to the Opel-Sander RAK 1-airplane, which crashed during its first flight in Frankfurt. In the Soviet Union, the Gas Dynamics Laboratory in Leningrad under the directorship of Valentin Glushko built more than 100 different engine designs, experimenting with different fuel injection techniques.

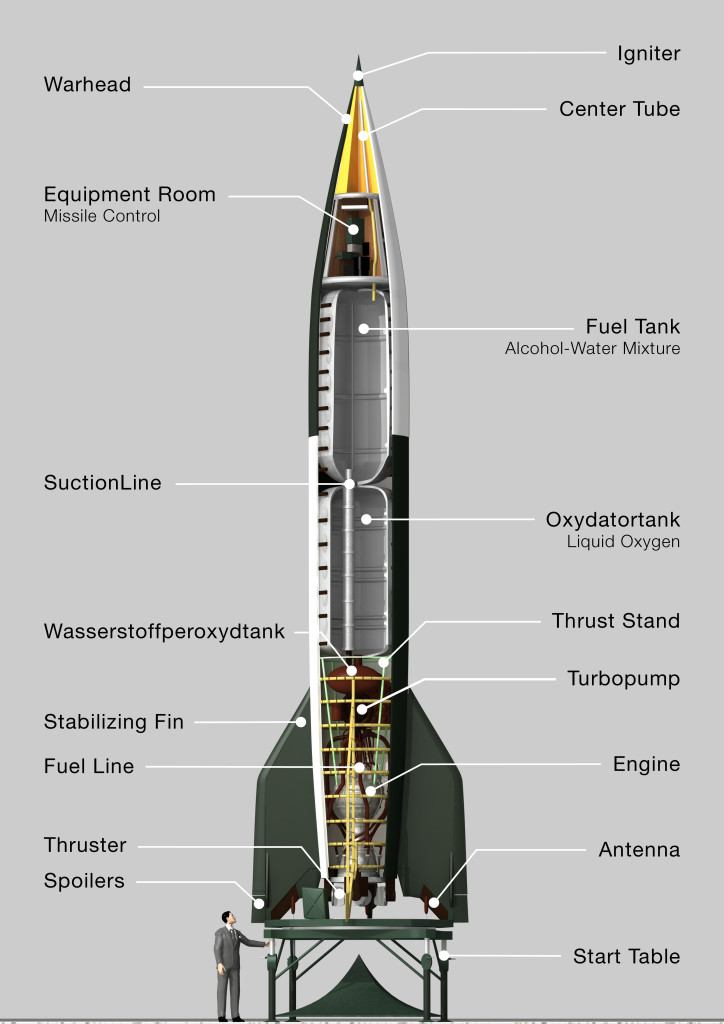

Under the directorship of Wernher von Braun and Walter Dornberger, the Verein für Raumschiffahrt or Society for Space Travel played a pivotal role in the development of the Vergeltungswaffe 2, also known as the V-2 rocket, the most advanced rocket of its time. The V-2 rocket burned a mixture of alcohol as fuel and liquid oxygen as oxidiser, and it achieved great amounts of thrust by considerably improving the mass flow rate of fuel to about 150 kg (330 lb) per second. The V-2 featured much of the technology we see on rockets today, such as turbo pumps and guidance systems, and due to its range of around 300 km (190 miles), the V-2 could be launched from the shores of the Baltic to bomb London during WWII. The 1000 kg (2200 lb) explosive warhead fitted in the tip of the V-2 was capable of devastating entire city blocks, but still lacked the accuracy to reliably hit specific targets. Towards the end of WWII, German scientists were already planning much larger rockets, today known as Intercontinental Ballistic Missiles (ICBMs), that could be used to attack the United States, and were strapping rockets to aircraft either for powering them or for vertical take-off.

With the fall of the Third Reich in May 1945 a lot of this technology fell into the hands of the Allies. The Allies’ rocket program was much less sophisticated, so a race ensued to capture as much of the German technology as possible. The Americans alone captured 300 train loads of V-2 rocket parts and shipped them back to the United States. Furthermore, the most prominent of the German rocket scientists emigrated to the United States, partly due to the much better opportunities to develop rocketry there, and partly to escape the repercussions of having played a role in the Nazi war machine. The V-2 essentially evolved into the American Redstone rocket which was used during the Mercury project.

The Space Race – to the moon and beyond

After WWII both the United States and the Soviet Union began heavily funding research into ICBMs, partly because these had the potential to carry nuclear warheads over long distances, and partly due to the allure of being the first to travel to space. In 1948, the US Army combined a captured V-2 rocket with a WAC Corporal rocket to build the largest two-stage rocket to be launched in the United States. This two-stage rocket was known as the “Bumper-WAC”, and over the course of six flights reached a peak altitude of 400 kilometres (250 miles), pretty much exactly the altitude at which the International Space Station (ISS) orbits today.

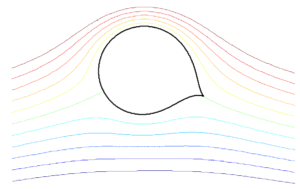

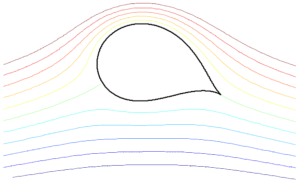

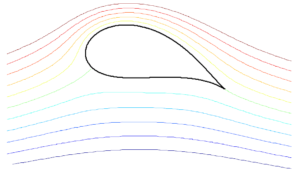

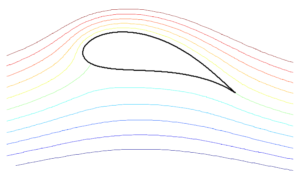

Despite these developments the Soviets were the first to put a man-made object, i.e. an artificial satellite, into orbit. Under the leadership of chief designer Sergei Korolev, the V-2 was copied and then improved upon in the R-1, R-2 and R-5 missiles. At the turn of the 1950s the German designs were abandoned and replaced with the inventions of Aleksei Mikhailovich Isaev, which were used as the basis for the first Soviet ICBM, the R-7. The R-7 was further developed into the Vostok rocket which launched the first satellite, Sputnik I, into orbit on October 4, 1957, a mere 12 years after the end of WWII. The launch of Sputnik I was the first major news story of the space race. Only a month later the Soviets successfully launched Sputnik II into orbit with the dog Laika onboard.

One of the problems that the Soviets did not solve was atmospheric re-entry. Any object wishing to orbit a planet requires enough speed such that the gravitational attraction towards the planet is offset by the curvature of the planet’s surface. However, during re-entry, this speed causes the orbiting body to literally smash into the atmosphere, creating incredible amounts of heat. In 1951, H.J. Allen and A.J. Eggers discovered that a high-drag, blunted shape, not a low-drag tear drop, counter-intuitively minimises the re-entry effects by redirecting 99% of the energy into the surrounding atmosphere. Allen and Eggers’ findings were published in 1958 and were used in the Mercury, Gemini, Apollo and Soyuz manned space capsules. This design was later improved upon in the Space Shuttle, whereby a shock wave was induced on the heat shield of the Space Shuttle via an extremely high angle of attack, in order to deflect most of the heat away from the heat shield.

The United States’ first satellite, Explorer I, would not follow until January 31, 1958. Explorer I weighed about 30 times less than the Sputnik II satellite, but the Geiger radiation counters on the satellite were used to make the first scientific discovery in outer space, the Van Allen Radiation Belts. Explorer I had originally been developed by the US Army, and in October 1958 the National Aeronautics and Space Administration (NASA), the successor to the National Advisory Committee for Aeronautics (NACA), was officially formed to oversee the space program. Simultaneously, the Soviets developed the Vostok, Soyuz and Proton family of rockets from the original R-7 ICBM to be used for the human spaceflight programme. In fact, the Soyuz rocket is still being used today, is the most frequently used and reliable rocket system in history, and after the Space Shuttle’s retirement in 2011 became the only viable means of transport to the ISS. Similarly, the Proton rocket, also developed in the 1960s, is still being used to haul heavier cargo into low-Earth orbit.

Shortly after these initial satellite launches, NASA developed the experimental X-15 air-launched rocket-propelled aircraft, which, in 199 flights between 1959 and 1968, broke numerous flying records, including new records for speed (7,274 km/h or 4,520 mph) and altitude (108 km or 67 miles). The X-15 also provided NASA with data regarding the optimal re-entry angles from space into the atmosphere.

The next milestone in the space race once again belonged to the Soviets. On April 12, 1961, the cosmonaut Yuri Gagarin became the first human to travel into space, and as a result became an international celebrity. Over a period of just under two hours, Gagarin orbited the Earth inside a Vostok 1 space capsule at around 300 km (190 miles) altitude, and after re-entry into the atmosphere ejected at an altitude of 6 km (20,000 feet) and parachuted to the ground. At this point Gagarin became the most famous Soviet on the planet, travelling around the world as a beacon of Soviet success and superiority over the West.

Shortly after Gagarin’s successful flight, the American astronaut Alan Shepard reached a suborbital altitude of 187 km (116 miles) in the Freedom 7 Mercury capsule. The Redstone missile that was used to launch Shepard from Cape Canaveral did not quite have the power to send the Mercury capsule into orbit, and had suffered a series of embarrassing failures prior to the launch, increasing the pressure on the US rocket engineers. However, less than three weeks after Shepard’s flight, President John F. Kennedy delivered the now famous words before a joint session of Congress

“This nation should commit itself to achieving the goal, before this decade is out, of landing a man on the Moon and returning him safely to the Earth.”

Despite the bold nature of this challenge, NASA’s Mercury project was already well underway in developing the technology to put the first human on the moon. In February 1962, the more powerful Atlas missile propelled John Glenn into orbit, and thereby restored some form of parity between the USA and the Soviet Union. The last of the Mercury flights took place in 1963, with Gordon Cooper orbiting the Earth for nearly 1.5 days. The family of Atlas rockets remains one of the most successful to this day. Apart from launching a number of astronauts into space during the Mercury project, the Atlas has been used for bringing commercial, scientific and military satellites into orbit.

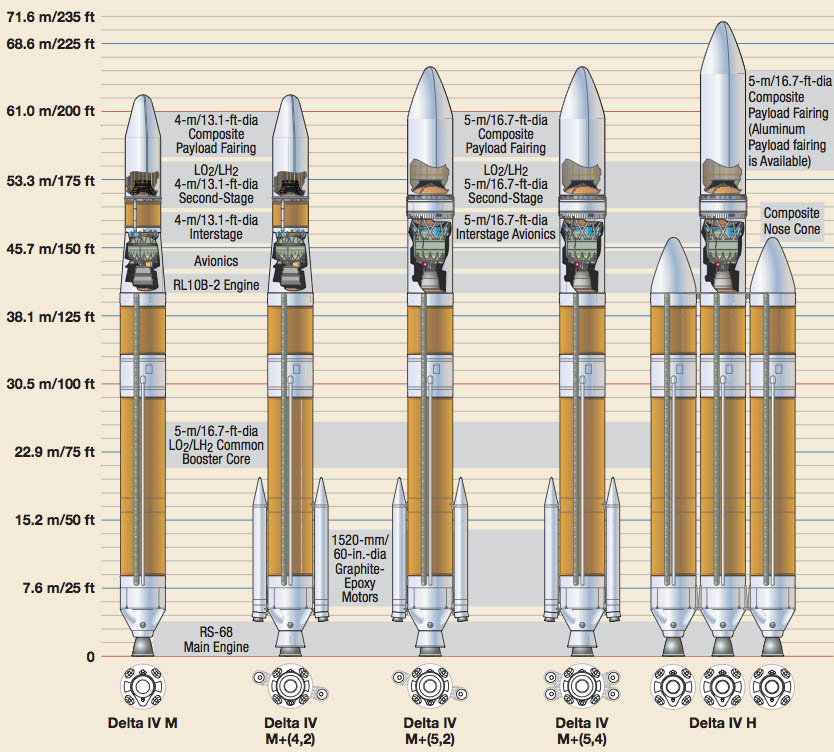

Following the Mercury missions, the Gemini project made significant strides towards a successful Moon flight. The Gemini capsule was propelled by an even more powerful ICBM, the Titan, and allowed astronauts to remain in space for up to two weeks, during which astronauts had the first experience with space-walking, and rendezvous and docking procedures with the Gemini spacecraft. An incredible ten Gemini missions were flown throughout 1965-66. The high success rate of the missions was testament to the improving reliability of NASA’s rockets and spacecraft, and allowed NASA engineers to collect invaluable data for the coming Apollo Moon missions. The Titan missile itself remains one of the most successful and long-lived rockets (1959-2005), carrying the Viking spacecraft to Mars, the Voyager probes to the outer solar system, and multiple heavy satellites into orbit. At about the same time, around the early 1960s, an entire family of versatile rockets, the Delta family, was being developed. The Delta family became the workhorse of the US space programme, achieving more than 300 launches with a reliability greater than 95%! The versatility of the Delta family was based on the ability to tailor the lifting capability, using different interchangeable stages and external boosters that could be added for heavier lifting.

At this point, the tide had mostly turned. The United States had been off to a slow start but had used the data from their early failures to improve the design and reliability of their rockets. The Soviets, while being more successful initially, could not achieve the same rate of launch success and this significantly hampered their efforts during the upcoming race to the moon.

The Delta 4 rocket family (Photo Credit: United Launch Alliance)

To get to the moon, a much more powerful rocket than the Titan or Delta rockets would be needed. This now famous rocket, the 110.6 m (363 feet) tall Saturn V, consisted of three separate main rocket stages; the Apollo capsule with a small fourth propulsion stage for the return trip; and a two-staged lunar lander, with one stage for descending onto the Moon’s surface and the other for lifting back off the Moon. The Saturn V was largely the brainchild and crowning achievement of Wernher von Braun, the original lead developer of the V-2 rocket in WWII Germany, with a capability of launching 140,000 kg (310,000 lb) into low-Earth orbit and 48,600 kg (107,100 lb) to the Moon. This launch capability dwarfed all previous rockets and to this day the Saturn V remains the tallest, heaviest and most powerful rocket ever built to operational flying status (last on the chart at the start of the piece). NASA’s efforts reached their glorious climax with the Apollo 11 mission on July 20, 1969 when astronaut Neil Armstrong became the first man to set foot on the Moon, a mere 11.5 years after the first successful launch of the Explorer I satellite. The Apollo 11 mission became the first of six successful Moon landings throughout the years 1969-1972. A smaller version of the moon rocket, the Saturn IB, was also developed and used for some of the early Apollo test missions and later to transport three crews to the US space station Skylab.

The Space Shuttle

NASA’s final major innovation was the Space Shuttle. The idea behind the Space Shuttle was to design a reusable rocket system for carrying crew and payload into low-Earth orbit. The rationale behind this idea is that manufacturing the rocket hardware is a major contributor to the overall launch costs, and that allowing different stages to be destroyed after launch is not cost effective. Imagine having to throw away your Boeing 747 or Airbus A380 every time you fly from London to New York. In this case ticket prices would not be where they are now. The Shuttle consisted of a winged airplane-looking spacecraft that was boosted into orbit by liquid-propellant engines on the Shuttle itself, fuelled from a massive orange external tank, and by two solid rocket boosters attached to either side. After launch, the solid rocket boosters and external fuel tank were jettisoned, and the boosters were recovered for future use. At the end of a Shuttle mission, the orbiter re-entered Earth’s atmosphere and then followed a tortuous zig-zag course, gliding unpowered to land on a runway like any other aircraft. NASA promised that the Shuttle would reduce launch costs by 90%. However, crash landings of the solid rocket boosters in water often damaged them beyond repair, and the effort required to service the orbiter heat shield, inspecting each of the 24,300 unique tiles separately, ultimately made the cost of putting a kilogram of payload in orbit greater than for the Saturn V rocket that preceded it. The five Shuttles, the Endeavour, Discovery, Challenger, Columbia and Atlantis, completed 135 missions between 1981 and 2011, with the tragic loss of the Challenger in 1986 and the Columbia in 2003.

While the Shuttle facilitated the construction of the International Space Station and the installation of the Hubble space telescope in orbit, the ultimate goal of economically sustainable space travel was never achieved. However, this goal is now on the agenda of commercial space companies such as SpaceX, Reaction Engines, Blue Origin, Rocket Lab and the Sierra Nevada Corporation.

New approaches

After the demise of the Space Shuttle programme in 2011, the United States’ capability of launching humans into space was heavily restricted. NASA is currently working on a new Space Launch System (SLS), the aim of which is to extend NASA’s range beyond low-Earth orbit and further out into the Solar system. Although the SLS is being designed and assembled by NASA, other partners such as Boeing, United Launch Alliance, Orbital ATK and Aerojet Rocketdyne are co-developing individual components. The SLS specification as it stands would make it the most powerful rocket in history, and the SLS is therefore being developed in two stages (reminiscent of the Saturn IB and Saturn V rockets). First, a rocket with a payload capability of 70 metric tons (154,000 lb) is being developed from components of previous rockets. The goal of this heritage SLS is to conduct two lunar flybys with the Orion spacecraft, one unmanned and the other with a crew. Second, a more advanced version of the SLS with a payload capability of 130 metric tons (290,000 lb) to low-Earth orbit, about the same payload capacity as the Saturn V rocket but with 20% more thrust, is intended to carry scientific equipment, cargo and the manned Orion capsule into deep space. The first flight for an unmanned Orion capsule on a trip around the Moon is planned for 2018, while manned missions are expected by 2021-2023. By 2026 NASA plans to send a manned Orion capsule to an asteroid previously placed into lunar orbit by a robotic “capture-and-place” mission.

However, with the commercialisation of space travel, new entrants are now working on even more daunting goals. The SpaceX Falcon 9 rocket has proven to be a very reliable launch system (with a current success rate of 20 out of 22 launches). Furthermore, SpaceX was the first private company to successfully launch and recover an orbital spacecraft, the Dragon capsule, which regularly delivers supplies and new scientific equipment to the ISS. Currently, the US relies on the Russian Soyuz rocket to bring astronauts to the ISS, but in the near future manned missions are planned with the Dragon capsule. The Falcon 9 rocket is a two-stage-to-orbit launch vehicle whose first stage is powered by nine SpaceX Merlin rocket engines fuelled by liquid oxygen and kerosene, with a payload capacity of 13 metric tons (29,000 lb) into low-Earth orbit. There have been three versions of the Falcon 9: v1.0 (retired), v1.1 (retired) and most recently the partially reusable full thrust version, which on December 22, 2015 used propulsive recovery to land the first stage safely at Cape Canaveral. Efforts are now being made to extend the landing capability from land to sea barges. Furthermore, the Falcon Heavy with 27 Merlin engines (a central Falcon 9 rocket with two Falcon 9 first stages strapped to the sides) is expected to extend SpaceX’s lifting capacity to 53 metric tons into low-Earth orbit, making it the second most powerful rocket in use after NASA’s SLS. First flights of the Falcon Heavy are expected for late this year (2016). Of course, the ultimate goal of SpaceX’s CEO Elon Musk is to make humans a multi-planetary species, and to achieve this he is planning to send a colony of a million humans to Mars via the Mars Colonial Transporter, a space launch system of reusable rocket engines, launch vehicles and space capsules. SpaceX’s Falcon 9 rocket already has the lowest launch costs at $60 million per launch, but reliable re-usability should bring these costs down over the next decade such that a flight ticket to Mars could become enticing for at least a million of the richest people on Earth (or perhaps we could sell spots on “Mars – A Reality TV show”).

Blue Origin, the rocket company of Amazon founder Jeff Bezos, is taking a similar approach, using vertical takeoff and landing to achieve re-usability and lower launch costs. The company is on an incremental trajectory to extend its capabilities from suborbital to orbital flight, led by its motto “Gradatim Ferociter” (Latin for step by step, ferociously). Blue Origin’s New Shepard rocket underwent its first test flight in April 2015. In November 2015 the rocket landed successfully after a suborbital flight to 100 km (330,000 ft) altitude, and this was extended to 101 km (333,000 ft) in January 2016. Blue Origin hopes to extend its capabilities to human spaceflight by 2018.

Reaction Engines is a British aerospace company conducting research into space propulsion systems, focused on the Skylon reusable single-stage-to-orbit spaceplane. The Skylon would be powered by the SABRE engine, a rocket-based combined cycle engine, i.e. a combination of an air-breathing jet engine and a rocket engine in which both modes share the same flow path, and would be reusable for about 200 flights. Reaction Engines believes that with this system the cost of carrying one kg (2.2 lb) of payload into low-Earth orbit can be reduced from the $1,500 today (early 2016) to around $900. The hydrogen-fuelled Skylon is designed to take off from a purpose-built runway and accelerate to Mach 5 at 28.5 km (93,500 feet) altitude using the atmosphere’s oxygen as oxidiser. This air-breathing part of the SABRE engine works on the same principles as a jet engine. A turbo-compressor is used to raise the pressure of the incoming atmospheric air, and is preceded by a pre-cooler that cools the hot air impinging on the engine at hypersonic speeds. The compressed air is fed into a rocket combustion chamber where it is burned with the stored liquid hydrogen. As in a standard jet engine, a high pressure ratio is crucial to pack as much of the oxidiser as possible into the combustion chamber and increase the thrust of the engine. As the natural source of oxygen runs out at high altitude, the engines switch to the internally stored liquid oxygen supplies, transforming the engine into a closed-cycle rocket and propelling the Skylon spacecraft into orbit. The theoretical advantages of the SABRE engine are its high fuel efficiency and low mass, which facilitate the single-stage-to-orbit approach. Reminiscent of the Shuttle, after deploying its payload of up to 15 tons (33,000 lb), the Skylon spacecraft would re-enter the atmosphere protected by a heat shield and land on a runway. The first ground tests of the SABRE engine are planned for 2019 and the first unmanned test flights are expected for 2025.

Sierra Nevada Corporation is working alongside NASA to develop the Dream Chaser spacecraft for transporting cargo and up to seven people to low-Earth orbit. The Dream Chaser is designed to launch on top of the Atlas V rocket (in place of the nose cone) and land conventionally by gliding onto a runway. The Dream Chaser looks a lot like a smaller version of the Space Shuttle, so intuitively one would expect the same cost inefficiencies as for the Shuttle. However, the engineers at Sierra Nevada say that two changes have been made to the Dream Chaser that should reduce the maintenance costs. First, the thrusters used for attitude control are ethanol-based, and therefore non-toxic and a lot less volatile than the hydrazine-based thrusters used by the Shuttle. This should allow maintenance of the Dream Chaser to begin immediately after landing and reduce the time between flights. Second, the thermal protection system is based on an ablative tile that can survive multiple flights and can be replaced in larger groups rather than tile-by-tile. The Dream Chaser is planned to undergo orbital test flights in November 2016.

Finally, the New Zealand-based firm Rocket Lab is developing the all-carbon composite liquid-fuelled Electron rocket with a payload capability to low-Earth orbit of 110 kg (240 lb). Rocket Lab is thus focusing on high-frequency rocket launches to transport low-mass payloads, e.g. nano satellites, into orbit. The goal of Rocket Lab is to make access to space frequent and affordable, such that the rapidly evolving small-scale satellites that provide us with scientific measurements and high-speed internet can be launched reliably and quickly. The Rocket Lab system is designed to cost $5 million per launch at 100 launches a year and to use less fuel than a flight on a Boeing 737 from San Francisco to Los Angeles. A special challenge that Rocket Lab is facing is the development of all-carbon composite liquid oxygen tanks to provide the mass efficiency required for this high fuel efficiency. To date the containment of cryogenic (super cold) liquid propellants, such as liquid hydrogen and liquid oxygen, is still the domain of metallic alloys. Concerns still exist about potential leaks due to micro cracks developing in the resin of the composite at cryogenic temperatures. In composites, there is a mismatch between the thermal expansion coefficients of the reinforcing fibre and the resin, which induces thermal stresses as the composite is cooled to cryogenic temperatures from its high temperature/high pressure curing process. The temperature and pressure cycles during the liquid oxygen/hydrogen fill-and-drain procedures then induce extra fatigue loading that can lead to cracks permeating through the structure, through which hydrogen or oxygen molecules can easily pass. Such leaks pose a real explosion hazard.

Where do we go from here?

As we have seen, over the last 2000 years rockets have evolved from simple toys and military weapons to complex machines capable of transporting humans into space. To date, rockets are the only viable gateway to places beyond Earth. Furthermore, we have seen that the development of rockets has not always followed a uni-directional path towards improvement. Our capability to send heavier and heavier payloads into space peaked with the development of the Saturn V rocket. This great technological leap was fuelled, to a large extent, by the competition between the Soviet Union and the United States. Unprecedented funds were available to rocket scientists on both sides during the 1950s-1970s. Furthermore, dreamers and visionaries such as Jules Verne, Konstantin Tsiolkovsky and Gene Roddenberry sparked the imagination of the public and garnered support for the space programs. After the 2003 Columbia disaster, public support for spending taxpayer money on often over-budget programs understandably waned. However, the successes of the new commercial companies, their fierce competition and their visionary goals of colonising Mars are once again inspiring a younger generation. This is, once again, an exciting time for rocketry.

Sources

Tom Benson (2014). Brief History of Rockets. NASA URL: https://www.grc.nasa.gov/www/k-12/TRC/Rockets/history_of_rockets.html

NASA. A Pictorial History of Rockets. URL: https://www.nasa.gov/pdf/153410main_Rockets_History.pdf

John Partridge is the founder of the deep-sea instrumentation company Sonardyne, and also graduated from the University of Bristol, my alma mater, with a degree in Mechanical Engineering in 1962. Since its founding in 1971, Sonardyne has developed into one of the leading instrumentation companies in oceanography, oil drilling, underwater monitoring and tsunami warning systems.

During my PhD graduation ceremony last week, John Partridge received an honorary doctorate in engineering for his contributions to the field. His acceptance speech was shorter than most but packed a punch. Among other things, he discussed the current state of engineering progress, the three essential characteristics an engineer should possess and his interests in engineering education.