Martin Eichenhofer is the CEO & co-founder of 9T Labs, a company that was spun out of ETH Zürich in Switzerland. The company specialises in providing software solutions and manufacturing equipment for producing high-quality and high-performance composite materials using 3D printing.

Martin Eichenhofer is the CEO & co-founder of 9T Labs, a company that was spun out of ETH Zürich in Switzerland. The company specialises in providing software solutions and manufacturing equipment for producing high-quality and high-performance composite materials using 3D printing.

By marrying the worlds of composite materials and 3D printing, 9T Labs is taking advantage of the superior material properties of composite materials and combining these with the geometric fidelity facilitated by 3D printing. As a result, components that were previously unfeasible to be manufactured using composite materials, either from a technical or cost perspective, are now within the realm of the possible.

What is unique about 9T Labs is that the company combines their hardware for 3D printing composite parts with a bespoke optimisation software in order to maximise a component’s performance, both in terms of structural design and manufacturing quality. Furthermore, it has been historically difficult to print continuous fibre composites at high quality with a low void content. 9T Labs, however, has patented a process that allows printing at a void content of below 1%, which competes with conventionally manufactured composites.

In this episode of the Aerospace Engineering Podcast, Martin and I discuss:

- his background as an engineer and how his PhD research led to 9T Labs

- the challenges and benefits of 3D printing composite materials

- 9T Labs’ unique approach to 3D printing composite materials

- some of the applications the company is currently working on

- and much, much more.

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- 9T Labs webpage, LinkedIn

- 9T Labs profile | Composites World

- Video: 3D printing for electric cars

- Helicopter door hinge case study

- ETH Zürich research lab

Chris Voorhees is the founder and president of First Mode, a Seattle-based company that is designing and building technology for extreme environments off and on planet Earth.

Chris Voorhees is the founder and president of First Mode, a Seattle-based company that is designing and building technology for extreme environments off and on planet Earth.

Chris has decades of experience in the implementation of robotic systems for the exploration of deep space. His notable experience includes his work as a mobility systems engineer for NASA’s Spirit and Opportunity rovers and lead mechanical engineer for NASA’s Curiosity rover. For his efforts, Chris received NASA’s Exceptional Achievement and Exceptional Engineering Achievement medals.

Today, Chris oversees the design, development, and deployment of engineered solutions for missions around the globe and throughout the solar system. First Mode is also focusing on significant problems on Earth including the challenging issues of sustainability for the natural resources sector.

In this episode of the Aerospace Engineering Podcast, Chris and I talk about:

- his background in engineering, including his time at NASA’s Jet Propulsion Laboratory

- his past work on Mars rovers

- why we should go back to the Moon

- the space projects First Mode is currently involved with

- and First Mode’s growing engagement in the hydrogen sector

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- First Mode webpage, Twitter, LinkedIn

- First Mode blog

- Curiosity rover, Spirit & Opportunity rovers

- NASA Mars Perseverance rover

- NASA Psyche mission

- NASA Artemis Moon program

- Back to the Moon

- Chris’ NPR interview

- Hydrogen-powered mining trucks

Carl Copeland is the founder of Möbius Aero, an electric air race team, and MμZ Motion, a developer of custom, high-performance electric motors. Carl has built various engineering teams and led innovation in the fields of IT, mechanical, magnetic, and electrical design. He has founded four companies and holds over 25 patents, and his most recent innovation, the Field Modulation Motion System, is a novel electric motor design that is significantly lighter and smaller than established electric motors of similar power and torque ratings.

Carl Copeland is the founder of Möbius Aero, an electric air race team, and MμZ Motion, a developer of custom, high-performance electric motors. Carl has built various engineering teams and led innovation in the fields of IT, mechanical, magnetic, and electrical design. He has founded four companies and holds over 25 patents, and his most recent innovation, the Field Modulation Motion System, is a novel electric motor design that is significantly lighter and smaller than established electric motors of similar power and torque ratings.

The Field Modulation Motion System achieves its high performance by using 18-phase field modulation rather than the three-phase modulation used in standard motors, essentially emulating six separate three-phase motors attached to a single shaft. Carl is putting his new engine design to the test in a new air racing series for electric aircraft known as Air Race E.

In contrast to typical air racing series, in Air Race E aircraft race against each other on a course rather than flying isolated time trials. In the past, air races have been an invaluable means of developing aerospace technology in a competitive setting and Air Race E is re-awakening the spirit of competition by launching the first fully electric airplane race series. In this episode of the Aerospace Engineering Podcast, Carl and I talk about:

- his unique and auto-didactic background in engineering

- his goal of finding practical solutions to humanity’s problems

- the Air Race E competition and the origin story of Carl’s racing team Möbius Aero

- the technical details and benefits of his new electric motor

- and the impact this has on airframe development

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- Möbius Aero and MμZ Motion webpage

- Möbius YouTube, Twitter, LinkedIn

- Carl speaks to Airbus about Team Möbius

- Air Race E webpage

- Talk on Air Race E | Royal Aero Society

- Electric vs combustion engines | Airbus

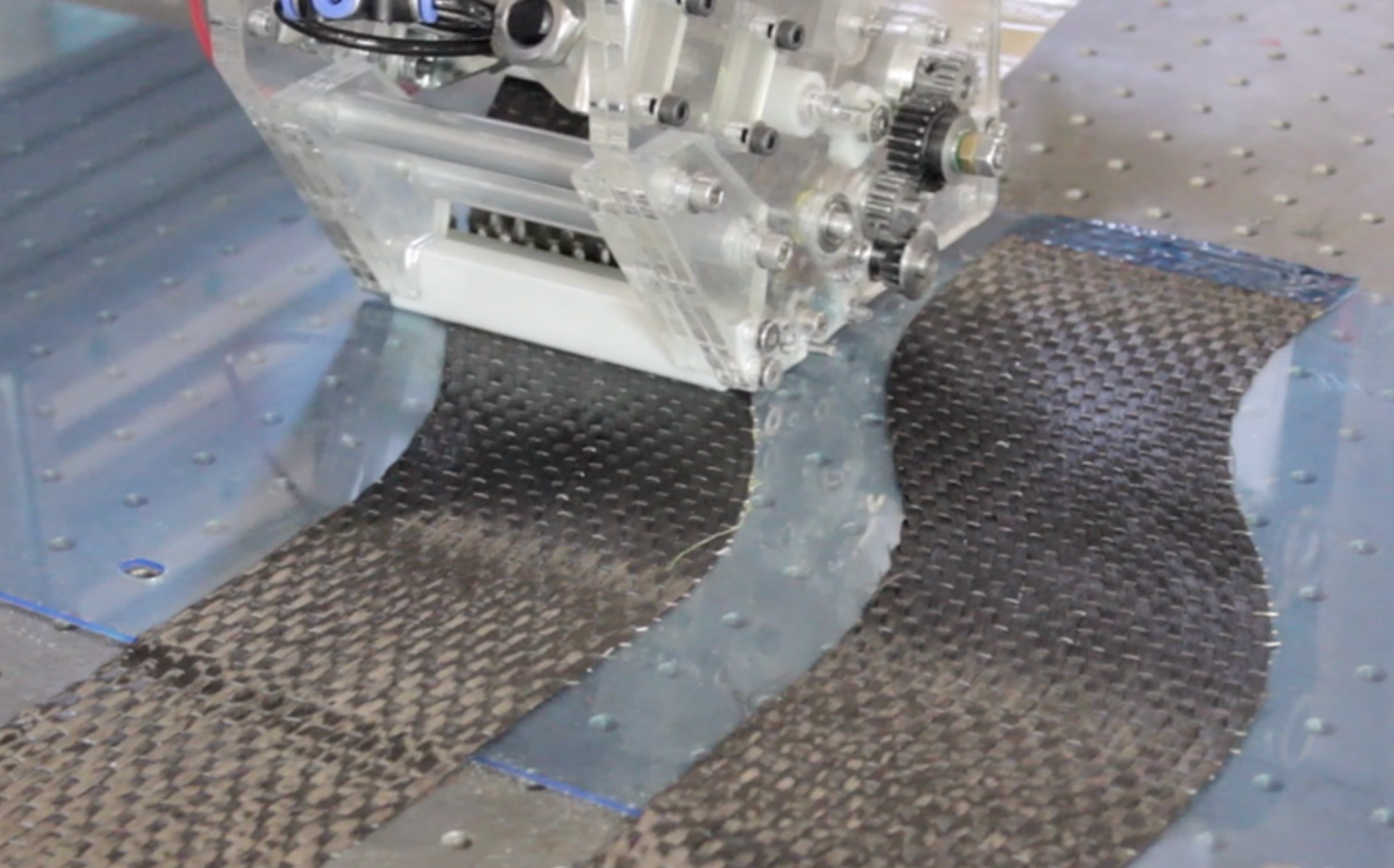

Dr Evangelos Zympeloudis is the CEO and co-founder of iCOMAT, a company based in the UK that is developing automated manufacturing equipment for tow-steered composites. Fibre-reinforced plastics, such as carbon-fibre or glass-fibre composites, hold great promise for high-performance and lightweight design due to their excellent stiffness and strength properties at low material density. Traditional fibre-reinforced plastics are manufactured using straight uni-directional fibres or with straight fibres woven into a fabric.

Dr Evangelos Zympeloudis is the CEO and co-founder of iCOMAT, a company based in the UK that is developing automated manufacturing equipment for tow-steered composites. Fibre-reinforced plastics, such as carbon-fibre or glass-fibre composites, hold great promise for high-performance and lightweight design due to their excellent stiffness and strength properties at low material density. Traditional fibre-reinforced plastics are manufactured using straight uni-directional fibres or with straight fibres woven into a fabric.

Generally speaking, a fibre-reinforced composite derives its strength by aligning the fibres with the direction of the dominant load path. The novelty of tow-steered composites is that strips of composite material, so-called fibre tows, are steered along curvilinear paths such that the fibre direction is not straight, but varies continuously from point to point. This characteristic has benefits in structural design as the reinforcing fibres can now be used to smoothly tailor stiffness and strength throughout the structure. For example, tow-steered composites can be used to curve the reinforcing fibres around windows in an aircraft fuselage in order to improve strength and facilitate net-shape manufacturing.

Generally speaking, a fibre-reinforced composite derives its strength by aligning the fibres with the direction of the dominant load path. The novelty of tow-steered composites is that strips of composite material, so-called fibre tows, are steered along curvilinear paths such that the fibre direction is not straight, but varies continuously from point to point. This characteristic has benefits in structural design as the reinforcing fibres can now be used to smoothly tailor stiffness and strength throughout the structure. For example, tow-steered composites can be used to curve the reinforcing fibres around windows in an aircraft fuselage in order to improve strength and facilitate net-shape manufacturing.

In this episode of the Aerospace Engineering Podcast, Evangelos and I talk about:

- his background as an engineer and entrepreneur

- the manufacturing challenge of making defect-free tow-steered composites

- the capabilities of iCOMAT’s rapid tow-shearing process

- the benefits of tow-steering for manufacturing cost and design

- and some of the projects iCOMAT is currently working on

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Disclosure: I currently work with iCOMAT on a number of projects and am a consultant to the company.

Selected Links from the Episode

- iCOMAT webpage, LinkedIn

- iCOMAT’s technology

- Video of the Rapid Tow-Shearing process

- Tow-steered composites overview:

Stefan Brieschenk is the Chief Operating Officer of Rocket Factory Augsburg (RFA), a company in the south of Germany that is developing a low-cost launch vehicle. RFA’s vision is to drastically reduce the cost of access to space through large-scale industrialisation of their operations and manufacturing.

Stefan Brieschenk is the Chief Operating Officer of Rocket Factory Augsburg (RFA), a company in the south of Germany that is developing a low-cost launch vehicle. RFA’s vision is to drastically reduce the cost of access to space through large-scale industrialisation of their operations and manufacturing.

Key to RFA’s design approach is a holistic performance and cost optimisation tool that has been developed in collaboration with space industry veterans MT Aerospace and OHB. This approach has led to interesting design choices. For example, the second stage tank is based on inexpensive stainless steel construction, and in places where composite materials are being used, RFA is relying on automotive grade materials that have already been used in high-volume production. In their propulsive system, however, RFA is chasing the highest performance—a closed-cycle staged combustion engine, enabled by modern manufacturing capabilities in 3D printing and which is due to be hot-fired early next year.

In this episode of the Aerospace Engineering podcast, Stefan and I talk about:

- Stefan’s passion for rocketry and hypersonic flight

- his background at Rocket Lab and MT Aerospace

- the gap between the European and US space sectors

- RFA’s launch vehicle and design approach

- and Stefan’s vision for the European space sector

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- RFA webpage, LinkedIn, Twitter

- Launch vehicle

- Partners: MT Aerospace & OHB

- Ten questions for RFA

- Staged combustion engine

- In the news:

- Launch site in Andøya

- ESA support for RFA

Marc Ausman is the co-founder and CEO of Airflow, a California-based startup that is building an electric short-haul cargo aircraft. Marc holds a commercial pilot license, and among other endeavours, was previously the Chief Strategist for Airbus’ all-electric, tilt-wing vehicle demonstrator known as Vahana. Alongside four other former Vahana team members, Marc and the team at Airflow are building an aerial logistics network to move short-haul cargo quickly and cost effectively by using unused airspace around cities.

Marc Ausman is the co-founder and CEO of Airflow, a California-based startup that is building an electric short-haul cargo aircraft. Marc holds a commercial pilot license, and among other endeavours, was previously the Chief Strategist for Airbus’ all-electric, tilt-wing vehicle demonstrator known as Vahana. Alongside four other former Vahana team members, Marc and the team at Airflow are building an aerial logistics network to move short-haul cargo quickly and cost effectively by using unused airspace around cities.

Key to Airflow’s vision is electric short takeoff and landing (eSTOL). Airflow’s eSTOL aircraft require only a few hundred feet for takeoff and landing—about the length of a football field—which means that runways can be built almost anywhere, even under existing regulations. What is more, even larger rooftops that can fit more than three conventional helipads could feasibly be used as a runway. Given the aerodynamic efficiency advantages of fixed-wing aircraft over rotary vertical take-off and landing (VTOL) aircraft, Airflow have come-up with an interesting alternative concept to many other companies in the growing urban mobility sector.

So in this episode of the Aerospace Engineering Podcast, Marc and I talk about:

- Airflow’s vision of building the urban logistics network of the future

- some of the misconceptions of eSTOL and eVTOL

- the advantages of electric powertrains beyond reducing emissions

- the technology Airflow is developing and challenges that need to be overcome

- and striking a balance between financial and engineering incentives

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

Dr John Williams is an engineer at Lumentum where he works on the extreme challenges of sub-millimetre scale photonic circuits. For the purpose of this conversation, however, we will be discussing John’s former role as a design engineer at Reaction Engines, a UK company that is developing the Synergetic Air-Breathing Rocket Engine, also known as SABRE.

Dr John Williams is an engineer at Lumentum where he works on the extreme challenges of sub-millimetre scale photonic circuits. For the purpose of this conversation, however, we will be discussing John’s former role as a design engineer at Reaction Engines, a UK company that is developing the Synergetic Air-Breathing Rocket Engine, also known as SABRE.

The vision of SABRE is to build a new hypersonic engine that can operate both as an air-breathing jet engine and as a traditional rocket. This versatility means SABRE can be used as a propulsive platform for future hypersonic aircraft or to propel space planes into orbit. Furthermore, SABRE combines the unique fuel efficiency of a jet engine with the power and high-speed ability of a rocket. Having started at Reaction Engines early on when there were only two people in the design office, and later founding his own design and manufacturing company, John has many years of high-tech experience in the aerospace sector.

In this episode of the Aerospace Engineering podcast, John and I talk about:

- his background as an aerospace engineer

- the benefits of an air-breathing rocket engine

- the particular design challenges in realising this type of engine

- and his lessons learned from high-tech development

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- Reaction Engines webpage

- The SABRE engine: REL, WIRED, Wikipedia

- Precooler test at Mach 5

- The Three Rocketeers BBC documentary

- Lecture by Alan Bond, co-founder of Reaction Engines

- Carbon nanotube composites

Dr Sanjiv Singh is a research professor at the Robotics Institute of Carnegie Mellon University and the CEO of Near Earth Autonomy. Sanjiv has more than 30 years of research experience in the field of autonomous vehicles and has spun-out multiple companies from his university research.

Dr Sanjiv Singh is a research professor at the Robotics Institute of Carnegie Mellon University and the CEO of Near Earth Autonomy. Sanjiv has more than 30 years of research experience in the field of autonomous vehicles and has spun-out multiple companies from his university research.

His current venture, Near Earth Autonomy, develops technology that allows aircraft to autonomously take-off, fly, and land safely, with or without GPS. Near Earth’s goal is to develop complete autonomous solutions that improve efficiency, performance, and safety for aircraft ranging from small drones up to full-size helicopters. The team at Near Earth was awarded the 2018 Howard Hughes Award, which recognises outstanding improvements in fundamental helicopter technology, and was also a 2017 finalist for the Collier Trophy, one of the most important aviation awards worldwide. In this episode of the Aerospace Engineering Podcast, Sanjiv and I talk about:

- his background as a researcher in the field of robotics and autonomy

- the fundamental concepts of autonomy

- the hardware and software that make it work

- the successful helicopter technology demonstrator Near Earth Autonomy has developed

- and the future of autonomous vehicles

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- Near Earth Autonomy webpage, Twitter & YouTube

- Dr Sanjiv Singh’s TEDx talk

- Near Earth collaborates with Kaman | Vertical

- Public perception of autonmous flying | WIRED

- Lidar vs cameras vs radar | WIRED

In this episode I am speaking to Aaron Daniel and Peter Shpik of Alpine Advanced Materials. Alpine Advanced Materials specialises in the design and manufacture of custom-engineered parts and products for demanding aerospace and energy applications. The company is currently commercialising a high-performance material known as HX5™, which is a thermoplastic nanocomposite originally developed by Lockheed Martin Skunk Works® over a decade of testing and validation.

In this episode I am speaking to Aaron Daniel and Peter Shpik of Alpine Advanced Materials. Alpine Advanced Materials specialises in the design and manufacture of custom-engineered parts and products for demanding aerospace and energy applications. The company is currently commercialising a high-performance material known as HX5™, which is a thermoplastic nanocomposite originally developed by Lockheed Martin Skunk Works® over a decade of testing and validation.

HX5™ was originally developed to replace aluminum at half the weight but with the same strength and stiffness. On top of that HX5™ has excellent durability in harsh environments such as in outer space, in radioactive settings or around aggressive chemicals. As a result, this new nanocomposite material is already being used on jet fighters, high-speed helicopters, UAVs, rockets, and satellites. In this episode of the aerospace engineering podcast Aaron, Peter and I talk about:

- the importance of lightweighting in the aerospace industry

- the development history of HX5™

- what exactly HX5™ is and its unique properties

- where and how HX5™ is currently being used

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- Alpine Advanced Materials webpage, Twitter & LinkedIn

- HX5™ white paper

- HX5™ case studies

- What is a nanocomposite?

In this episode I am speaking to Damian Jamroz and Grzegorz Marzec of the Polish NewSpace company SatRevolution. The company was founded in 2016 and specialises in real-time earth observation for civilian and military applications.

In this episode I am speaking to Damian Jamroz and Grzegorz Marzec of the Polish NewSpace company SatRevolution. The company was founded in 2016 and specialises in real-time earth observation for civilian and military applications.

SatRevolution has launched three satellites to date, with the last launch occurring at the beginning of September 2020 on an Arianespace Vega rocket, while the next one is planned for December 2020 on a SpaceX Falcon 9 rocket. These satellites are all milestones towards building an Earth-observation constellation that will be operational from 2023.

Recently, SatRevolution has focused on developing the STORK platform, which is scheduled to be launched in June 2021. The goal of STORK is to develop a shared-services capability so that multiple satellites can be launched within one platform and benefit from SatRevolution’s Earth-observation capabilities. Hence, SatRevolution will focus on designing, manufacturing and integrating the platform satellite, while their customers and external partners can focus on work related to development of their own technologies and experiments. In this wide-ranging episode of the Aerospace Engineering Podcast we talk about:

- the history of SatRevolution

- why Earth-observation satellites are such a hot topic at the moment

- the details of SatRevolution’s previous satellites and the upcoming STORK mission

- how SatRevolution is using AI for earth observation

- and what the future holds for the company.

Podcast: Play in new window | Download | Embed

Subscribe: Apple Podcasts | TuneIn | RSS

This episode of the Aerospace Engineering Podcast is brought to you by my patrons on Patreon. Patreon is a way for me to receive regular donations from listeners whenever I release a new episode, and with the help of these generous donors I have been able to pay for much of the expenses, hosting and travels costs that accrue in the production of this podcast. If you would like to support the podcast as a patron, then head over to my Patreon page. There are multiple levels of support, but anything from $1 an episode is highly appreciated. Thank you for your support!

Selected Links from the Episode

- SatRevolution webpage, Twitter & LinkedIn

- The STORK platform:

- Earth-observation satellites

- SatRevolution’s previous missions

- SatRevolution partners with Momentus (previously on this podcast)

Sign-up to the monthly Aerospaced newsletter

Recent Posts

- Podcast Ep. #49 – 9T Labs is Producing High-Performance Composite Materials Through 3D Printing

- Podcast Ep. #48 – Engineering Complex Systems for Harsh Environments with First Mode

- Podcast Ep. #47 – Möbius Aero and MμZ Motion: a Winning Team for Electric Air Racing

- Podcast Ep. #46 – Tow-Steered Composite Materials with iCOMAT

- Podcast Ep. #45 – Industrialising Rocket Science with Rocket Factory Augsburg

Topics

- 3D Printing (4)

- Aerodynamics (29)

- Aerospace Engineering (11)

- Air-to-Air Refuelling (1)

- Aircraft (16)

- Autonomy (2)

- Bio-mimicry (9)

- Case Studies (15)

- Composite Materials (25)

- Composites (7)

- Computational Fluid Dynamics (2)

- Contra-Rotation (1)

- Design (2)

- Digitisation (2)

- Drones (1)

- Education (1)

- Electric Aviation (11)

- Engineering (23)

- General Aerospace (28)

- Gliders (1)

- Helicopters (3)

- History (26)

- Jet Engines (4)

- Machine Learning (4)

- Manufacturing (12)

- Military (2)

- Modelling (2)

- Nanomaterials (2)

- NASA (2)

- New Space (11)

- News (3)

- Nonlinear Structures (1)

- Novel Materials/Tailored Structures (14)

- Personal Aviation (5)

- Podcast (45)

- Propulsion (9)

- Renewable Energy (2)

- Renewables (1)

- Rocket Science (17)

- Satellites (8)

- Shape Adaptation (1)

- Smart Materials (1)

- Space (12)

- Space Junk (1)

- Sport Airplanes (2)

- Startup (19)

- STOL (1)

- Structural Efficiency (5)

- Structural Mechanics (1)

- Superalloys (1)

- Supersonic Flight (2)

- Technology (18)

- UAVs (2)

- Virtual Reality (2)

- VTOL (3)

- Privacy & Cookies: This site uses cookies. By continuing to use this website, you agree to their use.

To find out more, including how to control cookies, see here: Cookie Policy